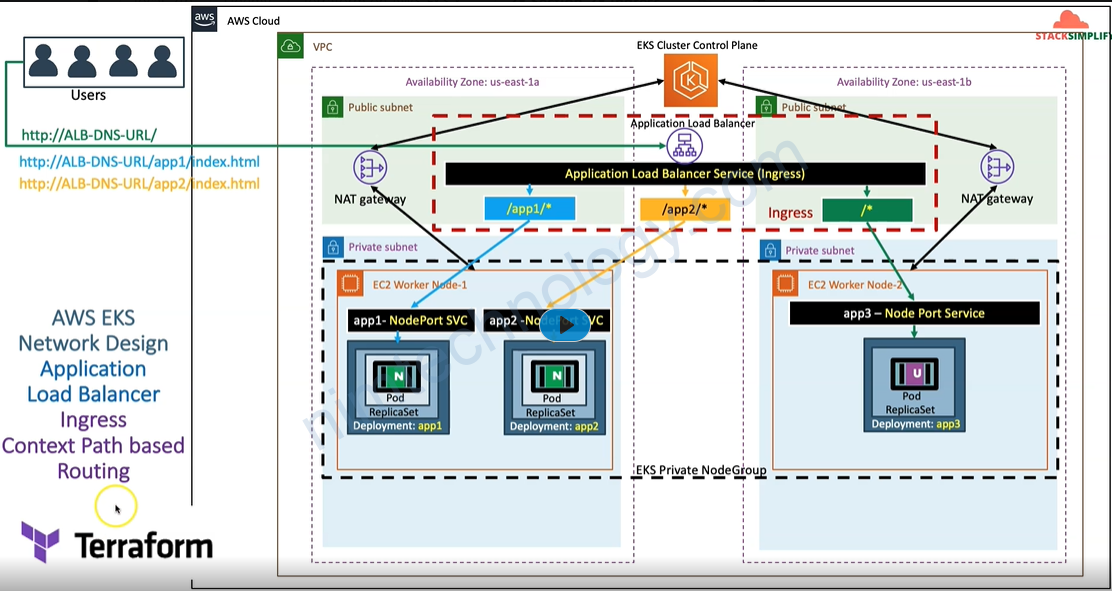

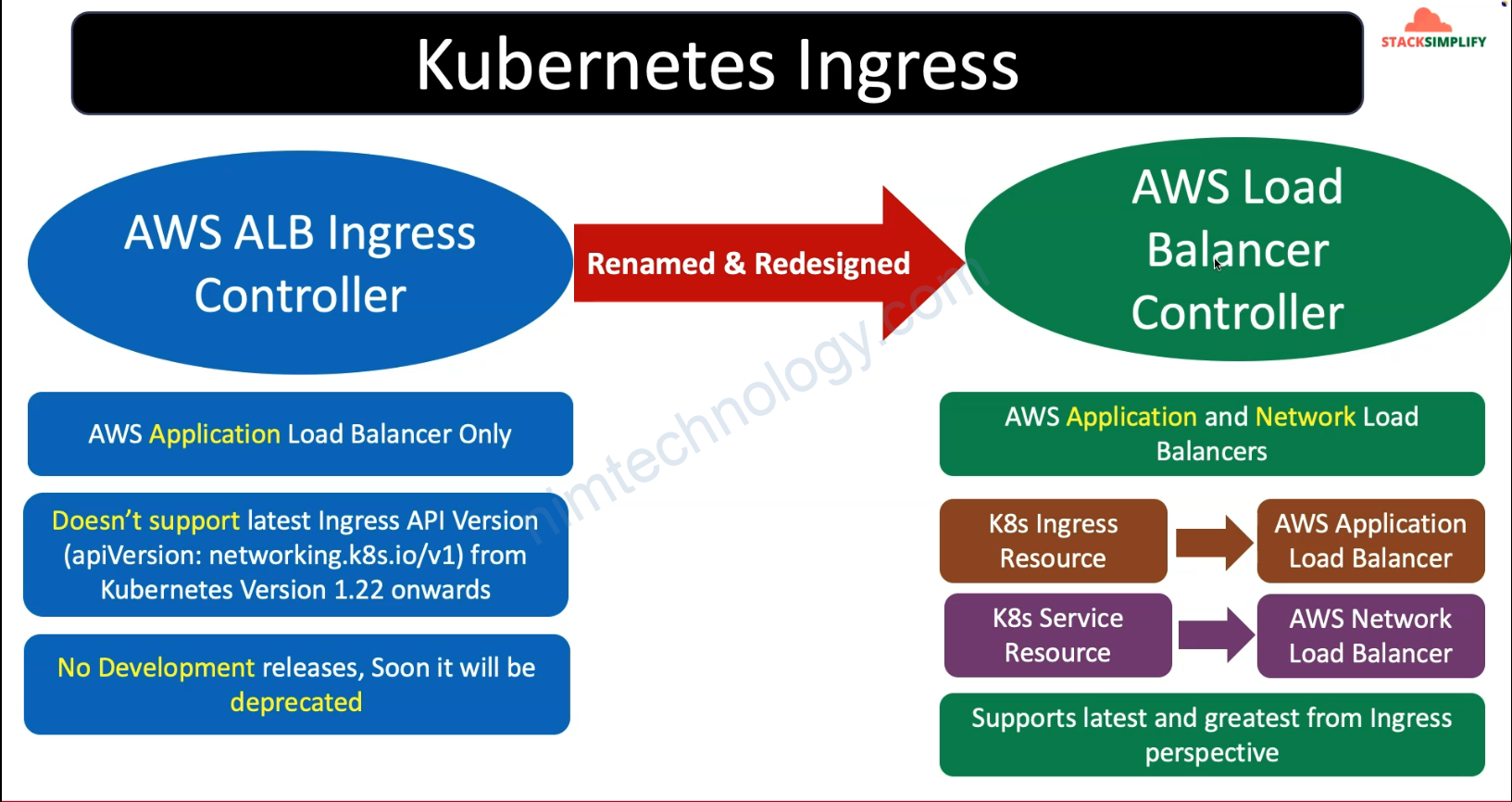

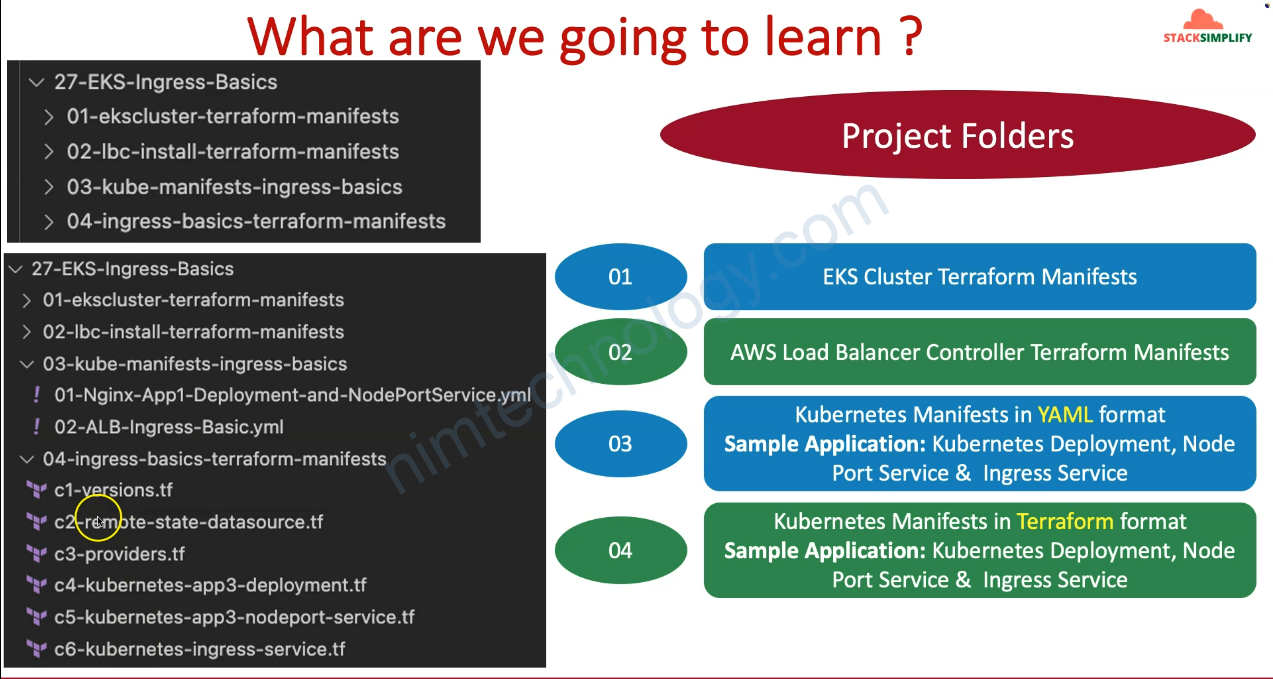

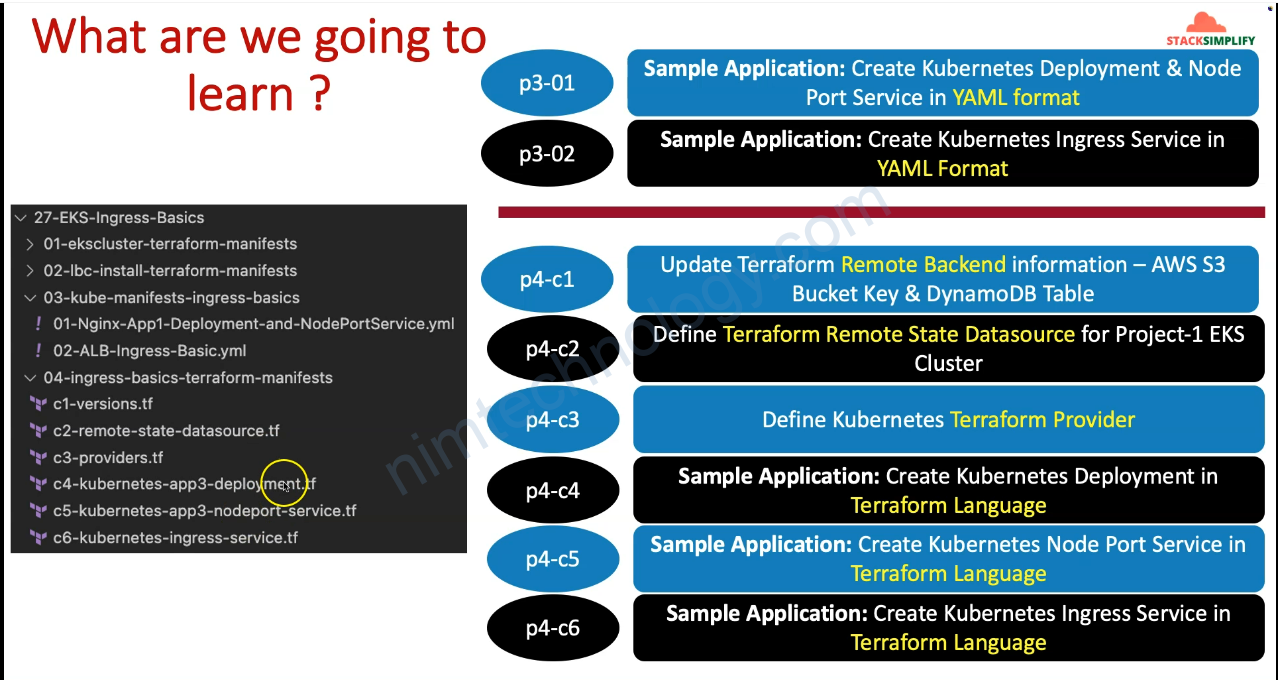

1) Introduction to all Ingress

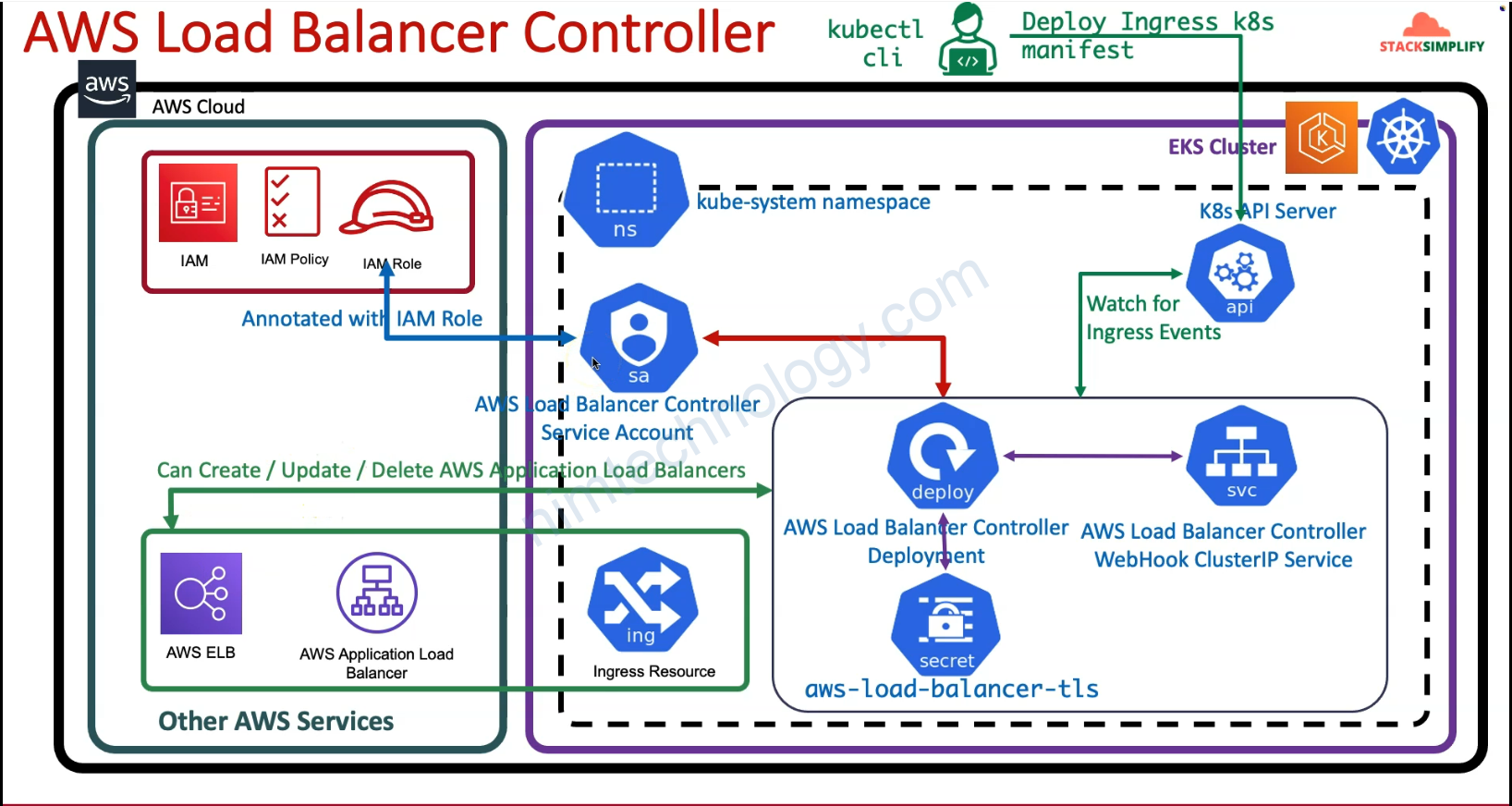

2) AWS Load Balancer Controller

2.1) Introduction

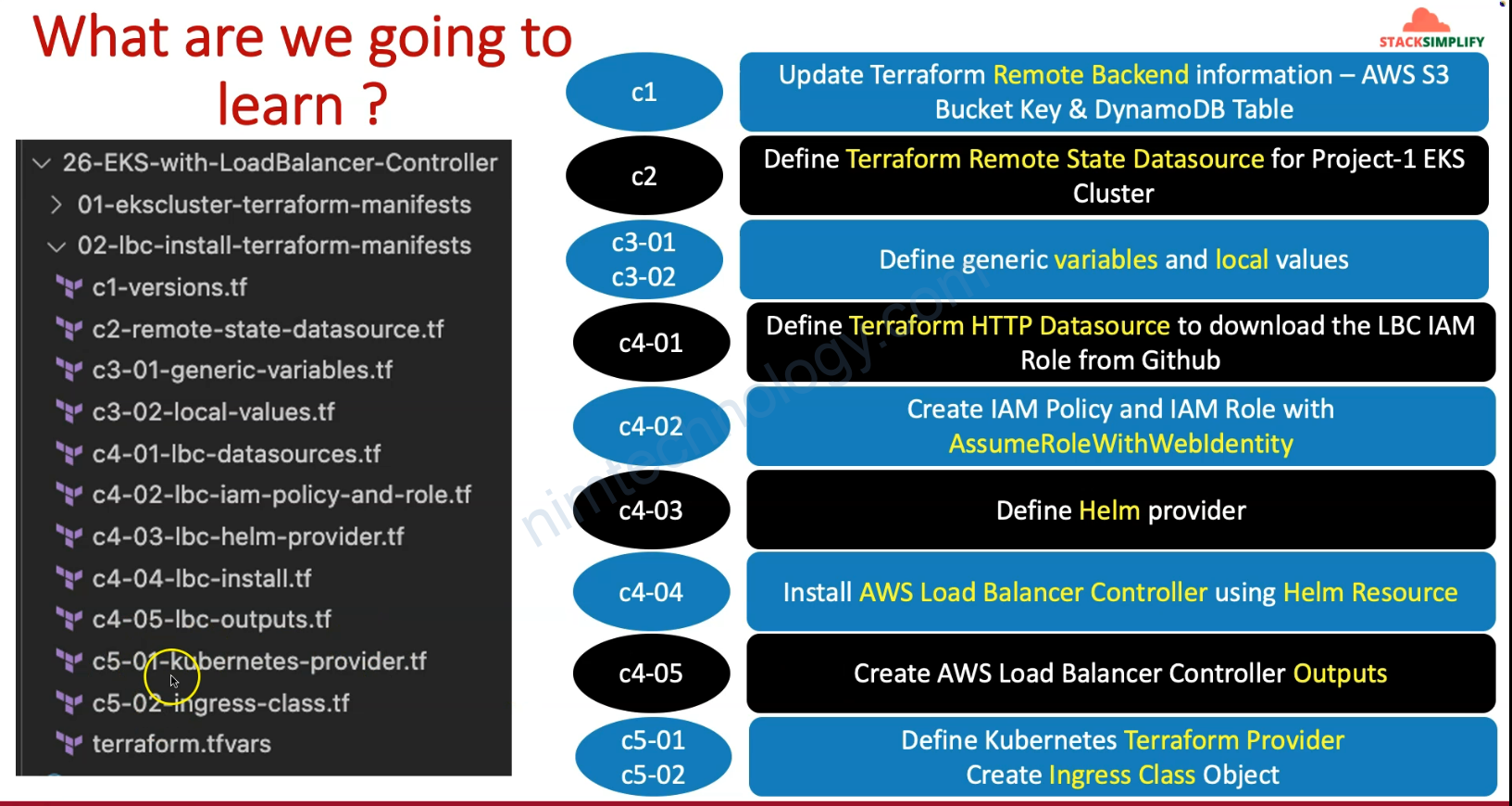

2.2) Installing AWS Load Balancer Controller with Terraform

We start with this file:

c4-01-lbc-datasources.tf

# Datasource: AWS Load Balancer Controller IAM policy, fetched from the aws-load-balancer-controller Git repo (latest)

data "http" "lbc_iam_policy" {

url = "https://raw.githubusercontent.com/kubernetes-sigs/aws-load-balancer-controller/main/docs/install/iam_policy.json"

# Optional request headers

request_headers = {

Accept = "application/json"

}

}

output "lbc_iam_policy" {

value = data.http.lbc_iam_policy.body

}

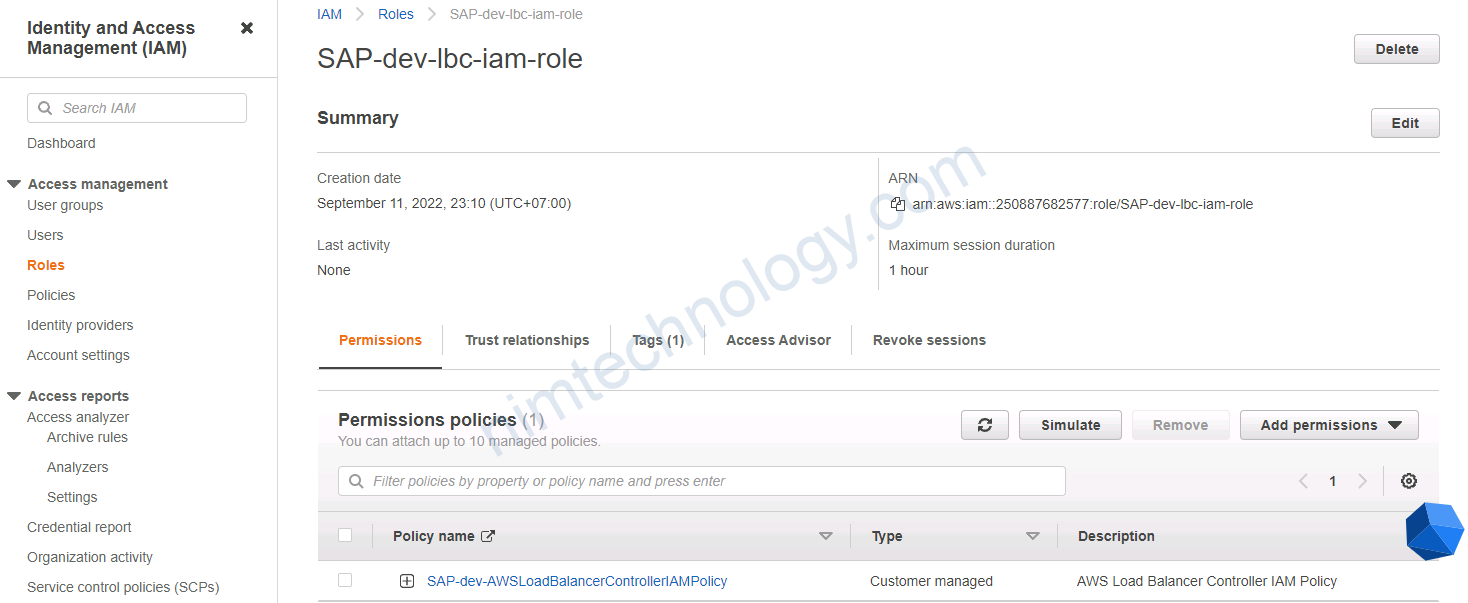

First, the "http" data source downloads iam_policy.json.

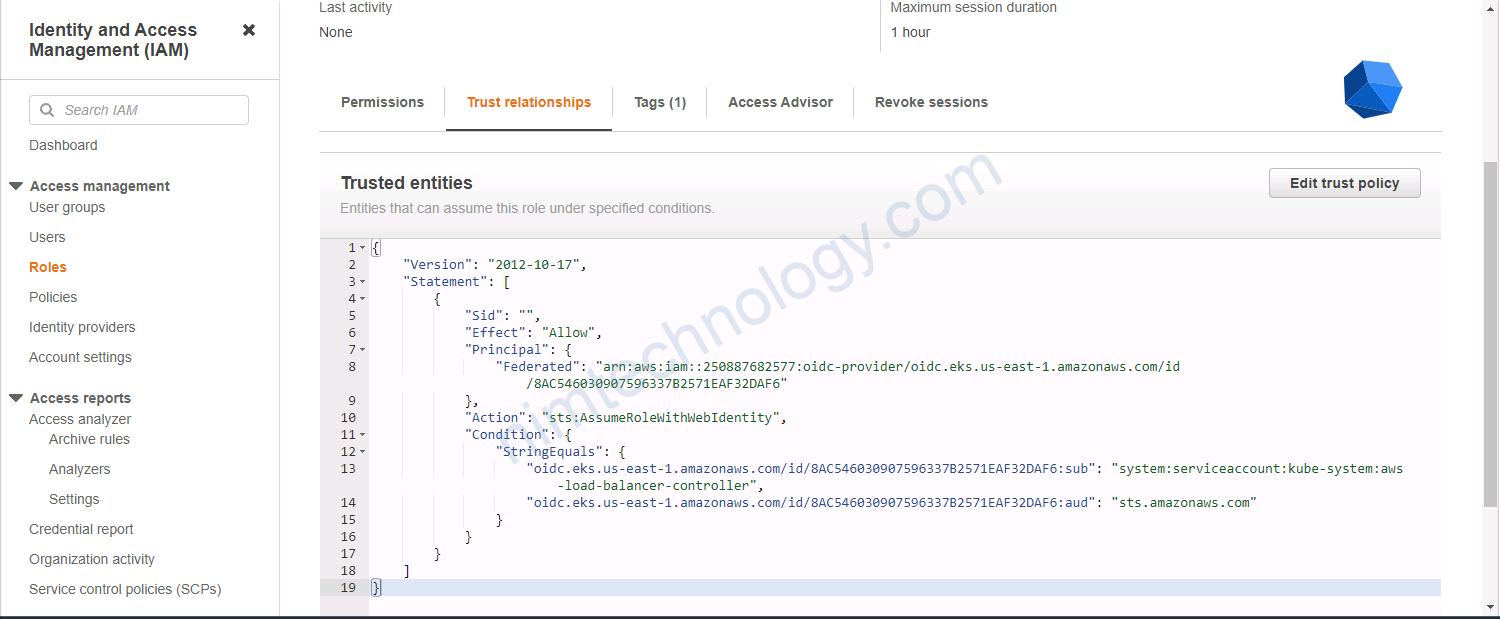

Next, create the IAM policy and a role that can be assumed, then attach the policy to the role.

c4-02-lbc-iam-policy-and-role.tf


# Resource: Create AWS Load Balancer Controller IAM Policy

resource "aws_iam_policy" "lbc_iam_policy" {

name = "${local.name}-AWSLoadBalancerControllerIAMPolicy"

path = "/"

description = "AWS Load Balancer Controller IAM Policy"

policy = data.http.lbc_iam_policy.body

}

output "lbc_iam_policy_arn" {

value = aws_iam_policy.lbc_iam_policy.arn

}

# Resource: Create IAM Role

resource "aws_iam_role" "lbc_iam_role" {

name = "${local.name}-lbc-iam-role"

# Terraform's "jsonencode" function converts a Terraform expression result to valid JSON syntax.

assume_role_policy = jsonencode({

Version = "2012-10-17"

Statement = [

{

Action = "sts:AssumeRoleWithWebIdentity"

Effect = "Allow"

Sid = ""

Principal = {

Federated = "${data.terraform_remote_state.eks.outputs.aws_iam_openid_connect_provider_arn}"

}

Condition = {

StringEquals = {

"${data.terraform_remote_state.eks.outputs.aws_iam_openid_connect_provider_extract_from_arn}:aud": "sts.amazonaws.com",

"${data.terraform_remote_state.eks.outputs.aws_iam_openid_connect_provider_extract_from_arn}:sub": "system:serviceaccount:kube-system:aws-load-balancer-controller"

}

}

},

]

})

tags = {

tag-key = "AWSLoadBalancerControllerIAMPolicy"

}

}

# Associate Load Balancer Controller IAM Policy to IAM Role

resource "aws_iam_role_policy_attachment" "lbc_iam_role_policy_attach" {

policy_arn = aws_iam_policy.lbc_iam_policy.arn

role = aws_iam_role.lbc_iam_role.name

}

output "lbc_iam_role_arn" {

description = "AWS Load Balancer Controller IAM Role ARN"

value = aws_iam_role.lbc_iam_role.arn

}

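The resource "aws_iam_policy" "lbc_iam_policy" creates the policy from the file downloaded in the previous step.

The trust policy on the role then allows only the workload running under the aws-load-balancer-controller service account in kube-system to assume the role, which gives it the permissions the AWS Load Balancer Controller needs.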
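For reference, the ":sub" condition in the trust policy matches a service account like the one below. This is only a sketch of what the Helm chart is expected to render once the role-arn annotation is set; the account ID and role name in the ARN are placeholders, not values from this setup.

```yaml
# Sketch only: the ARN is a placeholder (hypothetical account ID and role name).
apiVersion: v1
kind: ServiceAccount
metadata:
  # Name and namespace must match the ":sub" condition
  # system:serviceaccount:kube-system:aws-load-balancer-controller
  name: aws-load-balancer-controller
  namespace: kube-system
  annotations:
    # IRSA: pods using this service account can assume the role via the cluster OIDC provider
    eks.amazonaws.com/role-arn: arn:aws:iam::111122223333:role/example-lbc-iam-role
```

If the name or namespace differs from the condition, the AssumeRoleWithWebIdentity call is denied and the controller cannot manage load balancers.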

Next, configure the Helm provider so Terraform can connect to EKS.

c4-03-lbc-helm-provider.tf


# Datasource: EKS Cluster Auth

data "aws_eks_cluster_auth" "cluster" {

name = data.terraform_remote_state.eks.outputs.cluster_id

}

# HELM Provider

provider "helm" {

kubernetes {

host = data.terraform_remote_state.eks.outputs.cluster_endpoint

cluster_ca_certificate = base64decode(data.terraform_remote_state.eks.outputs.cluster_certificate_authority_data)

token = data.aws_eks_cluster_auth.cluster.token

}

}

Now comes the step that installs the AWS Load Balancer Controller with Helm.

c4-04-lbc-install.tf


# Install AWS Load Balancer Controller using HELM

# Resource: Helm Release

resource "helm_release" "loadbalancer_controller" {

depends_on = [aws_iam_role.lbc_iam_role]

name = "aws-load-balancer-controller"

repository = "https://aws.github.io/eks-charts"

chart = "aws-load-balancer-controller"

namespace = "kube-system"

# Value changes based on your Region (Below is for us-east-1)

set {

name = "image.repository"

value = "602401143452.dkr.ecr.us-east-1.amazonaws.com/amazon/aws-load-balancer-controller"

# Changes based on Region - This is for us-east-1 Additional Reference: https://docs.aws.amazon.com/eks/latest/userguide/add-ons-images.html

}

set {

name = "serviceAccount.create"

value = "true"

}

set {

name = "serviceAccount.name"

value = "aws-load-balancer-controller"

}

set {

name = "serviceAccount.annotations.eks\\.amazonaws\\.com/role-arn"

value = "${aws_iam_role.lbc_iam_role.arn}"

}

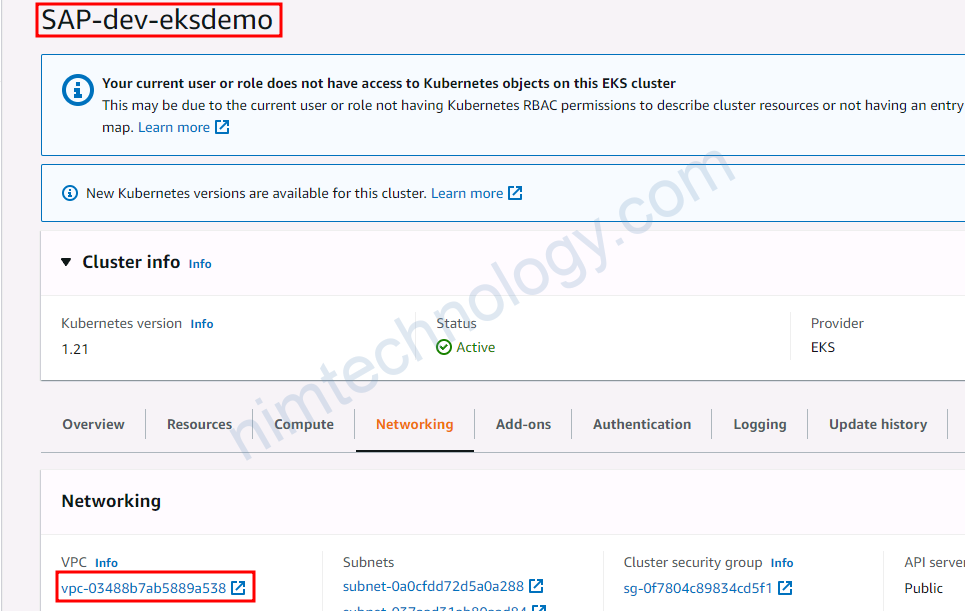

set {

name = "vpcId"

value = "${data.terraform_remote_state.eks.outputs.vpc_id}"

}

set {

name = "region"

value = "${var.aws_region}"

}

set {

name = "clusterName"

value = "${data.terraform_remote_state.eks.outputs.cluster_id}"

}

}

In the file above, note the following:

repository = "https://aws.github.io/eks-charts"

chart = "aws-load-balancer-controller"

These two lines point to the public Helm chart.

Next, a number of values are set: image.repository, serviceAccount.create (create the service account), and so on.
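As a rough cross-check, the set blocks correspond to a Helm values file like the sketch below. The keys are the ones used in the Terraform file; the role ARN, VPC ID, and cluster name are hypothetical placeholders, and the region is assumed to be us-east-1 to match the image repository.

```yaml
# values.yaml sketch mirroring the helm_release "set" blocks.
image:
  repository: 602401143452.dkr.ecr.us-east-1.amazonaws.com/amazon/aws-load-balancer-controller
serviceAccount:
  create: true
  name: aws-load-balancer-controller
  annotations:
    # Placeholder ARN (hypothetical); Terraform fills in aws_iam_role.lbc_iam_role.arn
    eks.amazonaws.com/role-arn: arn:aws:iam::111122223333:role/example-lbc-iam-role
vpcId: vpc-0123456789abcdef0       # hypothetical; comes from the EKS remote state
region: us-east-1                  # assumed; comes from var.aws_region
clusterName: example-eks-cluster   # hypothetical; comes from the EKS remote state
```

Note that the dots in the annotation key are escaped in Terraform (eks\\.amazonaws\\.com/role-arn) only because Helm's set syntax treats an unescaped dot as a nesting separator; a values file needs no escaping.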

For Terraform to interact with your Kubernetes cluster, we configure a "kubernetes" provider.

Here are the resources in the kube-system namespace:

root@work-space-u20:~# kubectl get all -n kube-system

NAME READY STATUS RESTARTS AGE

pod/aws-load-balancer-controller-747df8b87c-rzxxw 1/1 Running 0 6m26s

pod/aws-load-balancer-controller-747df8b87c-skpdh 1/1 Running 0 6m26s

pod/aws-node-ntwlx 1/1 Running 0 40m

pod/coredns-66cb55d4f4-65wrb 1/1 Running 0 44m

pod/coredns-66cb55d4f4-zxjrw 1/1 Running 0 44m

pod/kube-proxy-rvp6x 1/1 Running 0 40m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/aws-load-balancer-webhook-service ClusterIP 172.20.34.214 <none> 443/TCP 6m28s

service/kube-dns ClusterIP 172.20.0.10 <none> 53/UDP,53/TCP 44m

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

daemonset.apps/aws-node 1 1 1 1 1 <none> 44m

daemonset.apps/kube-proxy 1 1 1 1 1 <none> 44m

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/aws-load-balancer-controller 2/2 2 2 6m27s

deployment.apps/coredns 2/2 2 2 44m

NAME DESIRED CURRENT READY AGE

replicaset.apps/aws-load-balancer-controller-747df8b87c 2 2 2 6m28s

replicaset.apps/coredns-66cb55d4f4 2 2 2 44m

c5-01-kubernetes-provider.tf


# Terraform Kubernetes Provider

provider "kubernetes" {

host = data.terraform_remote_state.eks.outputs.cluster_endpoint

cluster_ca_certificate = base64decode(data.terraform_remote_state.eks.outputs.cluster_certificate_authority_data)

token = data.aws_eks_cluster_auth.cluster.token

}

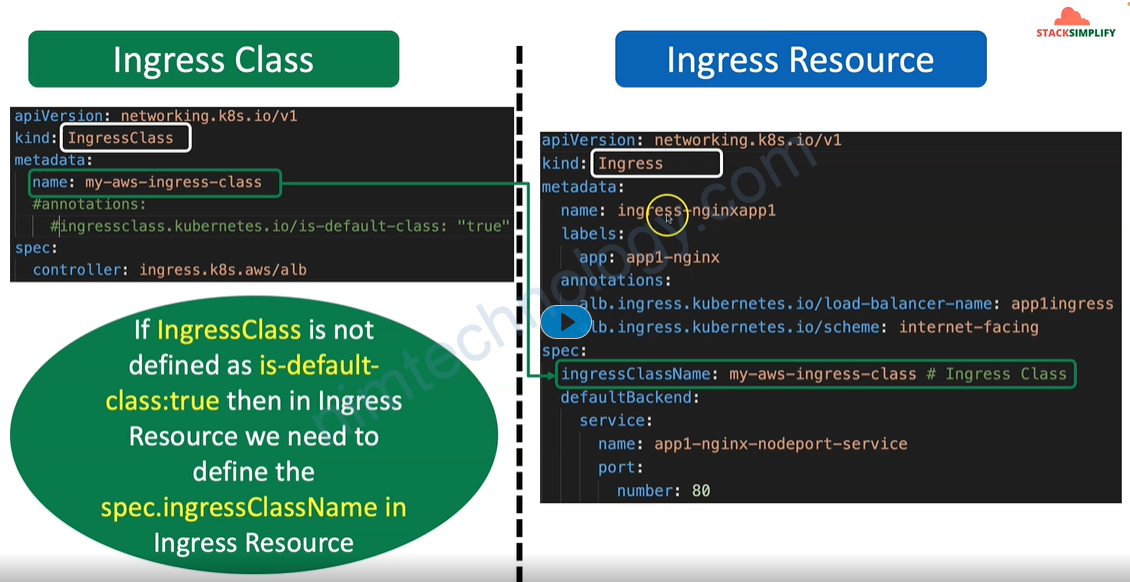

After this step you have a new ingress class:

root@work-space-u20:~# kubectl get ingressclass

NAME CONTROLLER PARAMETERS AGE

alb ingress.k8s.aws/alb <none> 13m

my-aws-ingress-class ingress.k8s.aws/alb <none> 4m4s
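For readers who prefer a plain manifest, an ingress class like my-aws-ingress-class can be sketched as below. The name and controller come from the output above; whether the class in this setup is marked as the default is not shown, so the annotation is an assumption and can be dropped.

```yaml
# Sketch of an IngressClass handled by the AWS Load Balancer Controller.
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  name: my-aws-ingress-class
  annotations:
    # Assumption: makes this the class used by Ingresses without spec.ingressClassName
    ingressclass.kubernetes.io/is-default-class: "true"
spec:
  # Controller identifier shown in the CONTROLLER column of "kubectl get ingressclass"
  controller: ingress.k8s.aws/alb
```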

Inspect the deployment:

kubectl describe deployment.apps/aws-load-balancer-controller -n kube-system

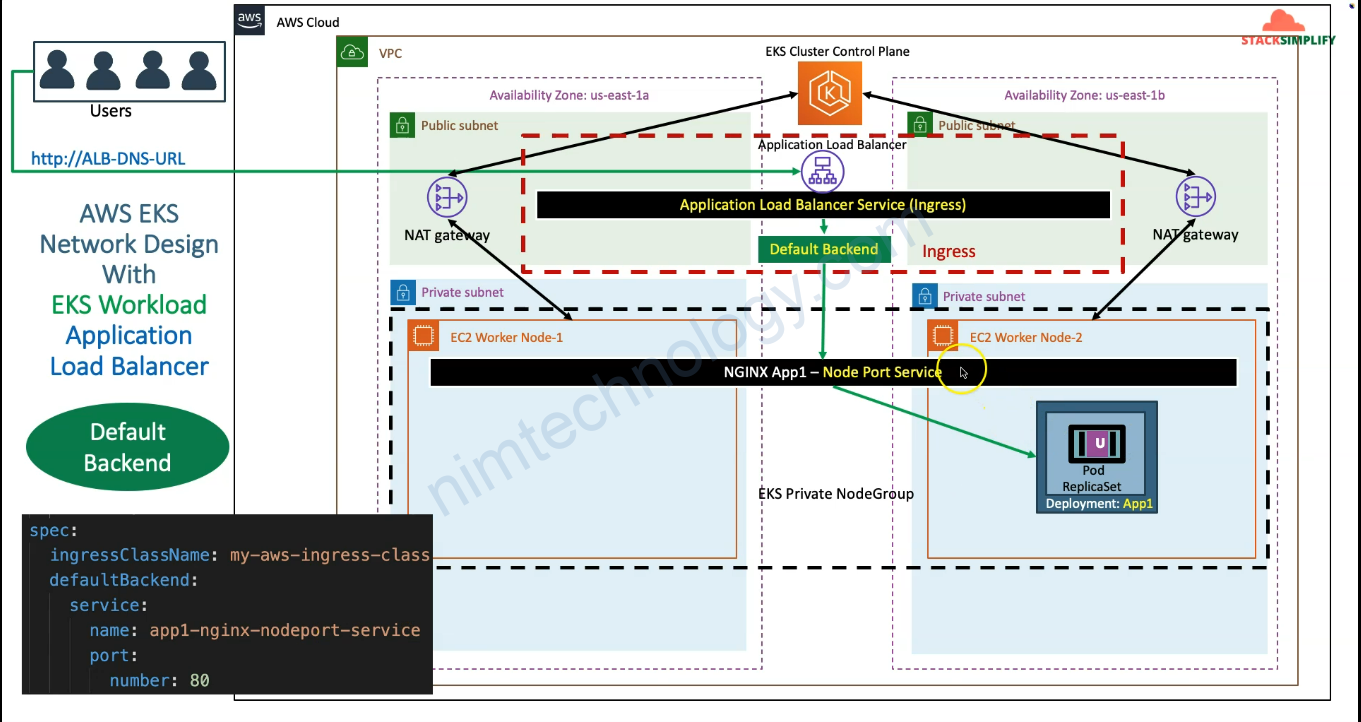

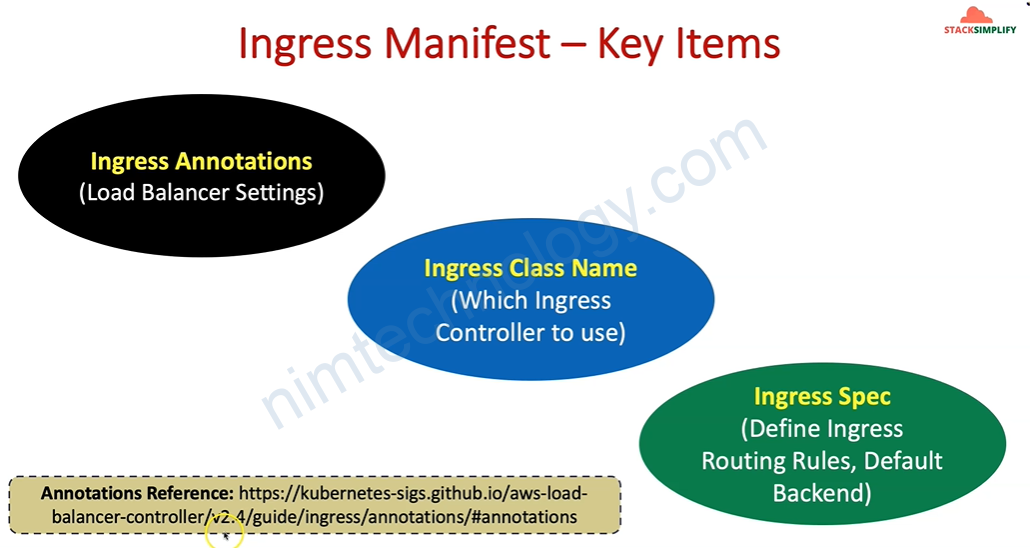

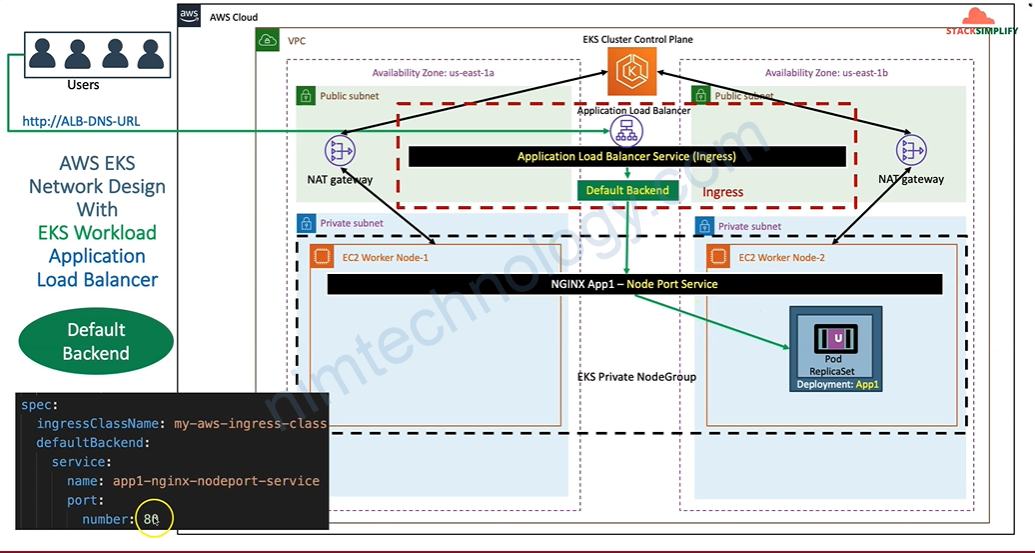

3) Ingress Basics

3.1) Introduction

https://kubernetes-sigs.github.io/aws-load-balancer-controller/v2.4/

Now let's try applying the manifest files.

deployment-service.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: app3-nginx-deployment

labels:

app: app3-nginx

spec:

replicas: 1

selector:

matchLabels:

app: app3-nginx

template:

metadata:

labels:

app: app3-nginx

spec:

containers:

- name: app3-nginx

image: stacksimplify/kubenginx:1.0.0

ports:

- containerPort: 80

---

apiVersion: v1

kind: Service

metadata:

name: app3-nginx-nodeport-service

labels:

app: app3-nginx

annotations:

#Important Note: Need to add health check path annotations in service level if we are planning to use multiple targets in a load balancer

# alb.ingress.kubernetes.io/healthcheck-path: /index.html

spec:

type: NodePort

selector:

app: app3-nginx

ports:

- port: 80

targetPort: 80

Now we have an Ingress file:

# Annotations Reference: https://kubernetes-sigs.github.io/aws-load-balancer-controller/latest/guide/ingress/annotations/

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: ingress-basics

labels:

app: app3-nginx

annotations:

# Load Balancer Name

alb.ingress.kubernetes.io/load-balancer-name: ingress-basics

#kubernetes.io/ingress.class: "alb" (OLD INGRESS CLASS NOTATION - STILL WORKS BUT RECOMMENDED TO USE IngressClass Resource)

# Additional Notes: https://kubernetes-sigs.github.io/aws-load-balancer-controller/v2.3/guide/ingress/ingress_class/#deprecated-kubernetesioingressclass-annotation

# Ingress Core Settings

alb.ingress.kubernetes.io/scheme: internet-facing

# Health Check Settings

alb.ingress.kubernetes.io/healthcheck-protocol: HTTP

alb.ingress.kubernetes.io/healthcheck-port: traffic-port

alb.ingress.kubernetes.io/healthcheck-path: /index.html

alb.ingress.kubernetes.io/healthcheck-interval-seconds: '15'

alb.ingress.kubernetes.io/healthcheck-timeout-seconds: '5'

alb.ingress.kubernetes.io/success-codes: '200'

alb.ingress.kubernetes.io/healthy-threshold-count: '2'

alb.ingress.kubernetes.io/unhealthy-threshold-count: '2'

spec:

ingressClassName: my-aws-ingress-class # Ingress Class

defaultBackend:

service:

name: app3-nginx-nodeport-service

port:

number: 80

# 1. If "spec.ingressClassName: my-aws-ingress-class" not specified, will reference default ingress class on this kubernetes cluster

# 2. Default Ingress class is nothing but for which ingress class we have the annotation `ingressclass.kubernetes.io/is-default-class: "true"`

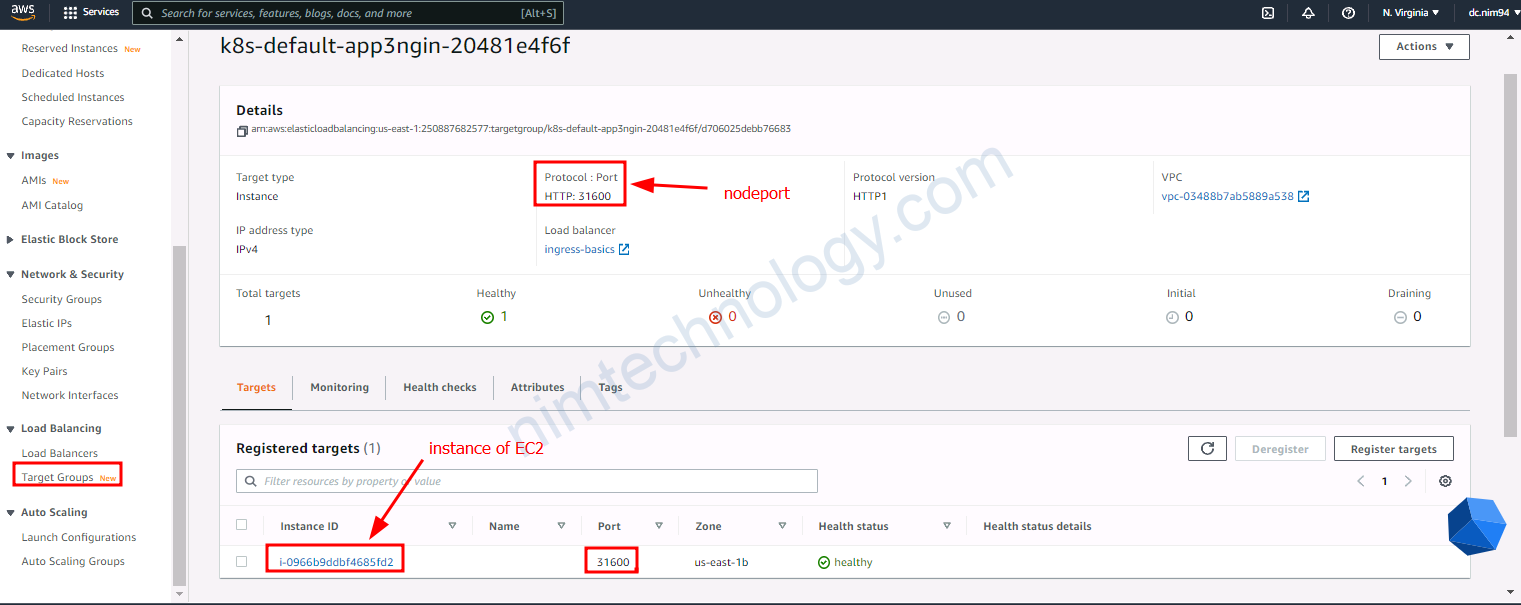

After you apply it, you will see an Ingress with a domain name.

root@work-space-u20:~/eks# kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

ingress-basics my-aws-ingress-class * ingress-basics-228990159.us-east-1.elb.amazonaws.com 80 24s

Open a browser and go to http://ingress-basics-228990159.us-east-1.elb.amazonaws.com

You can clearly see that the load balancer points at the NodePort of the server (VM).

Now delete the deployment, the service, and the ingress.

root@work-space-u20:~/eks# kubectl delete -f deployment-service.yaml

deployment.apps "app3-nginx-deployment" deleted

service "app3-nginx-nodeport-service" deleted

root@work-space-u20:~/eks# kubectl delete -f ingress.yaml

ingress.networking.k8s.io "ingress-basics" deleted

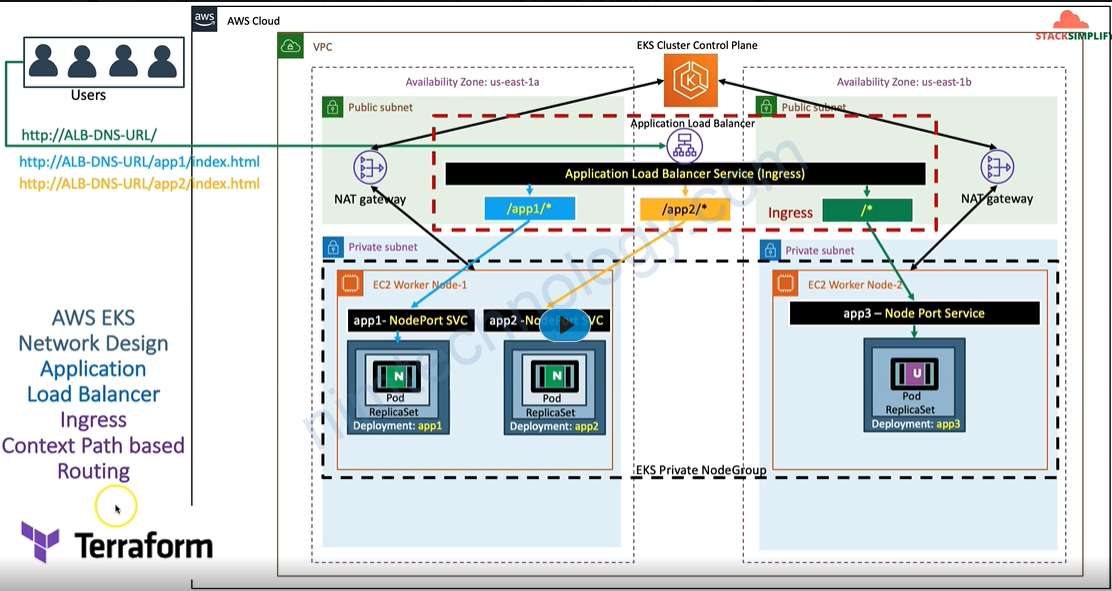

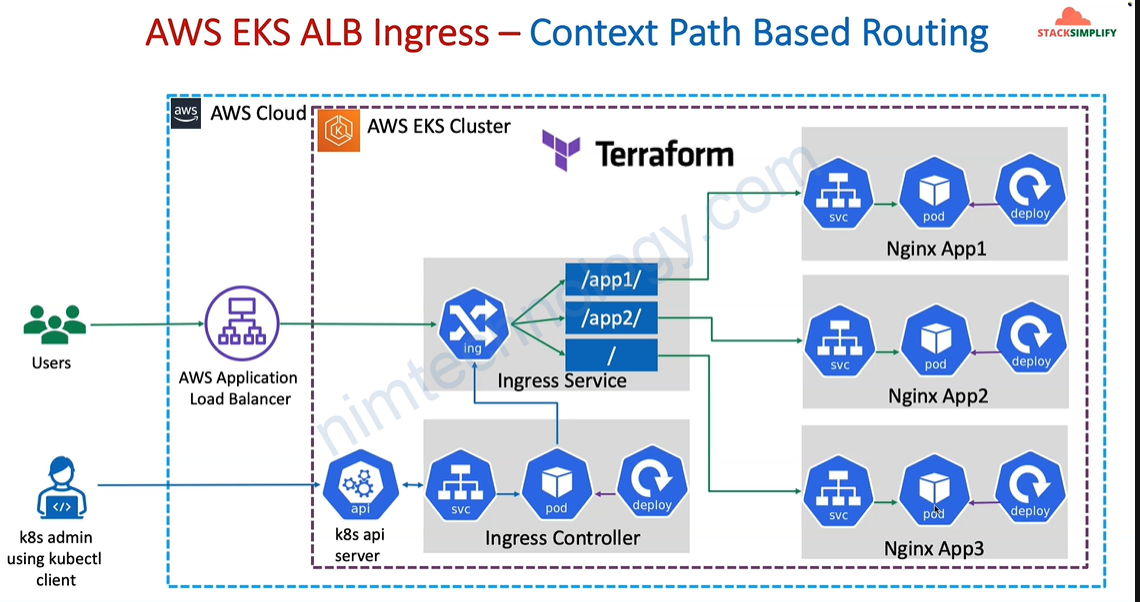

4) Ingress Context Path based Routing

4.1) Introduction

# Annotations Reference: https://kubernetes-sigs.github.io/aws-load-balancer-controller/latest/guide/ingress/annotations/

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: ingress-cpr

annotations:

# Load Balancer Name

alb.ingress.kubernetes.io/load-balancer-name: ingress-cpr

# Ingress Core Settings

#kubernetes.io/ingress.class: "alb" (OLD INGRESS CLASS NOTATION - STILL WORKS BUT RECOMMENDED TO USE IngressClass Resource)

alb.ingress.kubernetes.io/scheme: internet-facing

# Health Check Settings

alb.ingress.kubernetes.io/healthcheck-protocol: HTTP

alb.ingress.kubernetes.io/healthcheck-port: traffic-port

#Important Note: Need to add health check path annotations in service level if we are planning to use multiple targets in a load balancer

alb.ingress.kubernetes.io/healthcheck-interval-seconds: '15'

alb.ingress.kubernetes.io/healthcheck-timeout-seconds: '5'

alb.ingress.kubernetes.io/success-codes: '200'

alb.ingress.kubernetes.io/healthy-threshold-count: '2'

alb.ingress.kubernetes.io/unhealthy-threshold-count: '2'

spec:

ingressClassName: my-aws-ingress-class # Ingress Class

defaultBackend:

service:

name: app3-nginx-nodeport-service

port:

number: 80

rules:

- http:

paths:

- path: /app1

pathType: Prefix

backend:

service:

name: app1-nginx-nodeport-service

port:

number: 80

- path: /app2

pathType: Prefix

backend:

service:

name: app2-nginx-nodeport-service

port:

number: 80

# - path: /

# pathType: Prefix

# backend:

# service:

# name: app3-nginx-nodeport-service

# port:

# number: 80

# Important Note-1: In path based routing order is very important, if we are going to use "/*" (Root Context), try to use it at the end of all rules.

# 1. If "spec.ingressClassName: my-aws-ingress-class" not specified, will reference default ingress class on this kubernetes cluster

# 2. Default Ingress class is nothing but for which ingress class we have the annotation `ingressclass.kubernetes.io/is-default-class: "true"`
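The "Important Note" in the manifest says the health check path moves to the service level once several backends share one load balancer. A minimal sketch for the app1 backend follows; the label app1-nginx and the path /app1/index.html are assumptions made by analogy with the app3 manifest, not values taken from this setup.

```yaml
# Sketch: per-service health check path for one backend of the path-based Ingress.
apiVersion: v1
kind: Service
metadata:
  name: app1-nginx-nodeport-service
  labels:
    app: app1-nginx            # assumed label
  annotations:
    # Each backend gets its own path, so the Ingress no longer sets healthcheck-path
    alb.ingress.kubernetes.io/healthcheck-path: /app1/index.html   # assumed path
spec:
  type: NodePort
  selector:
    app: app1-nginx            # assumed label
  ports:
    - port: 80
      targetPort: 80
```

The app2 and app3 services would carry the same annotation with their own paths.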

You can find the Terraform code here:

https://github.com/mrnim94/terraform-aws/tree/master/eks-ingress

You can also look at my module:

https://registry.terraform.io/modules/mrnim94/eks-alb-ingress/aws/latest

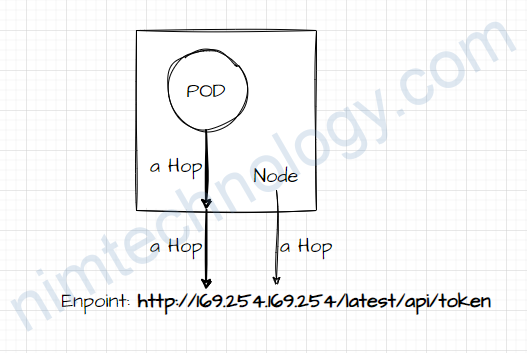

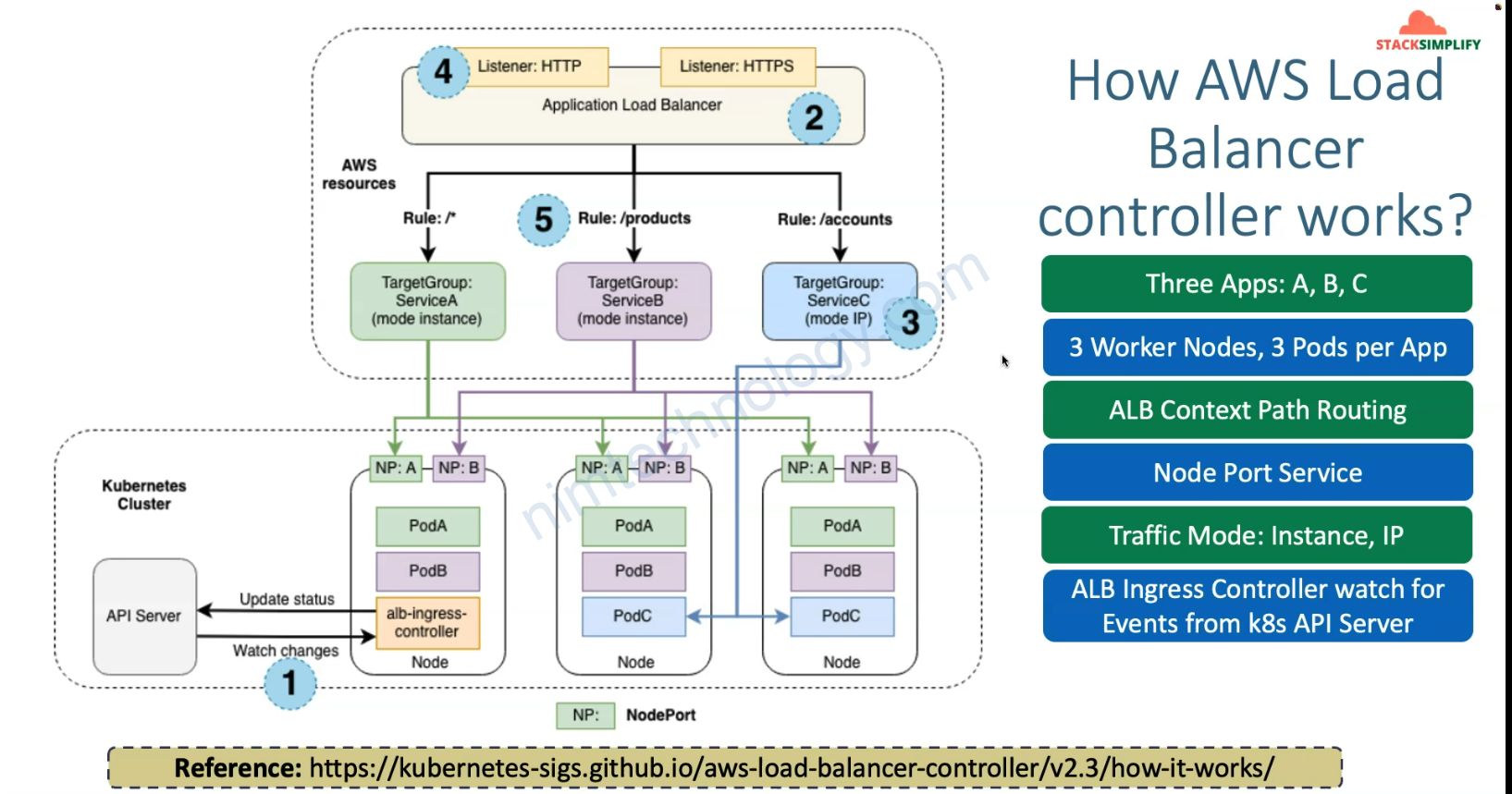

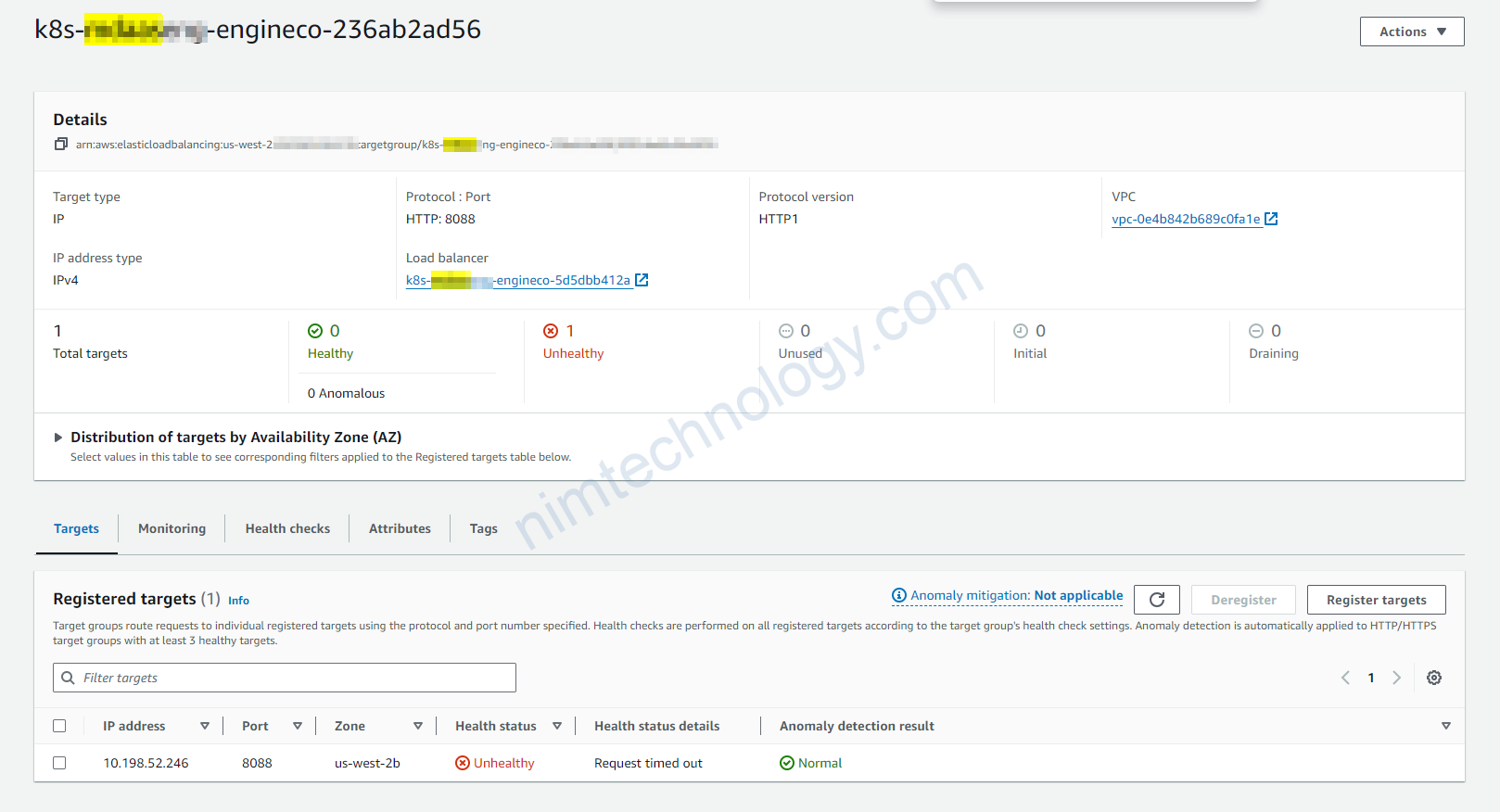

5) How to Route Traffic From ALB to Pod IPs directly.

Let's review the two ways an ALB routes traffic to pods.

Direct to Pod IPs (IP Mode)

In IP Mode, the ALB routes traffic directly to the pod IPs, bypassing the node-level networking layer. This mode is enabled by setting alb.ingress.kubernetes.io/target-type: ip in your Ingress annotations.

[Internet] -> [ALB - Application Load Balancer] -> (1) selects a pod based on rules -> (2) routes to the pod IP inside the VPC: [Pod IP 1.1] on Node 1 or [Pod IP 2.1] on Node 2

- Traffic from the internet reaches the ALB.

- The ALB routes traffic directly to the pod IPs across different nodes, bypassing the node-level networking.

- Each pod processes the traffic independently.

This mode does not require a NodePort service; a ClusterIP service is enough.
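A minimal sketch of IP mode, reusing the app3-nginx pods and my-aws-ingress-class from earlier. The service name app3-nginx-clusterip-service and the Ingress name ingress-ip-mode are hypothetical.

```yaml
# Sketch: ClusterIP service plus an Ingress that registers pod IPs as ALB targets.
apiVersion: v1
kind: Service
metadata:
  name: app3-nginx-clusterip-service   # hypothetical name
spec:
  type: ClusterIP                      # no NodePort needed in IP mode
  selector:
    app: app3-nginx
  ports:
    - port: 80
      targetPort: 80
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-ip-mode                # hypothetical name
  annotations:
    alb.ingress.kubernetes.io/scheme: internet-facing
    # Register pod IPs directly instead of node instances
    alb.ingress.kubernetes.io/target-type: ip
spec:
  ingressClassName: my-aws-ingress-class
  defaultBackend:
    service:
      name: app3-nginx-clusterip-service
      port:
        number: 80
```

IP mode relies on pods having IPs that the ALB can reach inside the VPC, which is the case with the Amazon VPC CNI (the aws-node daemonset listed earlier).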

Because there is only one pod here, you see a single IP, and since the port is 8088, the target is certainly the pod IP.

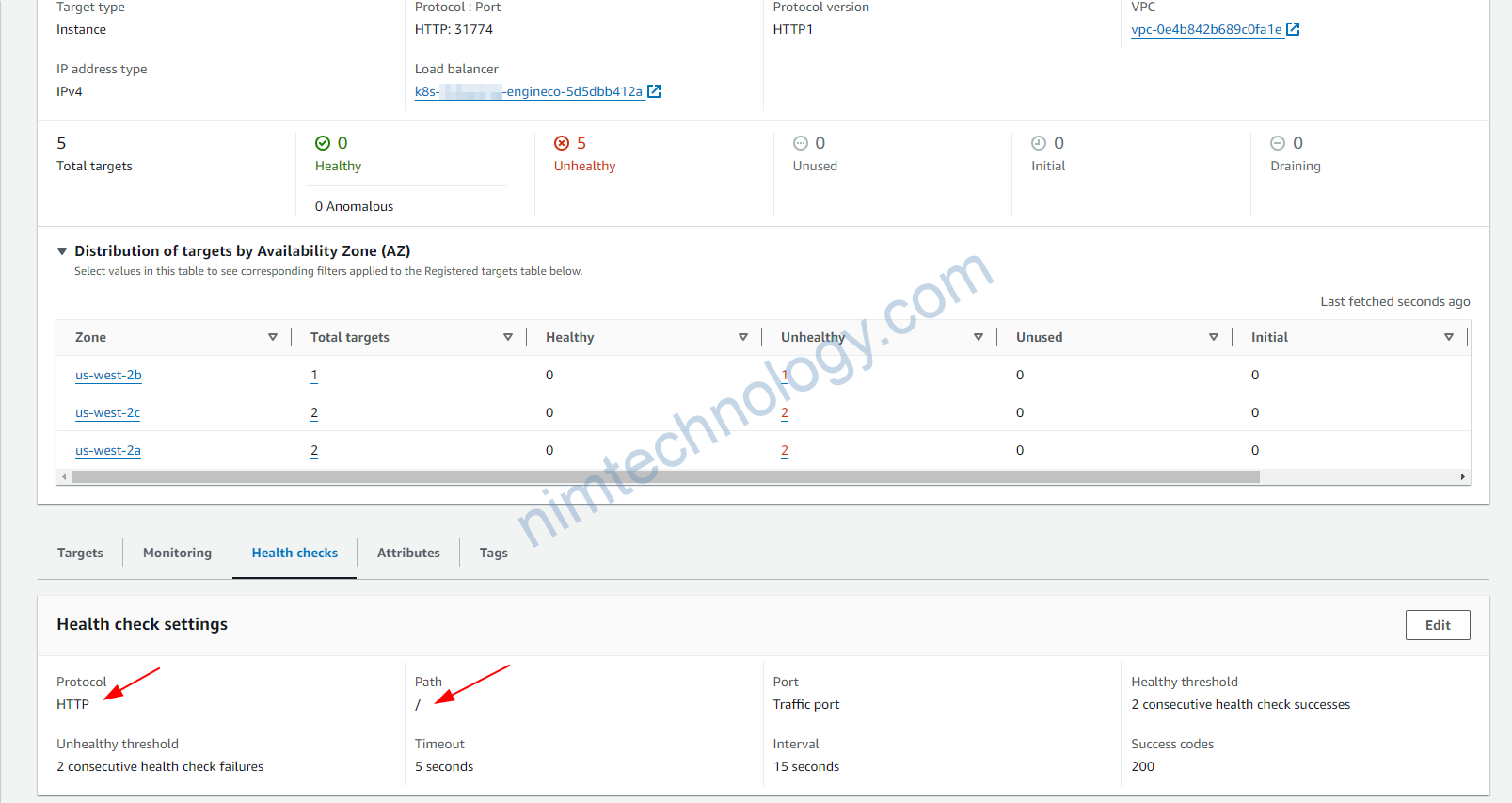

Node-Level Networking (NodePort)

In NodePort mode, the ALB sends traffic to a specific port (the NodePort) on the nodes. Kubernetes then routes this traffic to the appropriate pods based on service definitions.

[Internet] -> [ALB - Application Load Balancer] -> (1) routes to a NodePort on any node in the VPC: [NodePort 30000] on Node 1 or Node 2 -> (2) kube-proxy routes to the appropriate pod: [Service selects] -> [Pod 1.1] or [Pod 2.1]

- NodePort Routing: The ALB routes traffic to the designated NodePort on any node within the cluster. This NodePort is exposed on every node by Kubernetes and is associated with a specific service.

- Internal Routing: Kubernetes, via kube-proxy, routes the traffic from the NodePort to the appropriate pod based on the service definition. This may involve routing traffic to a pod on the same node or a different node, depending on where the selected pod is running.

Here the port is 31774, so this is certainly a NodePort (NodePorts default to the 30000-32767 range).

6) Create ALB, then create service on K8S (EKS)

In some cases you want to create the AWS load balancer first and configure many things on it.

Only afterwards do you point its traffic at some service on K8s. How do you do that?

This is not the usual flow, so here is what to do:

When you create the ALB first, you already have its ARN, along with the ARN of its target group.

On K8s, create a TargetGroupBinding resource, which lives in apiVersion: elbv2.k8s.aws/v1beta1

apiVersion: v1

kind: Service

metadata:

name: "nim-mtls-v4"

namespace: "prod-nim"

spec:

type: NodePort

selector:

app: nim-gateway

ports:

- protocol: TCP

port: 80

targetPort: 9000

nodePort: 30056

---

apiVersion: elbv2.k8s.aws/v1beta1

kind: TargetGroupBinding

metadata:

name: "nim-mtls-v4"

namespace: "prod-nim"

spec:

serviceRef:

name: "nim-mtls-v4"

port: 80

targetGroupARN: arn:aws:elasticloadbalancing:us-east-2:XXXXXXXXX:targetgroup/prod-mtls-v4-use2/42b46xxxxx4629

targetType: instance

Summary

- Service: creates a NodePort service named nim-mtls-v4 in the prod-nim namespace, exposing the application running on port 9000 of the pods labeled app: nim-gateway on port 80 and mapping it to node port 30056.

- TargetGroupBinding: binds the service nim-mtls-v4 to an AWS target group, so an AWS ELB can route external traffic to the Kubernetes service.
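The manifest above uses targetType: instance with a NodePort service. A variant that registers pod IPs instead might look like the sketch below; it assumes the target group was itself created with target type ip, and the ARN is left as a placeholder.

```yaml
# Sketch: TargetGroupBinding in IP mode (the target group ARN is a placeholder).
apiVersion: v1
kind: Service
metadata:
  name: nim-mtls-v4
  namespace: prod-nim
spec:
  type: ClusterIP            # pod IPs are registered directly, so no nodePort
  selector:
    app: nim-gateway
  ports:
    - protocol: TCP
      port: 80
      targetPort: 9000
---
apiVersion: elbv2.k8s.aws/v1beta1
kind: TargetGroupBinding
metadata:
  name: nim-mtls-v4
  namespace: prod-nim
spec:
  serviceRef:
    name: nim-mtls-v4
    port: 80
  # Placeholder: ARN of a target group created with target type "ip"
  targetGroupARN: "<target-group-arn>"
  targetType: ip
```

In this variant the target group health check should probe the pod port (9000 here) rather than a node port.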

failed calling webhook “vingress.elbv2.k8s.aws”.

context deadline exceeded

Error from server (InternalError): error when creating "ingress-alb.yaml": Internal error occurred: failed calling webhook "vingress.elbv2.k8s.aws": failed to call webhook: Post "https://aws-load-balancer-webhook-service.kube-system.svc:443/validate-networking-v1-ingress?timeout=10s": context deadline exceeded

Reference: https://stackoverflow.com/questions/70681534/failed-calling-webhook-vingress-elbv2-k8s-aws

The fix is to let the cluster control plane reach the controller's webhook port (9443) on the nodes, for example with this additional node security group rule:

node_security_group_additional_rules = {

ingress_allow_access_from_control_plane = {

type = "ingress"

protocol = "tcp"

from_port = 9443

to_port = 9443

source_cluster_security_group = true

description = "Allow access from control plane to webhook port of AWS load balancer controller"

}

#https://stackoverflow.com/questions/70681534/failed-calling-webhook-vingress-elbv2-k8s-aws

}

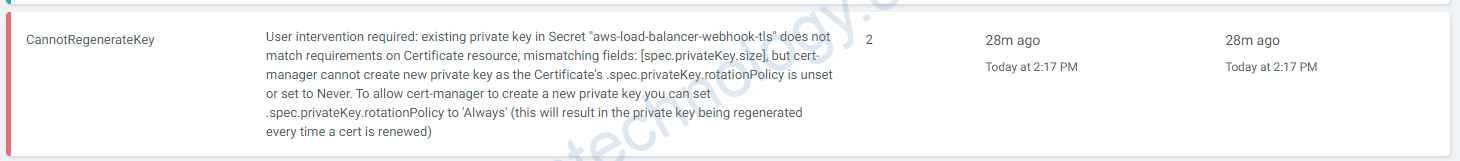

tls: failed to verify certificate: x509: certificate signed by unknown authority

Failed deploy model due to Internal error occurred: failed calling webhook "mtargetgroupbinding.elbv2.k8s.aws": failed to call webhook: Post "https://aws-load-balancer-webhook-service.kube-system.svc:443/mutate-elbv2-k8s-aws-v1beta1-targetgroupbinding?timeout=10s": tls: failed to verify certificate: x509: certificate signed by unknown authority

At this point I looked at the certificate aws-load-balancer-serving-cert, and its event reported:

CannotRegenerateKey: User intervention required: existing private key in Secret “aws-load-balancer-webhook-tls” does not match requirements on Certificate resource, mismatching fields: [spec.privateKey.size], but cert-manager cannot create new private key as the Certificate’s .spec.privateKey.rotationPolicy is unset or set to Never. To allow cert-manager to create a new private key you can set .spec.privateKey.rotationPolicy to ‘Always’ (this will result in the private key being regenerated every time a cert is renewed)

Next, delete that secret and restart the aws-load-balancer-controller pods.

Then re-sync the application's ingress.
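The event message itself suggests an alternative: let cert-manager regenerate the key by setting the rotation policy. The fragment below is a sketch of that option, not the fix used here; the namespace is assumed to be kube-system (where the webhook service lives), and all other fields of the Certificate are meant to stay as they are in the cluster.

```yaml
# Sketch: only the relevant fields of the Certificate named in the event.
apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  name: aws-load-balancer-serving-cert
  namespace: kube-system               # assumed namespace
spec:
  secretName: aws-load-balancer-webhook-tls
  privateKey:
    # Allows cert-manager to create a new private key on every renewal
    rotationPolicy: Always
  # dnsNames, issuerRef, and the remaining fields are unchanged and omitted here
```

If the Certificate is rendered by the Helm chart, a manual edit like this may be overwritten on the next upgrade.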

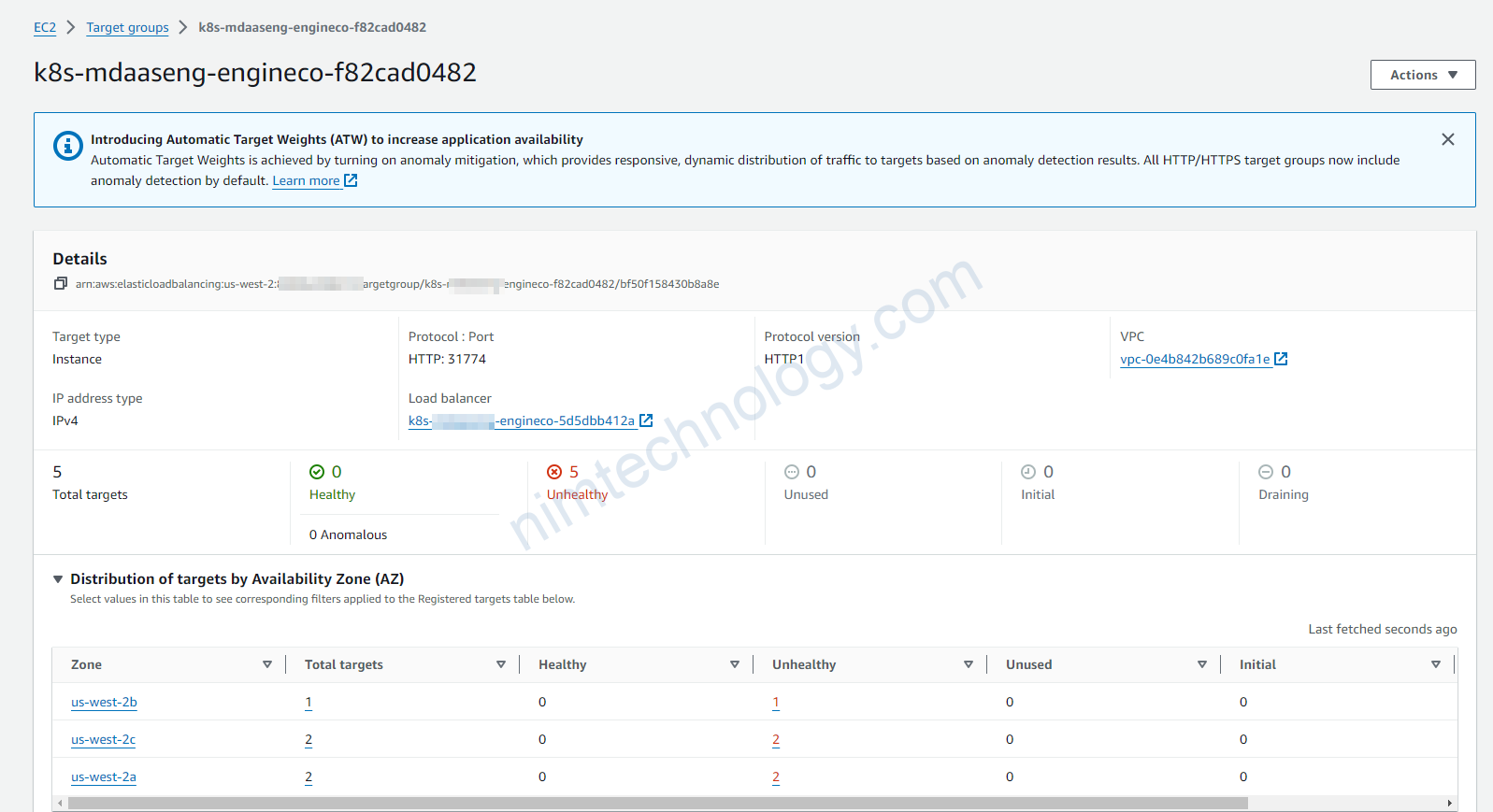

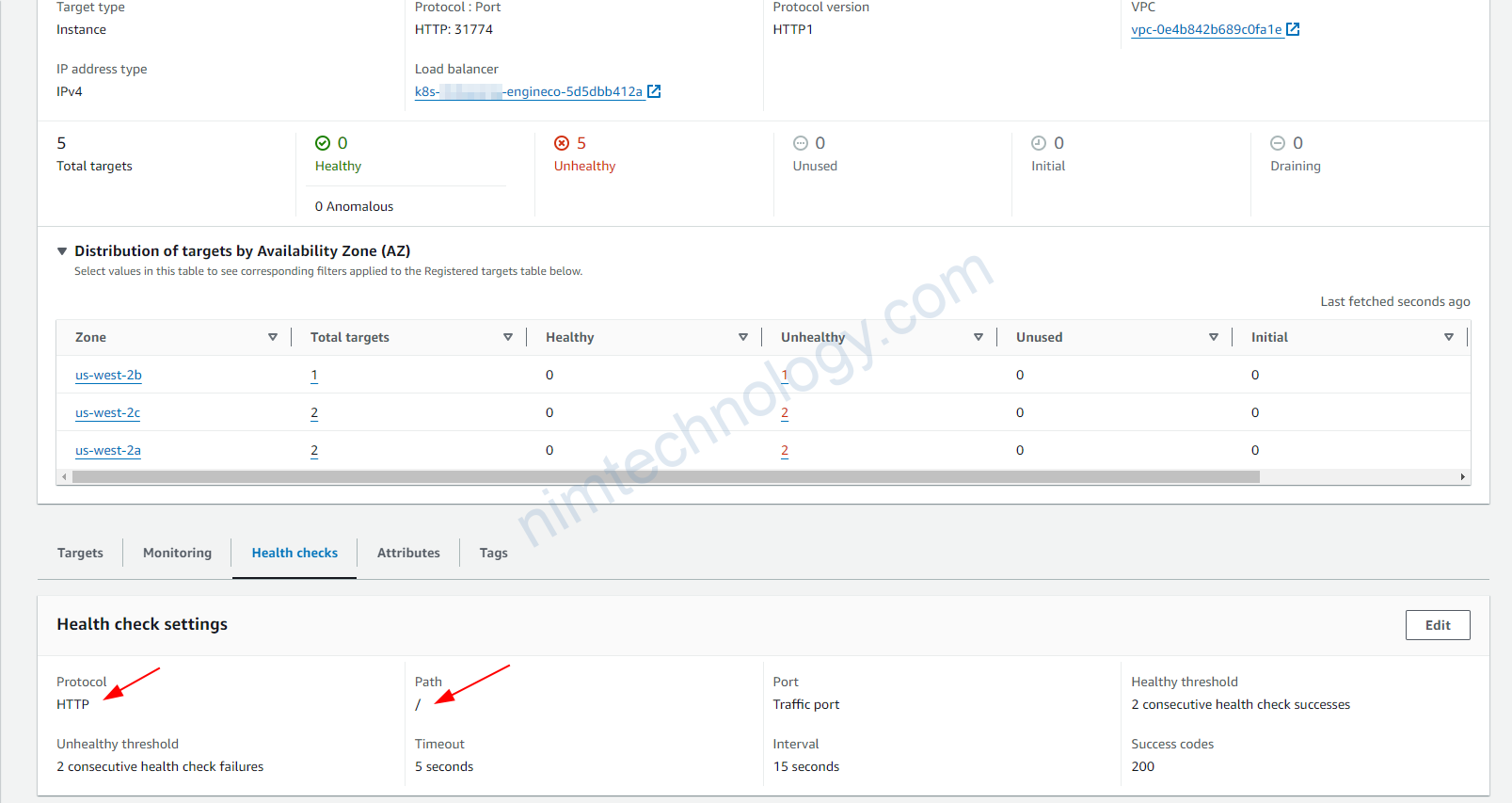

The health check of Target Group is Unhealthy

If the Target Group shows as unhealthy, as in the picture, check what the health check is actually probing.

Are the path and protocol correct?

You can also adjust the expected status codes.

See the health check annotations to build a suitable health check:

https://kubernetes-sigs.github.io/aws-load-balancer-controller/v2.1/guide/ingress/annotations/#health-check
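As an illustration, the annotations below point the health check at a dedicated path and accept a wider range of status codes. The path /healthz is hypothetical, and the code range is only an example; use whatever your application really returns.

```yaml
# Sketch: Ingress metadata fragment tuning the ALB health check.
metadata:
  annotations:
    alb.ingress.kubernetes.io/healthcheck-protocol: HTTP
    alb.ingress.kubernetes.io/healthcheck-port: traffic-port
    # Hypothetical path: must be served by the backend without auth or redirects to other hosts
    alb.ingress.kubernetes.io/healthcheck-path: /healthz
    # Example range: treats redirects as healthy too
    alb.ingress.kubernetes.io/success-codes: '200-399'
```

A frequent cause of unhealthy targets is an application that answers the default path with a redirect or 404 while the health check expects 200.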

How the AWS Load Balancer Controller finds subnets in Amazon EKS

https://repost.aws/knowledge-center/eks-load-balancer-controller-subnets

https://kubernetes-sigs.github.io/aws-load-balancer-controller/v2.5/deploy/subnet_discovery/
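In short, the controller auto-discovers subnets by tag: kubernetes.io/role/elb = 1 on public subnets for internet-facing load balancers, and kubernetes.io/role/internal-elb = 1 on private subnets for internal ones. When tagging is not an option, the subnets can be listed explicitly on the Ingress, as in this sketch (the subnet IDs are hypothetical placeholders):

```yaml
# Sketch: Ingress metadata fragment that bypasses subnet auto-discovery.
metadata:
  annotations:
    alb.ingress.kubernetes.io/scheme: internet-facing
    # Hypothetical IDs: list subnets in at least two Availability Zones
    alb.ingress.kubernetes.io/subnets: subnet-0123456789abcdef0, subnet-0fedcba9876543210
```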