Further reference links:

========> Let's get to it

1) Prepare the requirements before installing k8s via k0s.

Use the following script to enable SSH password login and change the root password on Ubuntu 20:

#!/bin/bash
# Enable ssh password authentication
echo "Enable ssh password authentication"
sed -i 's/^PasswordAuthentication no/PasswordAuthentication yes/' /etc/ssh/sshd_config
sed -i 's/.*PermitRootLogin.*/PermitRootLogin yes/' /etc/ssh/sshd_config
systemctl reload sshd

# Set Root password
echo "Set root password"
echo -e "admin\nadmin" | passwd root >/dev/null 2>&1
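The script edits the live /etc/ssh/sshd_config directly. As a minimal sketch, assuming you would rather try the edits on a copy first, the hypothetical helper `enable_root_ssh` below applies the same substitutions to whatever file path it is given. The function name and the extra handling of a commented-out `#PasswordAuthentication` line are my assumptions, not part of the original script.

```bash
#!/bin/bash
# Hypothetical helper: apply the sshd_config edits to the file passed as $1.
enable_root_ssh() {
  cfg="$1"
  # Turn on password authentication, whether the line is commented out or set to "no".
  sed -i -E 's/^#?[[:space:]]*PasswordAuthentication[[:space:]]+(yes|no).*/PasswordAuthentication yes/' "$cfg"
  # Same substitution as the original script: force PermitRootLogin to yes.
  sed -i 's/.*PermitRootLogin.*/PermitRootLogin yes/' "$cfg"
}

# Usage (as root), followed by the same reload as in the script:
#   enable_root_ssh /etc/ssh/sshd_config && systemctl reload sshd
```

Running it against a copy such as /tmp/sshd_config lets you diff the result before touching the real file.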

Generate an SSH key:

root@k8s-master:~# ssh-keygen -t rsa -b 2048 -f ~/.ssh/id_rsa_k0s

Generating public/private rsa key pair.

Enter passphrase (empty for no passphrase): <leave empty>

Enter same passphrase again: <leave empty>

Your identification has been saved in /root/.ssh/id_rsa_k0s

Your public key has been saved in /root/.ssh/id_rsa_k0s.pub

The key fingerprint is:

SHA256:HvatXNUWf5jcvvRVXDxIUG41ysVkGEPnGq3sIORPlYQ root@k8s-master

The key's randomart image is:

+---[RSA 2048]----+

| .=B=B |

| E=.%o.|

| . X =o|

| o +.+*=|

| So o += O|

| o o+.o. oo|

| . ..o. .o|

| . o . +|

| o ..|

+----[SHA256]-----+

Copy the public key to the worker nodes:

root@k8s-master:~# ssh-copy-id -i ~/.ssh/id_rsa_k0s.pub 192.168.101.41

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa_k0s.pub"

The authenticity of host '192.168.101.41 (192.168.101.41)' can't be established.

ECDSA key fingerprint is SHA256:nm6S4HLwHaZj1bkPAzTO04SDXMbUyQU/DRYxIcCGaK0.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@192.168.101.41's password: <root password>

Number of key(s) added: 1

Now try logging into the machine, with: "ssh '192.168.101.41'"

and check to make sure that only the key(s) you wanted were added.
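The copy step has to be repeated for every worker. A minimal sketch of doing that in a loop, assuming the three worker IPs used later in this post and the key generated above. The `run` and `copy_key_to_workers` helpers and the `DRY_RUN` switch are hypothetical names made up for this example.

```bash
#!/bin/bash
# Worker IPs and key path are the ones used in this post.
WORKERS="192.168.101.41 192.168.101.42 192.168.101.43"
KEY="$HOME/.ssh/id_rsa_k0s"

# Print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

# Copy the public key to every worker, then confirm that key-based login works.
copy_key_to_workers() {
  for host in $WORKERS; do
    run ssh-copy-id -i "$KEY.pub" "root@$host" || return 1
    # BatchMode=yes makes ssh fail instead of falling back to a password prompt.
    run ssh -i "$KEY" -o BatchMode=yes "root@$host" true || return 1
  done
}

# Usage: DRY_RUN=1 copy_key_to_workers   # preview the commands
#        copy_key_to_workers             # actually run them
```

With `DRY_RUN=1` nothing is sent over the network, so you can check the host list before typing any passwords.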

Use this command to SSH into the other servers and check that it works:

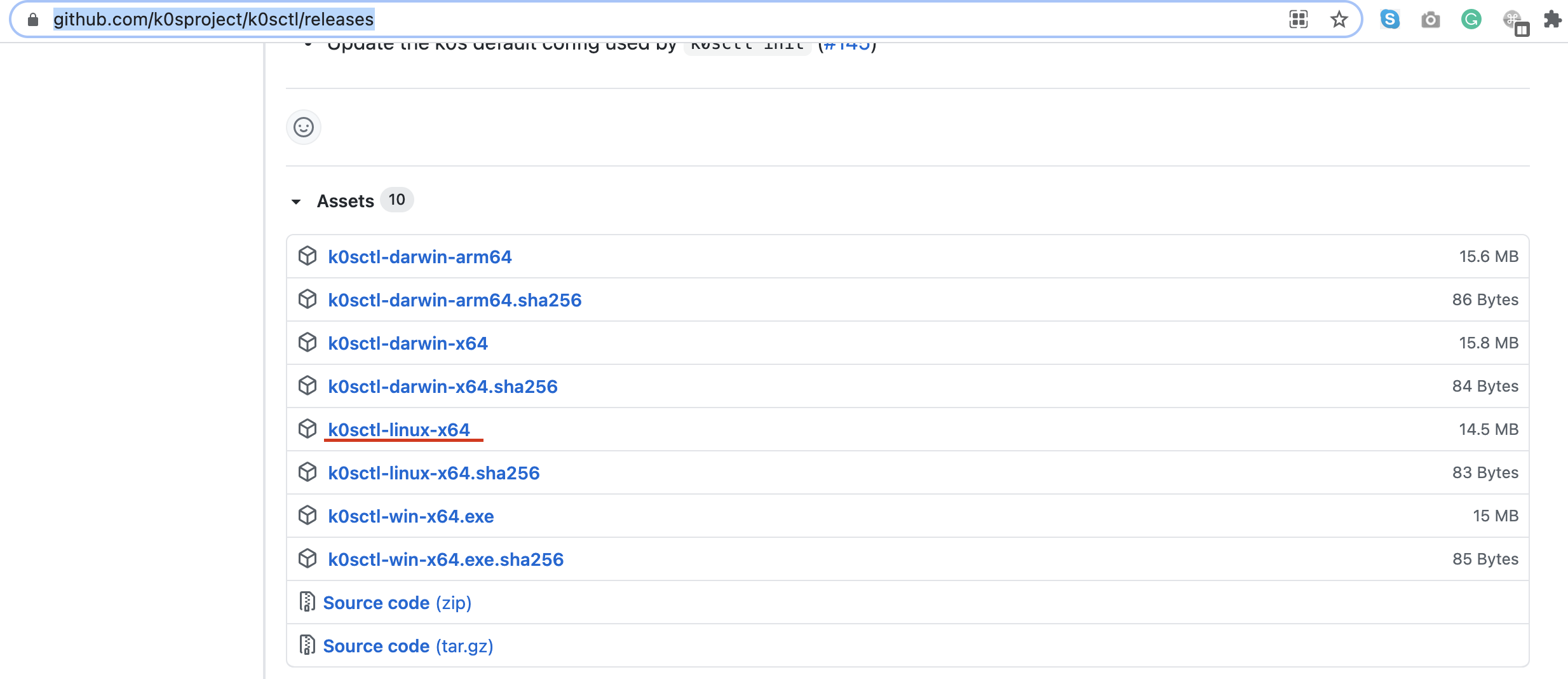

ssh 192.168.101.41 -i ~/.ssh/id_rsa_k0s

Go to this link to download k0sctl:

https://github.com/k0sproject/k0sctl/releases

wget https://github.com/k0sproject/k0sctl/releases/download/v0.9.0/k0sctl-linux-x64 -O k0sctl

root@k8s-master:~# ls

k0sctl snap

root@k8s-master:~# chmod +x k0sctl

root@k8s-master:~# mv k0sctl /usr/local/bin/

root@k8s-master:~# k0sctl version

version: v0.9.0

commit: 6d364ff
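The download, chmod and move steps above can be folded into one small helper. This is only a sketch: `k0sctl_url` and `install_k0sctl` are hypothetical names, and the `k0sctl-linux-<arch>` asset naming is taken from the wget command above, so check the releases page in case another release names its files differently.

```bash
#!/bin/bash
# Build the release asset URL for a given version (and optional arch, default x64).
k0sctl_url() {
  version="$1"
  arch="${2:-x64}"
  echo "https://github.com/k0sproject/k0sctl/releases/download/${version}/k0sctl-linux-${arch}"
}

# Download the binary and install it as an executable in /usr/local/bin (needs root).
install_k0sctl() {
  wget -q "$(k0sctl_url "$1" "${2:-x64}")" -O /tmp/k0sctl \
    && install -m 0755 /tmp/k0sctl /usr/local/bin/k0sctl
}

# Usage: install_k0sctl v0.9.0 && k0sctl version
```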

Command reference: https://docs.k0sproject.io/main/k0sctl-install/

root@k8s-master:~# k0sctl init > k0sctl.yaml

root@k8s-master:~# ls

k0sctl.yaml snap

1.1) Kubernetes CNI provider: Calico

Edit the k0sctl.yaml file:

apiVersion: k0sctl.k0sproject.io/v1beta1
kind: Cluster
metadata:
  name: k0s-cluster
spec:
  hosts:
  - ssh:
      address: 192.168.101.40
      user: root
      port: 22
      keyPath: /root/.ssh/id_rsa_k0s
    role: controller
    privateInterface: ens160
  - ssh:
      address: 192.168.101.41
      user: root
      port: 22
      keyPath: /root/.ssh/id_rsa_k0s
    role: worker
    privateInterface: ens160
  - ssh:
      address: 192.168.101.42
      user: root
      port: 22
      keyPath: /root/.ssh/id_rsa_k0s
    role: worker
    privateInterface: ens160
  - ssh:
      address: 192.168.101.43
      user: root
      port: 22
      keyPath: /root/.ssh/id_rsa_k0s
    role: worker
    privateInterface: ens160
  k0s:
    version: 1.21.3+k0s.0
    config:
      spec:
        api:
          extraArgs:
            service-node-port-range: 30000-32767
        network:
          podCIDR: 10.244.0.0/16
          serviceCIDR: 10.96.0.0/12
          provider: calico
          calico:
            mode: vxlan
            vxlanPort: 4789
            vxlanVNI: 4096
            mtu: 1450
            wireguard: false

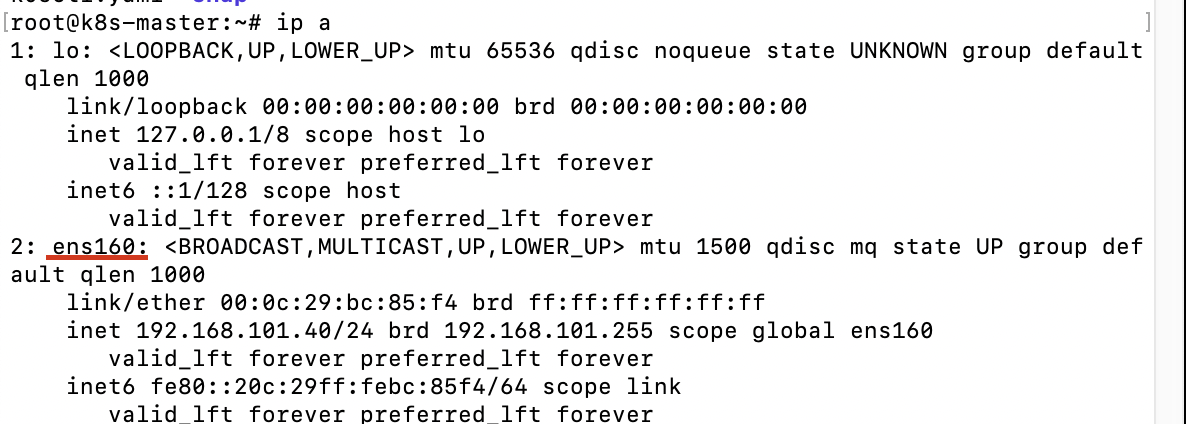

Pay attention to privateInterface: ens160. It has to be the name of the network interface on your own hosts.

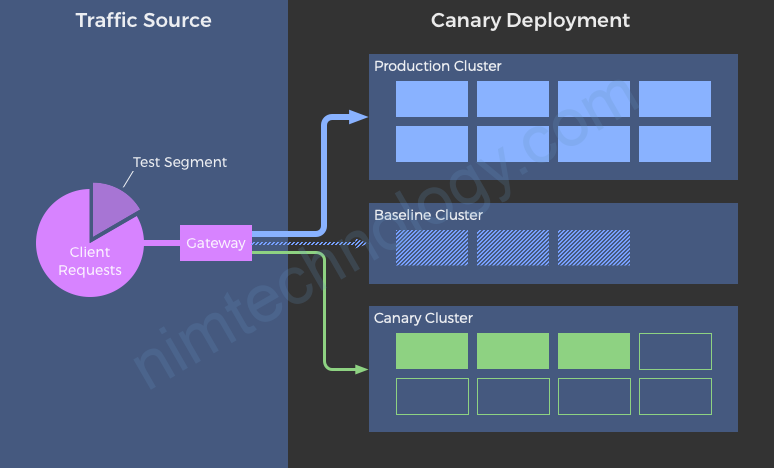
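Before applying the config, it helps to confirm that the interface name really exists on each host. A minimal sketch, assuming a Linux host where interfaces are listed under /sys/class/net. `iface_exists` and the `SYS_NET_DIR` override are hypothetical names used only for this example.

```bash
#!/bin/bash
# Succeed if network interface $1 exists.
# SYS_NET_DIR is a hypothetical override so the check can point at another directory.
iface_exists() {
  [ -e "${SYS_NET_DIR:-/sys/class/net}/$1" ]
}

# Usage on each host:
#   iface_exists ens160 || echo "ens160 not found; available: $(ls /sys/class/net)"
```

If the check fails, put the name printed by `ls /sys/class/net` (or `ip link`) into privateInterface for that host.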

1.2) Install Kubernetes with the Cilium CNI provider using k0s

(update Sun, Feb 25th, 2023)

I first came across Cilium through the link below.

https://cloud.google.com/kubernetes-engine/docs/how-to/dataplane-v2

A mystery friend of mine named Duy told me that it gets rid of kube-proxy.

So today I'm trying the install myself:

After a while of upgrades failing, I found this issue on the k0s tracker:

https://github.com/k0sproject/k0s/issues/2301

And here is the guide from this fellow:

https://github.com/xinity/k0s_cilium_playground

apiVersion: k0sctl.k0sproject.io/v1beta1
kind: Cluster
metadata:
  name: k0s-cilium-cluster-1
spec:
  hosts:
  - ssh:
      address: 192.168.101.40
      user: root
      port: 22
      keyPath: /root/.ssh/id_rsa_k0s
    role: controller
    privateInterface: ens160
    installFlags:
    - --enable-worker
    - --debug=true
  - ssh:
      address: 192.168.101.41
      user: root
      port: 22
      keyPath: /root/.ssh/id_rsa_k0s
    role: worker
    privateInterface: ens160
  - ssh:
      address: 192.168.101.42
      user: root
      port: 22
      keyPath: /root/.ssh/id_rsa_k0s
    role: worker
    privateInterface: ens160
  - ssh:
      address: 192.168.101.43
      user: root
      port: 22
      keyPath: /root/.ssh/id_rsa_k0s
    role: worker
    privateInterface: ens160
  k0s:
    version: v1.26.1+k0s.0
    dynamicConfig: false
    config:
      apiVersion: k0s.k0sproject.io/v1beta1
      kind: Cluster
      metadata:
        name: k0s-cilium-cluster-1
      spec:
        api:
          k0sApiPort: 9443
          port: 6443
          extraArgs:
            service-node-port-range: 30000-32767
        installConfig:
          users:
            etcdUser: etcd
            kineUser: kube-apiserver
            konnectivityUser: konnectivity-server
            kubeAPIserverUser: kube-apiserver
            kubeSchedulerUser: kube-scheduler
        konnectivity:
          adminPort: 8133
          agentPort: 8132
        network:
          kubeProxy:
            disabled: true
            mode: iptables
          kuberouter:
            autoMTU: true
            mtu: 0
            peerRouterASNs: ""
            peerRouterIPs: ""
          podCIDR: 10.244.0.0/16
          serviceCIDR: 10.96.0.0/12
          provider: custom
        podSecurityPolicy:
          defaultPolicy: 00-k0s-privileged
        storage:
          type: etcd
        telemetry:
          enabled: true
        extensions:
          helm:
            repositories:
            - name: cilium
              url: https://helm.cilium.io/
            charts:
            - name: cilium
              chartname: cilium/cilium
              version: "1.12.6"
              values: |
                k8sServiceHost: 192.168.101.40 #change it
                k8sServicePort: 6443
                cluster:
                  name: k0s-cilium-cluster-1
                  id: 1
                rollOutCiliumPods: true
                hubble:
                  enabled: true
                  metrics:
                    enabled:
                    - dns:query;ignoreAAAA
                    - drop
                    - tcp
                    - flow
                    - icmp
                    - http
                    port: 9965
                    serviceAnnotations: {}
                    serviceMonitor:
                      enabled: false
                      labels: {}
                      annotations: {}
                      metricRelabelings: ~
                  relay:
                    enabled: true
                    rollOutPods: true
                    prometheus:
                      enabled: true
                      port: 9966
                      serviceMonitor:
                        enabled: false
                        labels: {}
                        annotations: {}
                        interval: "10s"
                        metricRelabelings: ~
                  ui:
                    enabled: true
                    standalone:
                      enabled: false
                      tls:
                        certsVolume: {}
                    rollOutPods: true
                ipam:
                  mode: "cluster-pool"
                  operator:
                    clusterPoolIPv4PodCIDR: "10.244.0.0/16"
                    clusterPoolIPv4PodCIDRList: ["10.244.0.0/16"]
                    clusterPoolIPv4MaskSize: 24
                    clusterPoolIPv6PodCIDR: "fd00::/104"
                    clusterPoolIPv6PodCIDRList: []
                    clusterPoolIPv6MaskSize: 120
                prometheus:
                  enabled: true
                  port: 9962
                  serviceMonitor:
                    enabled: false
                    labels: {}
                    annotations: {}
                    metricRelabelings: ~
                operator:
                  enabled: true
                  rollOutPods: true
                  prometheus:
                    enabled: true
                    port: 9963
                    serviceMonitor:
                      enabled: false
                      labels: {}
                      annotations: {}
                      metricRelabelings: ~
                  skipCRDCreation: false
                  removeNodeTaints: true
                  setNodeNetworkStatus: true
                  unmanagedPodWatcher:
                    restart: true
                    intervalSeconds: 15
                kubeProxyReplacement: "strict"
                kubeProxyReplacementHealthzBindAddr: "0.0.0.0:10256"
              namespace: cilium
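In the config above, podCIDR in the k0s network section and clusterPoolIPv4PodCIDR in the Cilium values both use 10.244.0.0/16. Assuming you want the two to stay in sync when you change one of them (that requirement is my assumption, the config itself does not state it), a rough text-based check could look like this. `pod_cidrs` and `cidrs_consistent` are hypothetical helper names, and the check is a plain grep, not a YAML parser.

```bash
#!/bin/bash
# Print every distinct value found for podCIDR and clusterPoolIPv4PodCIDR in file $1.
pod_cidrs() {
  grep -E '^[[:space:]]*(podCIDR|clusterPoolIPv4PodCIDR):' "$1" \
    | sed -E 's/^[^:]*:[[:space:]]*//; s/"//g; s/[[:space:]]+$//' \
    | sort -u
}

# Succeed only if exactly one distinct CIDR value was found.
cidrs_consistent() {
  count=$(pod_cidrs "$1" | wc -l)
  [ "$((count))" -eq 1 ]
}

# Usage: cidrs_consistent k0sctl.yaml || { echo "pod CIDR mismatch:"; pod_cidrs k0sctl.yaml; }
```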

Reference links:

https://github.com/k0sproject/k0s/issues/988

https://docs.cilium.io/en/v1.9/gettingstarted/k8s-install-kubeadm/

http://www.wangqingzheng.com/yunweipai/91/40491.html

##Note: Currently, to install Cilium 1.12.0 on a new k8s cluster, start from v1.21.8+k0s.0

and then upgrade the k8s version step by step!

2) K0S actions

2.1) Apply config

root@k8s-master:~# k0sctl apply --config k0sctl.yaml

⠀⣿⣿⡇⠀⠀⢀⣴⣾⣿⠟⠁⢸⣿⣿⣿⣿⣿⣿⣿⡿⠛⠁⠀⢸⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⠀█████████ █████████ ███

⠀⣿⣿⡇⣠⣶⣿⡿⠋⠀⠀⠀⢸⣿⡇⠀⠀⠀⣠⠀⠀⢀⣠⡆⢸⣿⣿⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀███ ███ ███

⠀⣿⣿⣿⣿⣟⠋⠀⠀⠀⠀⠀⢸⣿⡇⠀⢰⣾⣿⠀⠀⣿⣿⡇⢸⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⠀███ ███ ███

⠀⣿⣿⡏⠻⣿⣷⣤⡀⠀⠀⠀⠸⠛⠁⠀⠸⠋⠁⠀⠀⣿⣿⡇⠈⠉⠉⠉⠉⠉⠉⠉⠉⢹⣿⣿⠀███ ███ ███

⠀⣿⣿⡇⠀⠀⠙⢿⣿⣦⣀⠀⠀⠀⣠⣶⣶⣶⣶⣶⣶⣿⣿⡇⢰⣶⣶⣶⣶⣶⣶⣶⣶⣾⣿⣿⠀█████████ ███ ██████████

k0sctl v0.9.0 Copyright 2021, k0sctl authors.

Anonymized telemetry of usage will be sent to the authors.

By continuing to use k0sctl you agree to these terms:

https://k0sproject.io/licenses/eula

INFO ==> Running phase: Connect to hosts

INFO [ssh] 192.168.101.40:22: connected

INFO [ssh] 192.168.101.41:22: connected

INFO [ssh] 192.168.101.41:22: connected

INFO [ssh] 192.168.101.41:22: connected

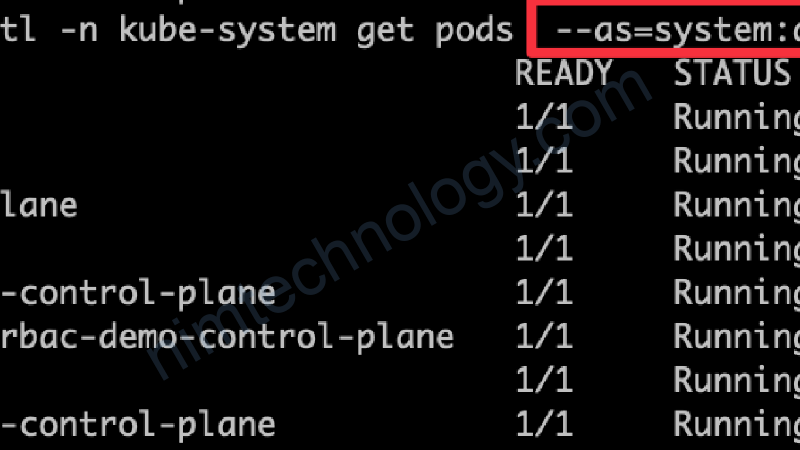

2.2) kubectl

Wait a little while for the run to finish, then:

k0sctl kubeconfig --config k0sctl.yaml > ~/.kube/config
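The redirect above fails if ~/.kube does not exist yet, and the resulting file holds cluster admin credentials. A minimal sketch of a safer variant, where `save_kubeconfig` is a hypothetical helper that reads the kubeconfig from stdin:

```bash
#!/bin/bash
# Read a kubeconfig from stdin and store it at $1 (default ~/.kube/config),
# creating the directory first and keeping the file readable by the owner only.
save_kubeconfig() {
  dest="${1:-$HOME/.kube/config}"
  mkdir -p "$(dirname "$dest")" || return 1
  ( umask 077; cat > "$dest" ) && chmod 600 "$dest"
}

# Usage: k0sctl kubeconfig --config k0sctl.yaml | save_kubeconfig
```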

Now install kubectl:

sudo apt-get update

sudo apt-get install -y apt-transport-https ca-certificates curl

sudo curl -fsSLo /usr/share/keyrings/kubernetes-archive-keyring.gpg https://packages.cloud.google.com/apt/doc/apt-key.gpg

echo "deb [signed-by=/usr/share/keyrings/kubernetes-archive-keyring.gpg] https://apt.kubernetes.io/ kubernetes-xenial main" | sudo tee /etc/apt/sources.list.d/kubernetes.list

sudo apt-get update

sudo apt-get install -y kubectl

kubectl get node
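Right after the install, some nodes may still show NotReady for a while. As a rough sketch, the hypothetical helpers below count the nodes whose STATUS column is not exactly "Ready" and poll until that count reaches zero. The 30 x 10 second polling window is an arbitrary choice of mine.

```bash
#!/bin/bash
# Read `kubectl get nodes --no-headers` output on stdin and print how many
# nodes have a STATUS column (2nd field) other than exactly "Ready".
count_not_ready() {
  awk '$2 != "Ready" { n++ } END { print n + 0 }'
}

# Poll until every node reports Ready; give up after about 5 minutes.
wait_nodes_ready() {
  for i in $(seq 1 30); do
    out=$(kubectl get nodes --no-headers 2>/dev/null) || out=""
    # Require non-empty output so a failing kubectl is not mistaken for success.
    if [ -n "$out" ] && [ "$(printf '%s\n' "$out" | count_not_ready)" -eq 0 ]; then
      return 0
    fi
    sleep 10
  done
  return 1
}

# Usage: wait_nodes_ready && kubectl get node
```

kubectl also has a built-in alternative: `kubectl wait --for=condition=Ready nodes --all --timeout=300s`.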

2.3) Delete K8s

If you get tired of it, you can tear the k8s cluster down with this command:

k0sctl reset --config k0sctl.yaml

2.4) Where are the k8s configs?

root@k8s-master-cluster2:/etc/k0s# ls /var/lib/k0s/

bin/ etcd/ konnectivity.conf manifests/ pki/

root@k8s-master-cluster2:/etc/k0s# ls /var/lib/k0s/pki/

admin.conf ca.key front-proxy-client.key scheduler.conf

admin.crt ccm.conf k0s-api.crt scheduler.crt

admin.key ccm.crt k0s-api.key scheduler.key

apiserver-etcd-client.crt ccm.key konnectivity.conf server.crt

apiserver-etcd-client.key etcd konnectivity.crt server.key

apiserver-kubelet-client.crt front-proxy-ca.crt konnectivity.key

apiserver-kubelet-client.key front-proxy-ca.key sa.key

ca.crt front-proxy-client.crt sa.pub
