If you use kafka-connect, you will need to add quite a lot of plugins to the kafka-connect server.

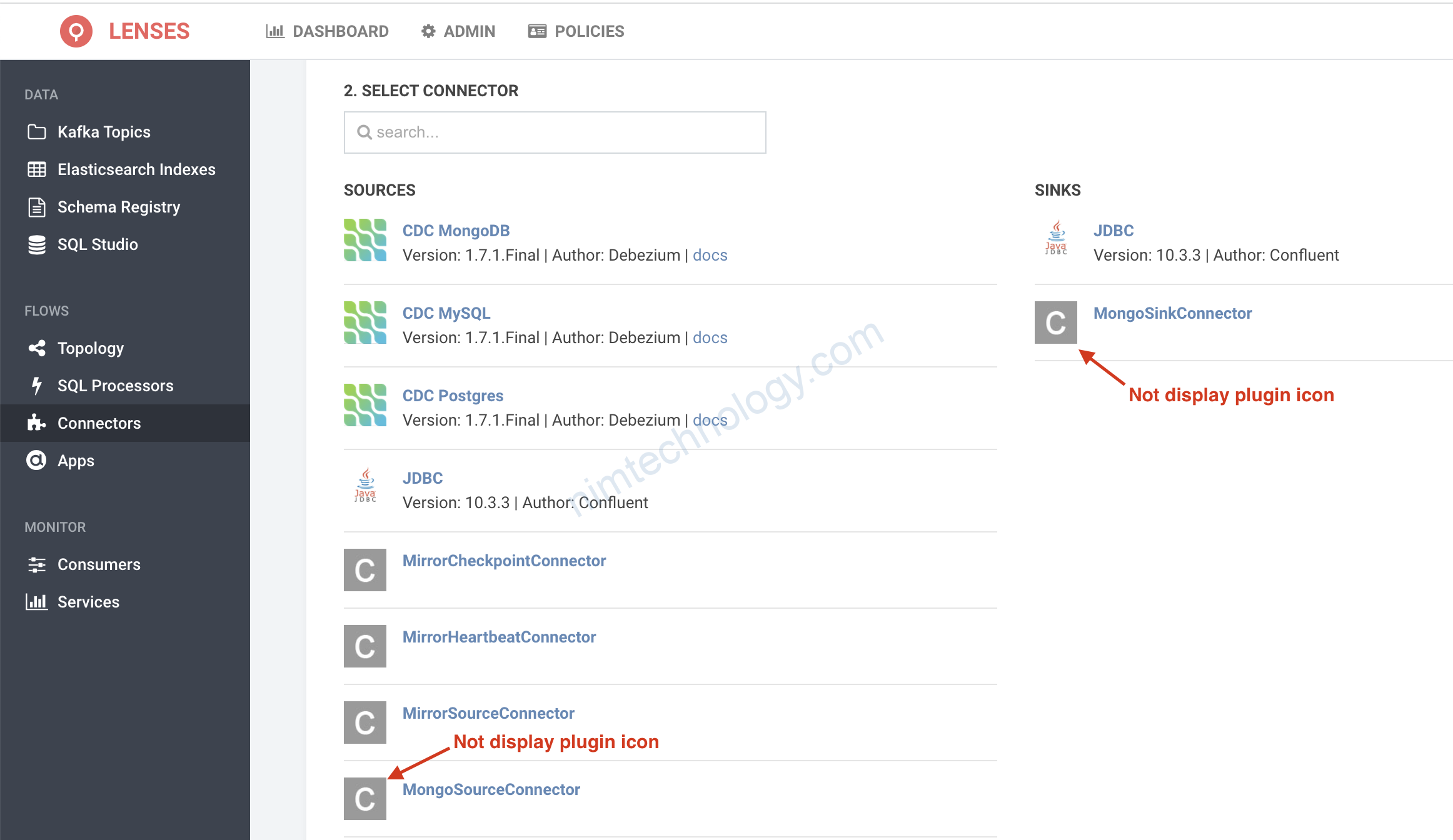

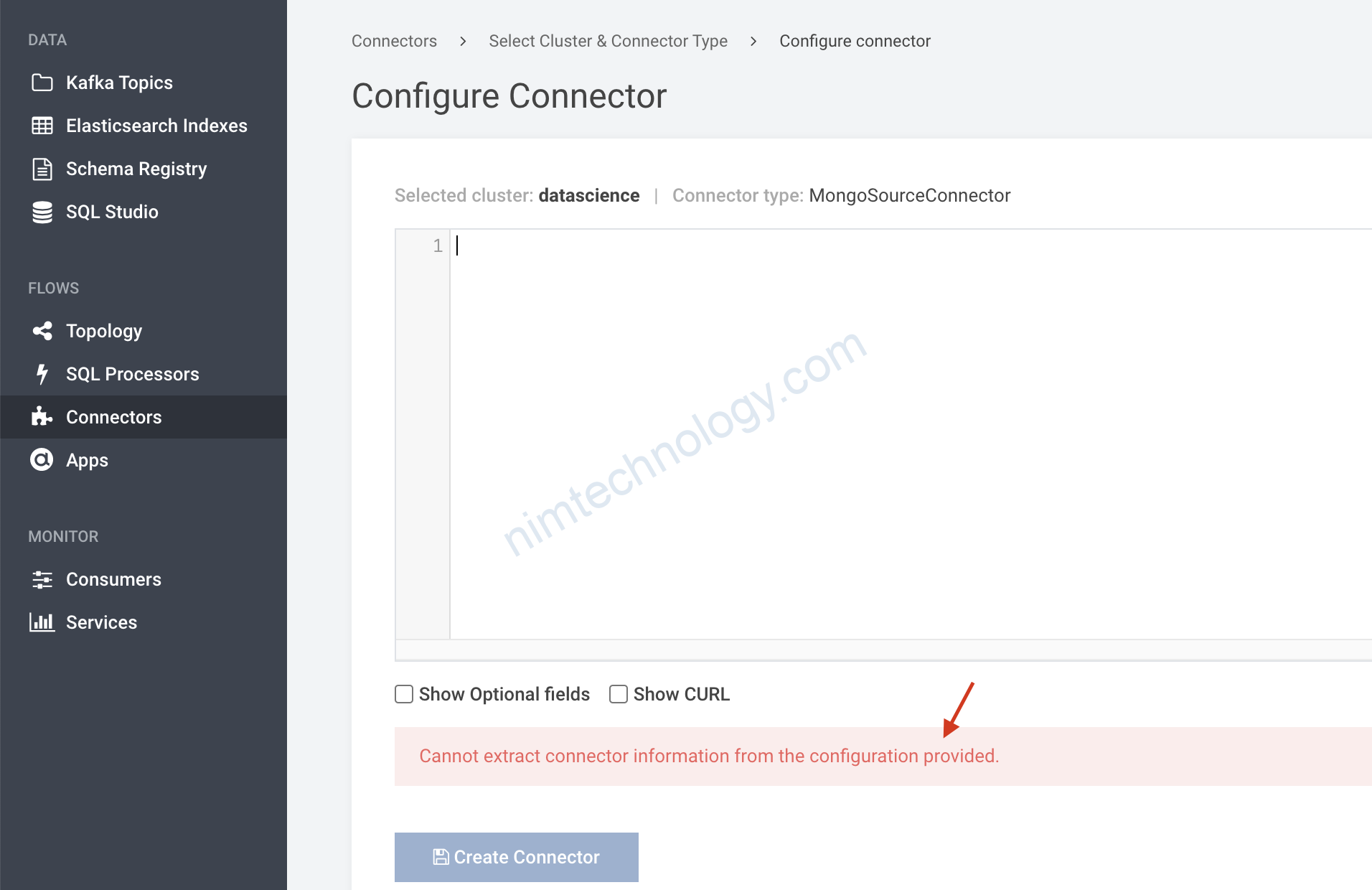

I ran into a problem: Lenses cannot validate a few of these plugins.

For example, this plugin: MongoDB Connector (Source and Sink)

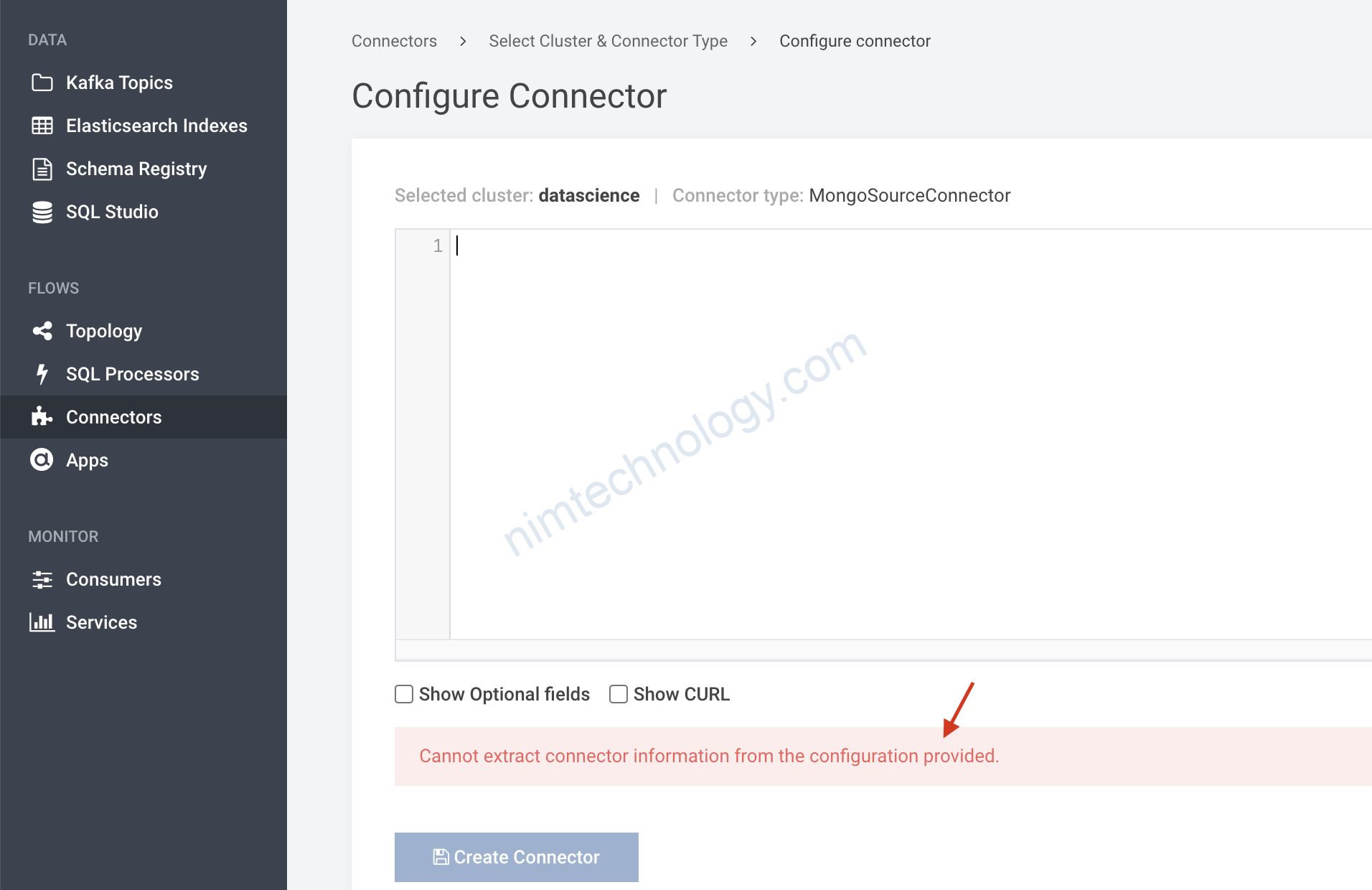

When I create a connector, this is what happens:

Lenses kafka

A friend pointed me to this page:

https://docs.lenses.io/5.0/configuration/static/options/topology/#configure-lenses-to-load-a-custom-connector

We need to configure Lenses to load a custom connector.

If you run docker compose, it looks like this:

    version: '3'

    services:
      lenses:
        image: lensesio/lenses:latest
        container_name: lenses
        ...
        environment:
          ...
          LENSES_CONNECTORS_INFO: |
            [
              {
                class.name = "com.splunk.kafka.connect.SplunkSinkConnector"
                name = "Splunk Sink",
                instance = "splunk.hec.uri"
                sink = true,
                extractor.class = "io.lenses.config.kafka.connect.SimpleTopicsExtractor"
                icon = "splunk.png",
                description = "Stores Kafka data in Splunk"
                docs = "https://github.com/splunk/kafka-connect-splunk",
                author = "Splunk"
              },
              {
                class.name = "io.debezium.connector.sqlserver.SqlServerConnector"
                name = "CDC MySQL"
                instance = "database.hostname"
                sink = false,
                property = "database.history.kafka.topic"
                extractor.class = "io.lenses.config.kafka.connect.SimpleTopicsExtractor"
                icon = "debezium.png"
                description = "CDC data from RDBMS into Kafka"
                docs = "//debezium.io/docs/connectors/mysql/",
                author = "Debezium"
              }
            ]
          ...

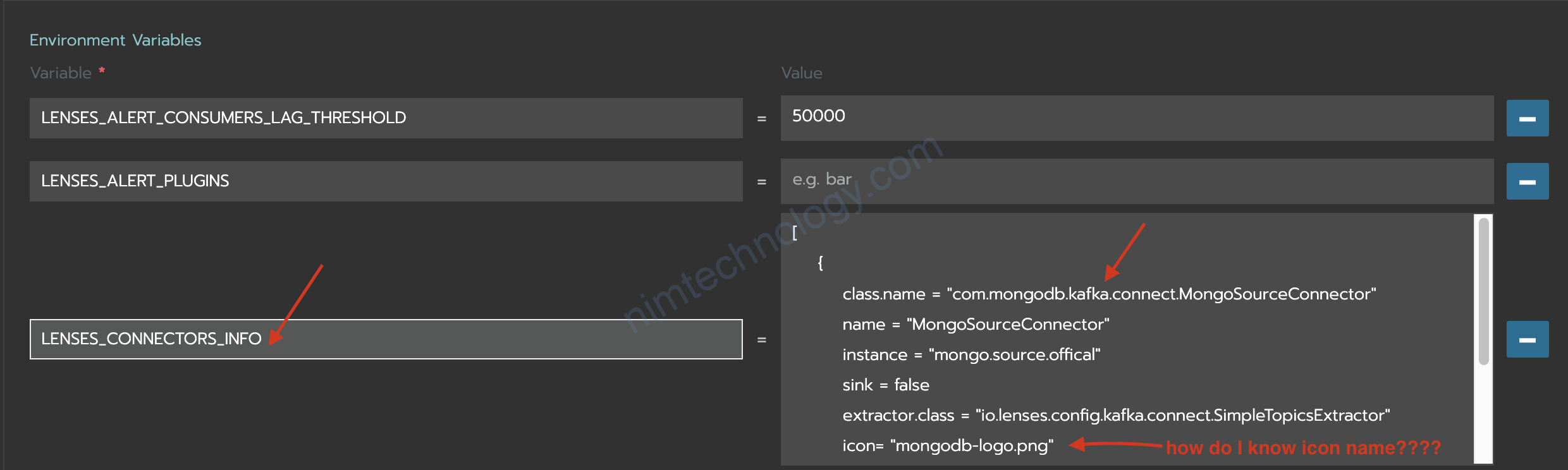

For the MongoDB source connector, this is the `LENSES_CONNECTORS_INFO` value I used:

    [
      {
        class.name = "com.mongodb.kafka.connect.MongoSourceConnector"
        name = "MongoSourceConnector"
        instance = "mongo.source.offical"
        sink = false
        extractor.class = "io.lenses.config.kafka.connect.SimpleTopicsExtractor"
        icon = "mongodb.png"
        description = "Mongo Offical source connector"
        author = "Mongodb team"
        property = "topic"
      }
    ]
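Since the MongoDB plugin ships both a source and a sink, the sink could probably be registered in the same list. The fragment below is only a sketch, not something I have verified in Lenses. The sink entry mirrors the Splunk example from the docs. Its `name`, `description`, and the choice of `connection.uri` for `instance` are my assumptions. The source entry is copied from my config.

```yaml
version: '3'

services:
  lenses:
    image: lensesio/lenses:latest
    container_name: lenses
    environment:
      # Assumption: the second entry (MongoSinkConnector) is a hypothetical
      # sink registration modelled on the Splunk example; "connection.uri"
      # is assumed to be a suitable property for `instance`.
      LENSES_CONNECTORS_INFO: |
        [
          {
            class.name = "com.mongodb.kafka.connect.MongoSourceConnector"
            name = "MongoSourceConnector"
            instance = "mongo.source.offical"
            sink = false
            extractor.class = "io.lenses.config.kafka.connect.SimpleTopicsExtractor"
            icon = "mongodb.png"
            description = "Mongo Offical source connector"
            author = "Mongodb team"
            property = "topic"
          },
          {
            class.name = "com.mongodb.kafka.connect.MongoSinkConnector"
            name = "MongoSinkConnector"
            instance = "connection.uri"
            sink = true
            extractor.class = "io.lenses.config.kafka.connect.SimpleTopicsExtractor"
            icon = "mongodb.png"
            description = "Mongo official sink connector"
            author = "Mongodb team"
          }
        ]
```

If Lenses still fails to validate the sink after this, the `instance` value is the first thing I would double-check.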

For a deployment on Kubernetes, it works exactly the same way.

I use Rancher, so it's really easy.

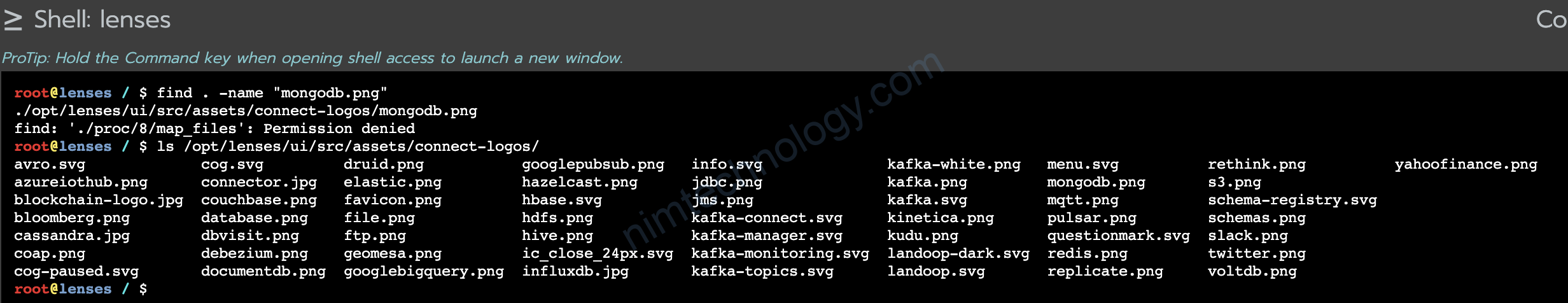
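If you prefer a manifest over the Rancher UI, here is a rough sketch of how the same variable could look in a Kubernetes Deployment. The Deployment name, labels, and replica count are placeholders I made up for illustration. Only the image and the `LENSES_CONNECTORS_INFO` value come from my setup.

```yaml
# Hypothetical manifest: metadata, labels and replicas are assumptions.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: lenses
spec:
  replicas: 1
  selector:
    matchLabels:
      app: lenses
  template:
    metadata:
      labels:
        app: lenses
    spec:
      containers:
        - name: lenses
          image: lensesio/lenses:latest
          env:
            # Same variable as in docker compose, passed as a multi-line string.
            - name: LENSES_CONNECTORS_INFO
              value: |
                [
                  {
                    class.name = "com.mongodb.kafka.connect.MongoSourceConnector"
                    name = "MongoSourceConnector"
                    instance = "mongo.source.offical"
                    sink = false
                    extractor.class = "io.lenses.config.kafka.connect.SimpleTopicsExtractor"
                    icon = "mongodb.png"
                    description = "Mongo Offical source connector"
                    author = "Mongodb team"
                    property = "topic"
                  }
                ]
```

The `|` block scalar keeps the line breaks, so the container receives the list in the same shape as with docker compose.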

Many of you will ask how to find out the name of the icon file.

For now, you can find them where the image shows.