1) Docker

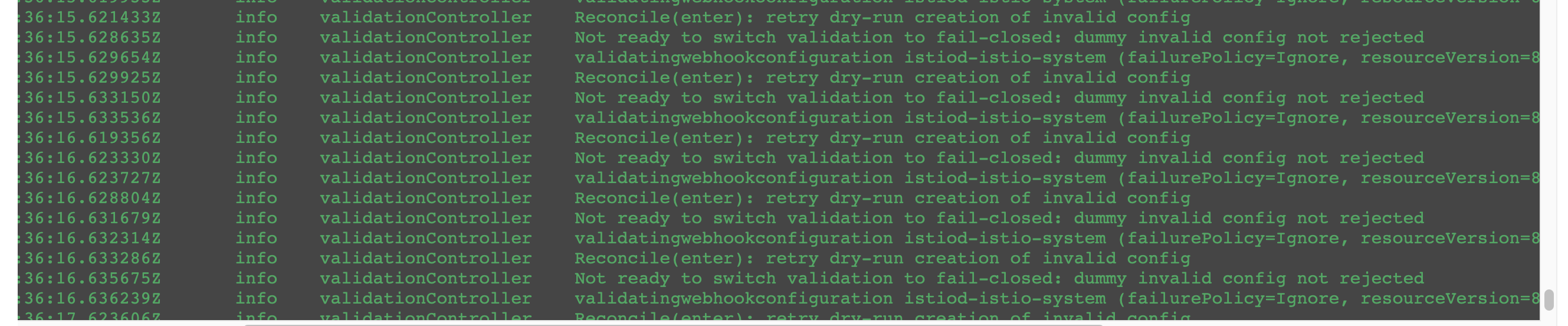

Show the last 10 log lines of a container and keep following new output:

docker logs -f --tail 10 container_name

Docker build:

docker build -t ps:version1 --force-rm -f Dockerfile .

kubectl -n argocd port-forward deployment.apps/argocd-server 8080:8080

For builds that pull from a private Git repository:

GITHUB_TOKEN=abcsldfnjsdbfasd

docker build -t ps:version1 --force-rm --build-arg GITHUB_TOKEN=${GITHUB_TOKEN} -f Dockerfile.ci .

In the Dockerfile, the RUN step that fetches from the private repository can then fail with an error like this:

Host key verification failed.
fatal: Could not read from remote repository.

Please make sure you have the correct access rights
and the repository exists.
error building image: error building stage: failed to execute command: waiting for process to exit: exit status 1

If you hit the error above, add the lines below to the Dockerfile, before the step that fetches from GitHub. The ARG line makes the value passed with --build-arg visible to RUN; skip it if the Dockerfile already declares it:

ARG GITHUB_TOKEN
RUN git config --global url."https://${GITHUB_TOKEN}:x-oauth-basic@github.com/".insteadOf "https://github.com/"

Run a container and open a shell in it immediately:

docker run -it --entrypoint /bin/bash mrnim94/terraform-kubectl:0.0.5

Copying Files from Docker Container to Host

docker cp container_name:/path/in/container /path/on/host

# Examples:

# Copy a file
docker cp activemq-artemis:/var/lib/artemis/data/myfile.txt ./

# Copy a directory
docker cp activemq-artemis:/var/lib/artemis/etc/ ./artemis-config/

1.1) Docker vs VM

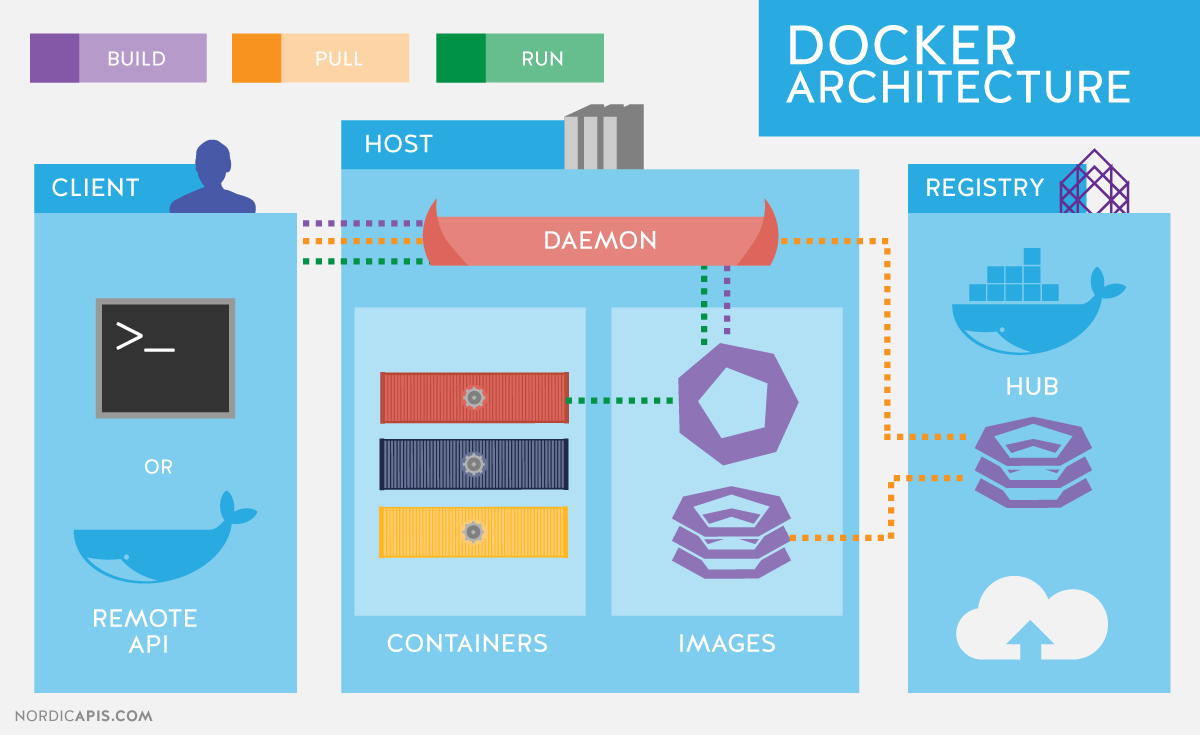

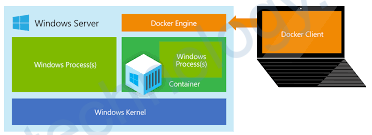

Docker containers and virtual machines (VMs) are two different approaches to virtualization.

A virtual machine is a software-based emulation of a physical machine that allows multiple operating systems to run on a single physical machine. Each VM includes a virtual CPU, memory, storage, and network interface, and runs its own full guest operating system on top of a hypervisor.

Docker, on the other hand, is a software platform that allows developers to create, deploy, and run applications in a containerized environment. Containers share the host's operating system kernel but package their own libraries and dependencies, which makes them lighter and more portable than traditional virtual machines.

In summary, a virtual machine virtualizes the hardware and runs an entire operating system, while a Docker container includes only the application and its dependencies and relies on the host kernel, making it more lightweight and efficient. Both technologies have their own strengths and use cases, and the choice between them depends on the specific requirements of the application and its deployment environment.

In simpler terms:

Virtual machines (VMs) are like fully functioning computers that run inside your computer. Each one runs a complete operating system on its own virtual hardware, allowing you to run multiple operating systems on a single physical machine.

Docker is a tool that allows developers to create “containers” for their applications. Containers are like little packages that contain all the necessary code and dependencies for the application to run. These containers are much smaller and more efficient than virtual machines, as they share the kernel of the host operating system instead of booting their own.

So, the main difference is that VMs are like full-fledged computers inside your computer, while Docker containers are like little packages that contain only the necessary code to run the application.

1.2) Optimizing Docker image size through the Dockerfile

The following Dockerfile practices help keep a Docker image small:

- Use a smaller base image: Start with a smaller base image like Alpine or BusyBox instead of a full-blown OS like Ubuntu or CentOS.

- Combine commands: Chain related commands with && in a single RUN instruction. Each RUN instruction creates a layer, and files deleted in a later layer still take up space in the earlier one, so installing and cleaning up should happen in the same RUN.

- Clean up in the same command: Remove temporary files in the RUN instruction that created them. For example, end an apt-get update && apt-get install command with “&& rm -rf /var/lib/apt/lists/*”.

- Use a .dockerignore file: Create a .dockerignore file to exclude unnecessary files from the Docker build context. This speeds up the build and keeps those files out of the image when the Dockerfile uses a broad copy such as “COPY . .”.

- Minimize package installations: Only install packages that are required by your application. Avoid installing unnecessary packages or dependencies that are not needed.

- Use a multi-stage build: Use a multi-stage build to separate the build environment from the runtime environment. This can significantly reduce the size of the final image.

By following these best practices, you can create smaller and more efficient Docker images, which can reduce the overall build and deployment time and save storage space.
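
As a hedged illustration of the multi-stage practice, the Dockerfile below sketches a two-stage build. It assumes a hypothetical Go program whose main package sits at the root of the build context; the image tags, the paths, and the binary name app are placeholders chosen for the example, not something taken from these notes.

```dockerfile
# Build stage: carries the full Go toolchain and the source code.
FROM golang:1.22-alpine AS build
WORKDIR /src
COPY . .
# Assumption: the build context is a Go module with its main package at the root.
RUN CGO_ENABLED=0 go build -o /out/app .

# Runtime stage: starts from a small base and receives only the compiled binary.
FROM alpine:3.20
COPY --from=build /out/app /usr/local/bin/app
ENTRYPOINT ["/usr/local/bin/app"]
```

Only the last stage ends up in the tagged image, so the compiler and the source tree stay behind in the build stage. A .dockerignore that lists entries such as .git keeps them out of the COPY . . step as well.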

More on practice 2 (combining commands):

Practice 2 is about minimizing the number of layers in your Docker image. Instructions that change the filesystem (RUN, COPY, and ADD) each add a layer to the final image, while the other instructions only add metadata. A file created in one layer and deleted in a later one still counts toward the image size, so combining related commands into a single RUN instruction reduces both the number of layers and the overall size of the image.

For example, consider the following Dockerfile:

# Pinned: the php7.2 packages below ship with Ubuntu 18.04, not with ubuntu:latest
FROM ubuntu:18.04

RUN apt-get update

RUN apt-get install -y \
    apache2 \
    php7.2 \
    libapache2-mod-php7.2 \
    php7.2-mysql \
    && apt-get clean

COPY . /var/www/html/

CMD ["apache2ctl", "-D", "FOREGROUND"]

In this Dockerfile, the package list is updated in one RUN instruction, and the packages are installed and the apt cache is cleaned in a second one. Each RUN instruction creates a new layer in the final image, and the package lists downloaded by apt-get update stay in the first layer.

To reduce the number of layers, we can combine these instructions into a single RUN command like this:

# Pinned: the php7.2 packages below ship with Ubuntu 18.04, not with ubuntu:latest
FROM ubuntu:18.04

RUN apt-get update && \
    apt-get install -y \
    apache2 \
    php7.2 \
    libapache2-mod-php7.2 \
    php7.2-mysql && \
    apt-get clean && \
    rm -rf /var/lib/apt/lists/*

COPY . /var/www/html/

CMD ["apache2ctl", "-D", "FOREGROUND"]

In this revised Dockerfile, the two RUN instructions are combined into one, and the package lists are deleted in the same layer that downloaded them. This reduces the number of layers and results in a smaller final image.

Overall, Practice 2 is about finding ways to minimize the number of layers in your Docker image by combining commands wherever possible. This can help reduce the size of the final image and make it more efficient.
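
To make the layer count concrete without building anything, here is a small sketch that counts the layer-creating instructions (RUN, COPY, ADD) in two Dockerfiles. The helper name count_layer_instructions and the two sample files are made up for this illustration, and the samples are shortened versions of the Dockerfiles above. It is only a rough text count, not a replacement for inspecting a real image.

```shell
#!/bin/sh
# Count instructions that add a filesystem layer (RUN, COPY, ADD).
# Continuation lines are skipped because they do not start with an instruction.
count_layer_instructions() {
    grep -Eci '^[[:space:]]*(RUN|COPY|ADD)[[:space:]]' "$1"
}

workdir=$(mktemp -d)

# Sample 1: update and install in separate RUN instructions.
cat > "$workdir/Dockerfile.separate" <<'EOF'
FROM ubuntu:18.04
RUN apt-get update
RUN apt-get install -y apache2 \
    && apt-get clean
COPY . /var/www/html/
CMD ["apache2ctl", "-D", "FOREGROUND"]
EOF

# Sample 2: everything chained into one RUN instruction.
cat > "$workdir/Dockerfile.combined" <<'EOF'
FROM ubuntu:18.04
RUN apt-get update && \
    apt-get install -y apache2 && \
    apt-get clean && \
    rm -rf /var/lib/apt/lists/*
COPY . /var/www/html/
CMD ["apache2ctl", "-D", "FOREGROUND"]
EOF

separate=$(count_layer_instructions "$workdir/Dockerfile.separate")
combined=$(count_layer_instructions "$workdir/Dockerfile.combined")
echo "separate: $separate, combined: $combined"

rm -rf "$workdir"
```

On a machine with Docker installed, docker history followed by an image name lists the actual layers of a built image together with their sizes, which is the more reliable check.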

2) Docker-compose

Run docker-compose with a specific compose file:

docker-compose -f docker-compose.test.yml up

3) Docker images

docker save --output myimage.tar myimage_id

>>>> Save the image to disk

docker load -i myimage.tar

>>>> Load the image from disk into Docker

docker tag image_id imagename:version

>>>> Give the image a name and tag (an image saved by ID loads back without one)