1) Backend state

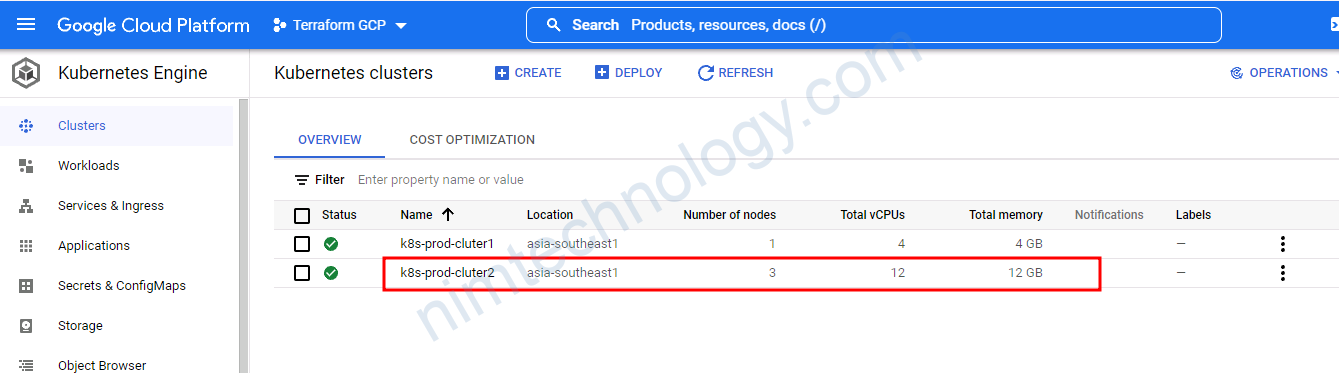

In this post I build multiple k8s clusters on GCP.

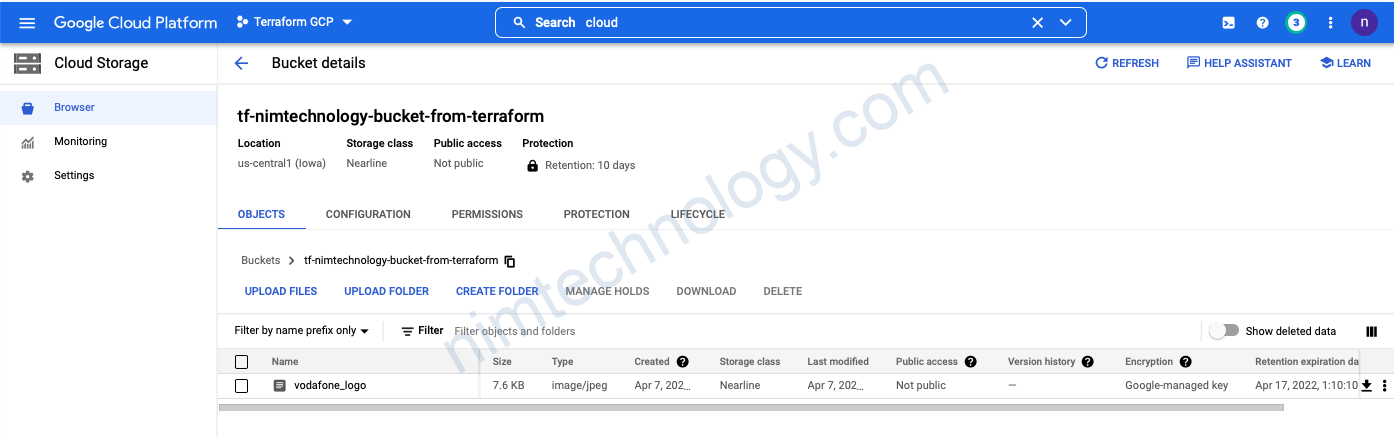

To match a real-world setup, we first need to create a backend for Terraform on GCS.

gcs-terraform-state/main.tf

>>>>>

resource "google_storage_bucket" "GCS1" {

name = "nimtechnology-infra-tf-state"

storage_class = "NEARLINE"

location = "US-CENTRAL1"

labels = {

"env" = "tf_env"

"dep" = "complience"

}

}

gcs-terraform-state/provider.tf

>>>>>

terraform {

required_providers {

google = {

source = "hashicorp/google"

version = "3.85.0"

}

}

}

provider "google" {

# Configuration options

project = "terraform-gcp-346216"

region = "us-central1"

zone = "us-central1-a"

credentials = "keys.json"

}

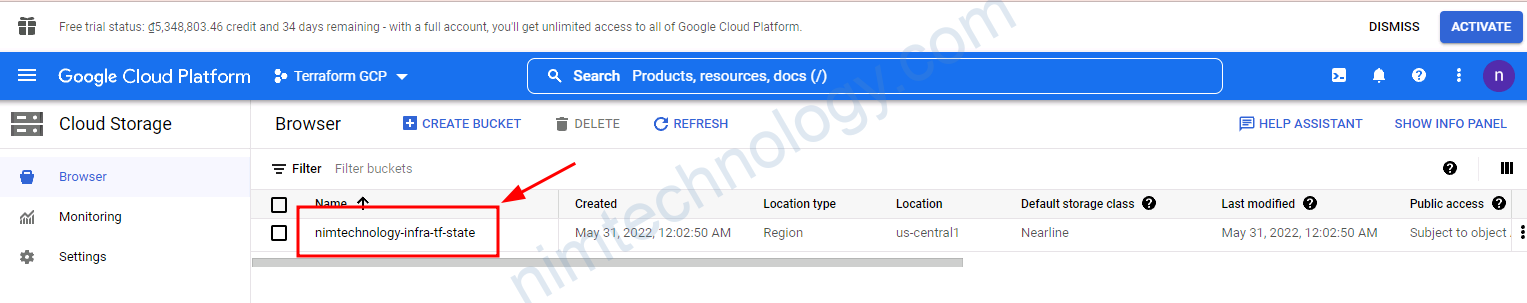

After you run terraform apply, the bucket is created on GCS.
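Because this bucket will hold Terraform state, it may be worth turning on object versioning so an earlier state file can be recovered after a bad write. A minimal sketch, assuming the same bucket as above; the `versioning` block and `uniform_bucket_level_access` are additions that are not part of the original config, and the labels are left out for brevity:

```hcl
resource "google_storage_bucket" "GCS1" {
  name          = "nimtechnology-infra-tf-state"
  storage_class = "NEARLINE"
  location      = "US-CENTRAL1"

  # Assumption: keep older generations of the state object for recovery.
  versioning {
    enabled = true
  }

  # Assumption: control access through IAM only, not per-object ACLs.
  uniform_bucket_level_access = true
}
```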

Now I create a folder named cluster1 and start the project.

Remote state

First is the file

provider.tf

>>>>>>>>

terraform {

required_providers {

google = {

source = "hashicorp/google"

version = "3.85.0"

}

}

backend "gcs" {

bucket = "nimtechnology-infra-tf-state"

prefix = "gcp/cluster1"

}

}

provider "google" {

# Configuration options

project = "terraform-gcp-346216"

region = "us-central1"

zone = "us-central1-a"

credentials = "keys.json"

}

One part here deserves attention:

backend "gcs": this is where the backend for Terraform is declared.

To understand Terraform backends better, see my description here:

https://nimtechnology.com/2022/05/03/terraform-terraform-beginner-lesson-4-remote-state/

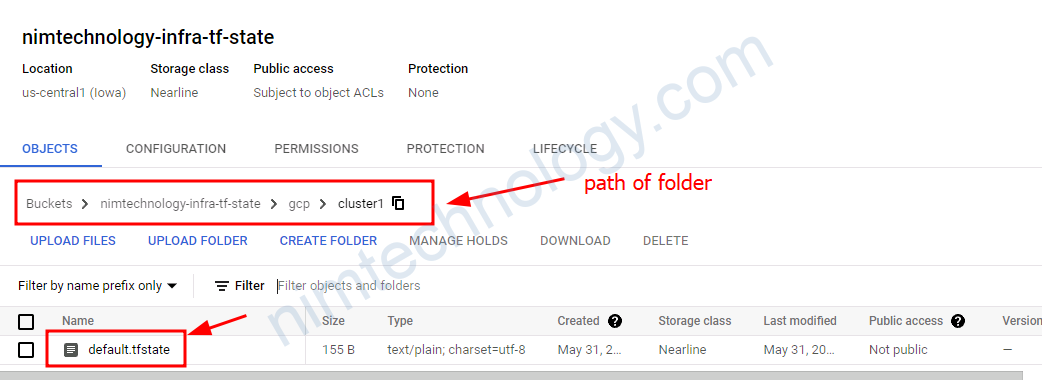

Put simply, when Terraform runs it produces a state file, terraform.tfstate. We want that file stored on GCS so that it is safe and so that multiple users can work with the same state.

bucket = "nimtechnology-infra-tf-state": the name of the bucket you created in the previous step.

prefix = "gcp/cluster1": Terraform stores the state under the gcp/cluster1 path in the bucket; with the default workspace the object is gcp/cluster1/default.tfstate.
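One practical benefit of a remote backend is that another Terraform configuration can read this state. The sketch below shows how that could look with the `terraform_remote_state` data source; the consumer folder and the output name `network_name` are assumptions for illustration, and the value only exists if the cluster1 root module declares such an output:

```hcl
# Hypothetical consumer configuration living in a different folder.
data "terraform_remote_state" "cluster1" {
  backend = "gcs"

  config = {
    bucket = "nimtechnology-infra-tf-state"
    prefix = "gcp/cluster1"
  }
}

# Assumption: the cluster1 root module declares an output named "network_name".
output "cluster1_network_name" {
  value = data.terraform_remote_state.cluster1.outputs.network_name
}
```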

2) Network

2.1) VPC

Now we create the VPC for the k8s clusters.

We use a module to work with Terraform:

https://registry.terraform.io/modules/terraform-google-modules/network/google/

│ Error: Error when reading or editing Project Service terraform-gcp-346216/container.googleapis.com: Error disabling service "container.googleapis.com" for project "terraform-gcp-346216": googleapi: Error 403: Permission denied to disable service [container.googleapis.com]

│ Help Token: Ae-hA1MssoJk-qR0nbaoWhH2sayf7Cfc0xLVIbXy5yyfDQICgiR0Ht7Aybs_MWWYP5Qo2IyNPHmUja1ZxPTkzVnj1KeUzcdby4fOmMB-uEkVLiHS

│ Details:

│ [

│ {

│ "@type": "type.googleapis.com/google.rpc.PreconditionFailure",

│ "violations": [

│ {

│ "subject": "110002",

│ "type": "googleapis.com"

│ }

│ ]

│ },

│ {

│ "@type": "type.googleapis.com/google.rpc.ErrorInfo",

│ "domain": "serviceusage.googleapis.com",

│ "reason": "AUTH_PERMISSION_DENIED"

│ }

│ ]

│ , forbidden

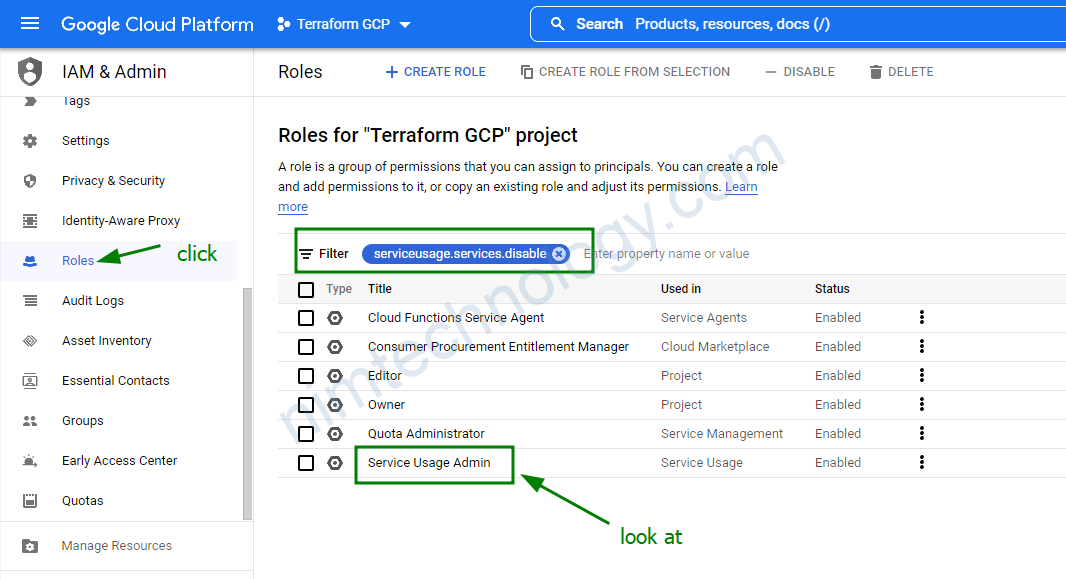

Pay attention to this line: Error when reading or editing Project Service

According to a reference answer:

https://stackoverflow.com/questions/70807862/how-to-solve-error-when-reading-or-editing-project-service-foo-container-google

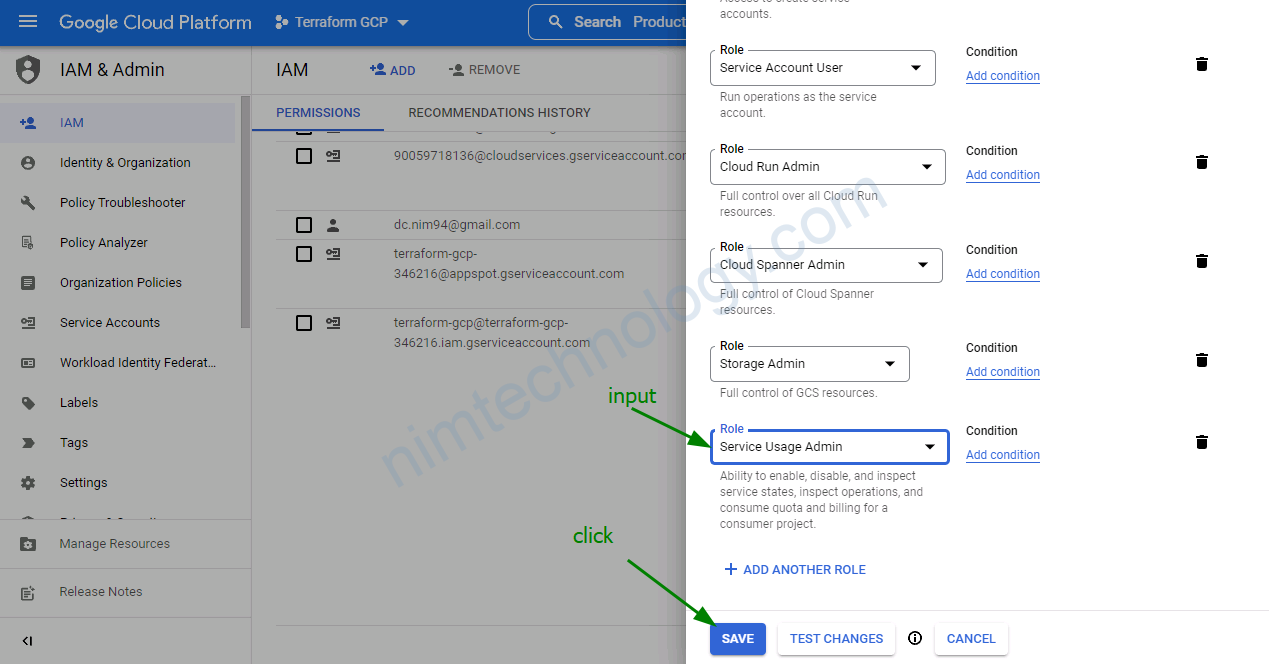

We add the Service Usage Admin role to the user.
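The same grant can be expressed in Terraform instead of the console. This is only a sketch: it assumes Terraform runs as the service account shown, and it has to be applied by an identity that can already edit the project's IAM policy (for example a project owner), since the account that lacks the role cannot grant it to itself:

```hcl
resource "google_project_iam_member" "terraform_service_usage_admin" {
  project = "terraform-gcp-346216"
  role    = "roles/serviceusage.serviceUsageAdmin"

  # Assumption: Terraform runs as this service account.
  member = "serviceAccount:terraform-gcp@terraform-gcp-346216.iam.gserviceaccount.com"
}
```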

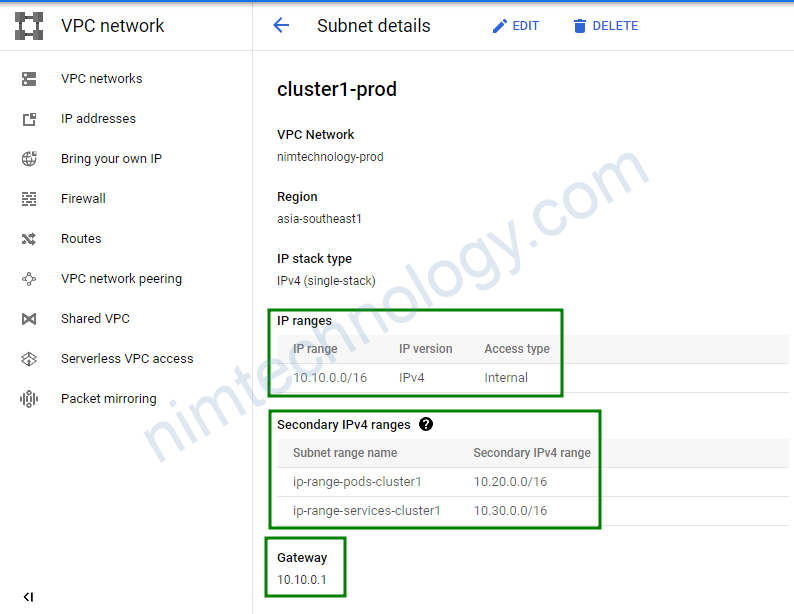

The Terraform config files for the VPC:

02-vpc.tf

>>>>>>>>>>>>>>>

module "gcp-network" {

source = "terraform-google-modules/network/google"

version = "5.1.0"

project_id = var.project_id

network_name = "${var.network}-${var.env_name}"

routing_mode = "GLOBAL"

delete_default_internet_gateway_routes = true

subnets = [

{

subnet_name = "${var.subnetwork}-${var.env_name}"

subnet_ip = "10.10.0.0/16"

subnet_region = var.region

subnet_private_access = "true"

},

]

routes = [

{

name = "${var.subnetwork}-egress-internet"

description = "Default route to the Internet"

destination_range = "0.0.0.0/0"

next_hop_internet = "true"

},

]

secondary_ranges = {

"${var.subnetwork}-${var.env_name}" = [

{

range_name = var.ip_range_pods_name

ip_cidr_range = "10.20.0.0/16"

},

{

range_name = var.ip_range_services_name

ip_cidr_range = "10.30.0.0/16"

},

]

}

}

A brief explanation:

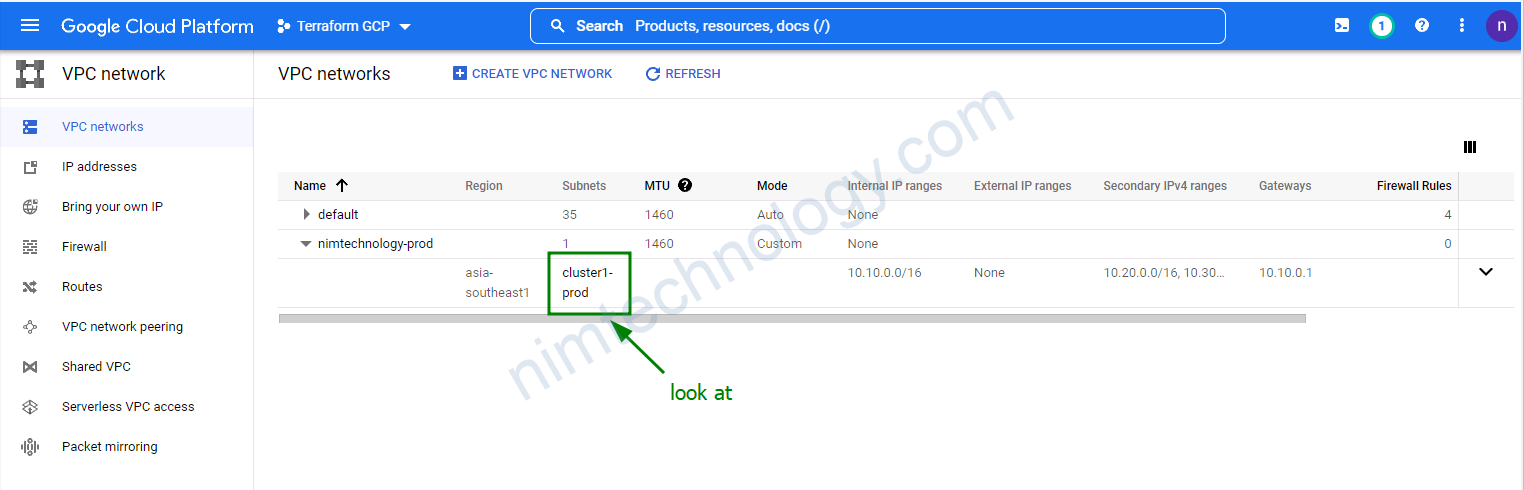

network_name = "${var.network}-${var.env_name}": this names the parent network (the VPC). Inside the parent network you carve out several subnetworks, one per k8s cluster.

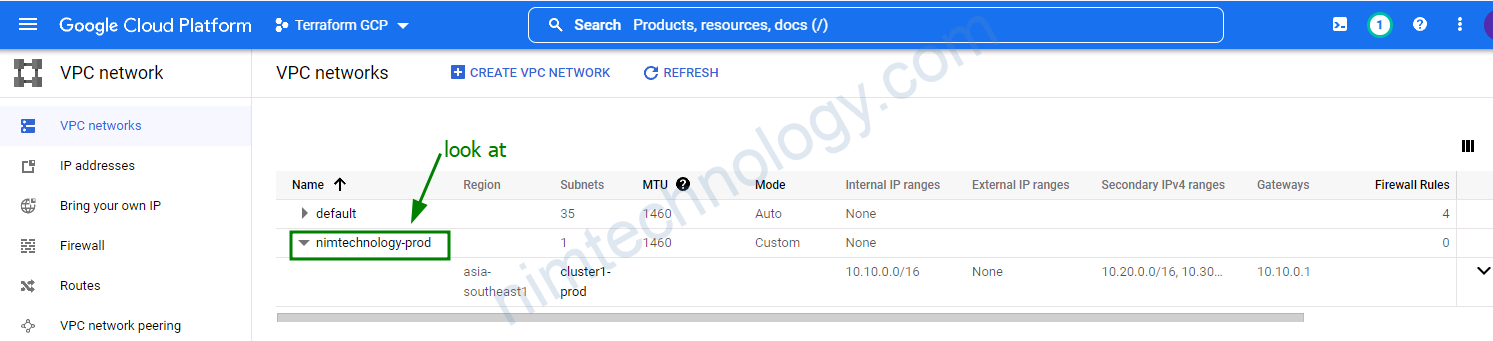

After running terraform apply:

There is another option, shared_vpc, which is typically used by companies that have a GCP organization:

https://registry.terraform.io/modules/terraform-google-modules/project-factory/google/latest/examples/shared_vpc

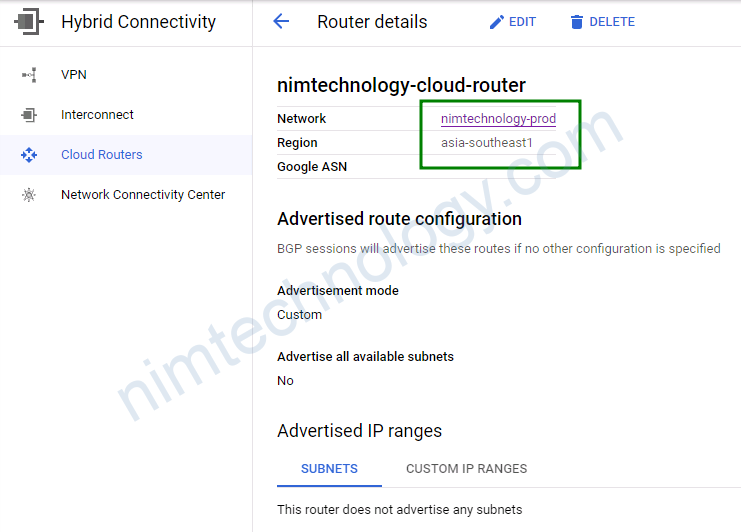

2.2) Create Cloud Router

https://registry.terraform.io/providers/hashicorp/google/latest/docs/resources/compute_router

For the nodes and pods to reach the outside world, we need to create a Cloud Router.

03-cloud-router.tf

>>>>>>>>>>>>>

// this is needed to allow a private gke cluster access to the internet (e.g. pull images)

resource "google_compute_router" "router" {

name = "${var.network}-cloud-router"

network = module.gcp-network.network_self_link

region = var.region

}

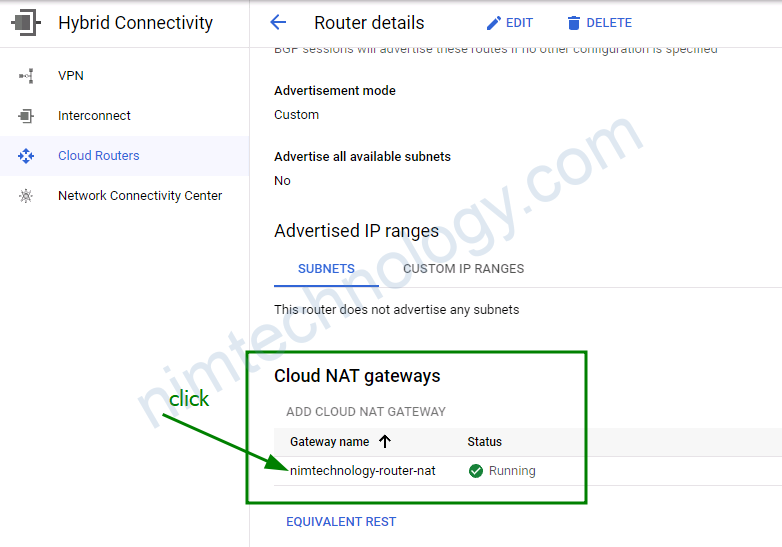

2.3) Cloud NAT

// this is the address the nat will use externally

resource "google_compute_address" "nat_address" {

name = "${var.network}-nat-address"

region = var.region

}

// this is needed to allow a private gke cluster access to the internet (e.g. pull images)

resource "google_compute_router_nat" "nat" {

name = "${var.network}-router-nat"

router = google_compute_router.router.name

nat_ip_allocate_option = "MANUAL_ONLY"

nat_ips = [google_compute_address.nat_address.self_link]

source_subnetwork_ip_ranges_to_nat = "ALL_SUBNETWORKS_ALL_IP_RANGES"

region = var.region

}

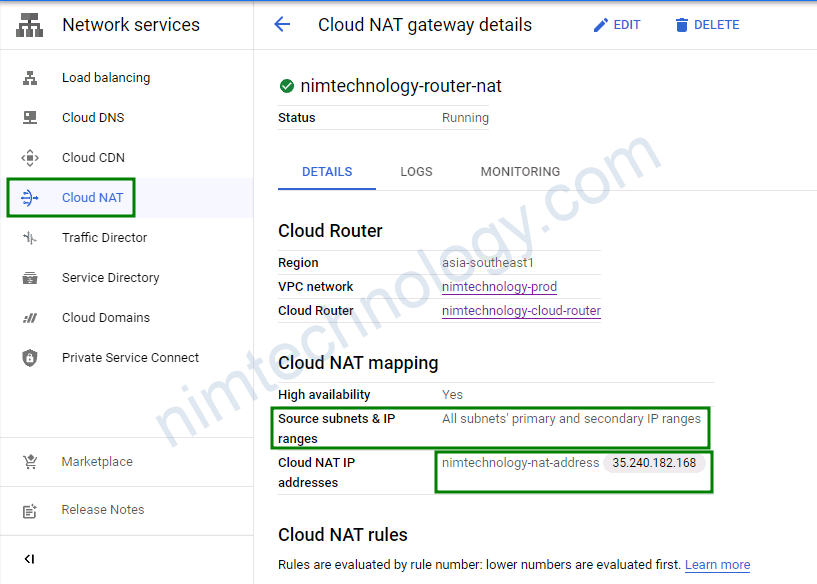

In the Cloud Router config you can see the Cloud NAT attached to it.

The Cloud NAT source is every private IP in the primary and secondary ranges of all subnetworks.

Outbound traffic leaves through a single public IP.

And this is the variables file, 00-variable.tf:
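Since all egress shares one address, it can be handy to expose that address, for example to allowlist it on an external service. A small sketch, assuming the `google_compute_address.nat_address` resource defined above; the output name is made up for illustration:

```hcl
output "nat_external_ip" {
  description = "Public IP that egress traffic from the private subnets appears to come from"
  value       = google_compute_address.nat_address.address
}
```

After an apply, `terraform output nat_external_ip` should print the reserved address.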

variable "region" {

default = "asia-southeast1"

}

variable "project_id" {

default = "terraform-gcp-346216"

}

variable network {

type = string

default = "nimtechnology"

description = "this is a main VPC"

}

variable env_name {

type = string

default = "prod"

description = "This is Production environment"

}

variable subnetwork {

type = string

default = "cluster1"

description = "subnetwork name of cluster 1"

}

variable "ip_range_pods_name" {

description = "The secondary ip range to use for pods of cluster1"

default = "ip-range-pods-cluster1"

}

variable "ip_range_services_name" {

description = "The secondary ip range to use for services of cluster1"

default = "ip-range-services-cluster1"

}

variable "node_locations" {

default = [

"asia-southeast1-a",

"asia-southeast1-b",

"asia-southeast1-c",

]

}

3) Cluster K8s

3.1) Common Errors

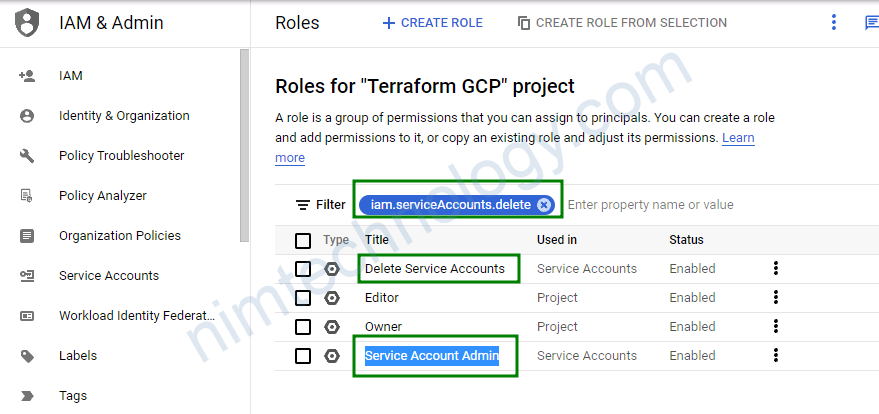

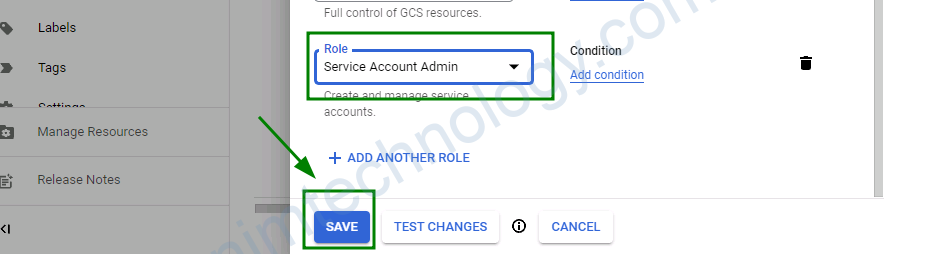

╷ │ Error: googleapi: Error 403: Permission iam.serviceAccounts.delete is required to perform this operation on service account projects/terraform-gcp-346216/serviceAccounts/spinnaker-gcs@terraform-gcp-346216.iam.gserviceaccount.com., forbidden

If you hit the error above when deleting, you need to grant an additional role:

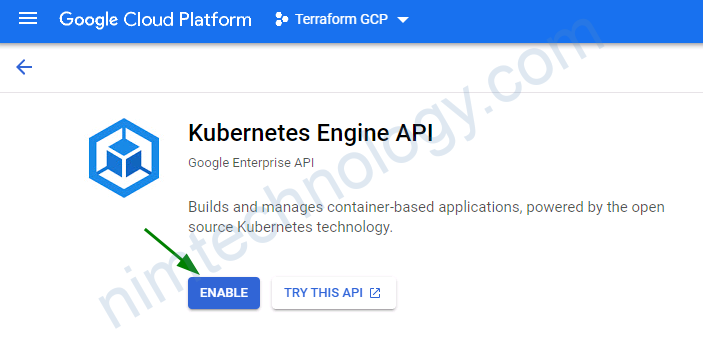

│ Error: Error retrieving available container cluster versions: googleapi: Error 403: Kubernetes Engine API has not been used in project 90059718136 before or it ible it by visiting https://console.developers.google.com/apis/api/container.googleapis.com/overview?project=90059718136 then retry. If you enabled this API recentlinutes for the action to propagate to our systems and retry.
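The error above means the Kubernetes Engine API is not enabled in the project yet. Besides enabling it in the console, it could be enabled from Terraform. A minimal sketch, assuming the identity running Terraform holds the Service Usage Admin role; the resource name is illustrative:

```hcl
resource "google_project_service" "container" {
  project = var.project_id
  service = "container.googleapis.com"

  # Leave the API enabled when this resource is destroyed;
  # disabling a service needs extra permissions.
  disable_on_destroy = false
}
```

On Terraform 0.13 or later, the cluster module can list this resource in `depends_on` so the API is enabled before the cluster is created.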

>>> >>> Error: expected "private_cluster_config.0.master_ipv4_cidr_block" to contain a network Value with between 28 and 28 significant bits, got: 16

I changed the config as follows:

variable "master_cidr" {

default = "10.10.0.0/28"

}

>>>>>>>>>> >>>>>>> googleapi: Error 400: Master version "1.19.14-gke.1900" is unsupported., badRequest

googleapi: Error 404: Not found: project "terraform-gcp-346216" does not have a subnetwork named "cluster1" in region "asia-southeast1"., notFound

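For the error above, re-check the VPC: the subnetwork created by the VPC module is named "${var.subnetwork}-${var.env_name}" (cluster1-prod), not cluster1.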

>>>>>>> >>>>>>> googleapi: Error 400: Network "nimtechnology" does not exist., badRequest

>>>>>>> >>>>>>>>> Error waiting for creating GKE cluster: The given master_ipv4_cidr 10.10.0.0/28 overlaps with an existing network 10.10.0.0/16.

For this error, change master_ipv4_cidr_block so that it does not overlap any subnet range of the VPC. The final config in this post uses 10.40.0.0/28.
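The /28 requirement from the earlier error can be caught at plan time with a variable validation block (Terraform 0.13 or later). This is a sketch, not part of the original config; it only checks the format and prefix length, and it cannot detect an overlap with the subnet ranges:

```hcl
variable "master_cidr" {
  type        = string
  default     = "10.40.0.0/28"
  description = "CIDR for the GKE control plane; must be a /28 outside every subnet range"

  validation {
    # cidrnetmask fails on a malformed IPv4 CIDR; regex fails unless the prefix length is 28.
    condition     = can(cidrnetmask(var.master_cidr)) && can(regex("/28$", var.master_cidr))
    error_message = "The master_cidr value must be a valid IPv4 CIDR block with a /28 prefix."
  }
}
```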

3.2) Prepare Knowledge.

3.2.1) Node pool

First we need to understand node_pools; here is a sample config.

node_pools = [

{

name = "default-node-pool"

machine_type = "e2-custom-4-4096"

version = "1.21.11-gke.1100"

min_count = 0

max_count = 8

disk_size_gb = 40

disk_type = "pd-ssd"

image_type = "COS"

auto_repair = true

auto_upgrade = false

preemptible = false

initial_node_count = 1

},

Now I will explain a few of the settings.

machine_type: chooses how much CPU and RAM each worker node has.

Most setups I see use a custom type, which lets you pick the CPU and RAM you want instead of a predefined shape.

E.g. machine_type = "e2-custom-4-4096":

– You choose the E2 series; the other series are listed at the link below

https://cloud.google.com/compute/docs/machine-types

– Each node gets 4 vCPUs and 4 GB of RAM (4096 MB).

machine_type = "custom-8-19456"

– With this config, each node gets 8 vCPUs and 19 GB of RAM (19456 MB); with no series prefix, the custom type belongs to the N1 series.
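The naming rule for custom machine types can be written down as a small computation. The sketch below builds the E2 name from a vCPU count and a memory size in GB; the local names are made up for illustration:

```hcl
locals {
  node_vcpus     = 4
  node_memory_gb = 4

  # Custom machine types take memory in MB: "<series>-custom-<vCPUs>-<memory MB>".
  machine_type = "e2-custom-${local.node_vcpus}-${local.node_memory_gb * 1024}"
}

output "machine_type" {
  value = local.machine_type # "e2-custom-4-4096"
}
```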

version = "1.21.11-gke.1100": this is the K8s version of the node pool.

min_count = 0

max_count = 8

In the node_pools config, autoscaling defaults to true,

and min_count and max_count are the autoscaling bounds for the node pool.

disk_size_gb: Size of the disk attached to each node, specified in GB. The smallest allowed disk size is 10GB

disk_type: Type of the disk attached to each node (e.g. 'pd-standard' or 'pd-ssd'); the default is pd-standard.

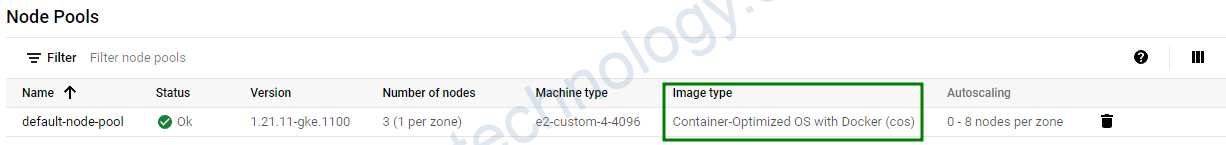

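image_type = "COS": when the pool is created it shows up as in the screenshot here.

auto_upgrade = false: clearly, we do not want to wake up one morning and find that the worker nodes upgraded themselves.

preemptible = false: preemptible nodes are an interesting way to save money on Google Cloud; they are cheaper, but Google can reclaim them at any time and they run for at most 24 hours.

To see how this plays out in practice, you can read this post:

"Reducing the chance of many preemptible nodes being terminated at the same time in a GKE cluster"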

3.2.2) node_pools_labels

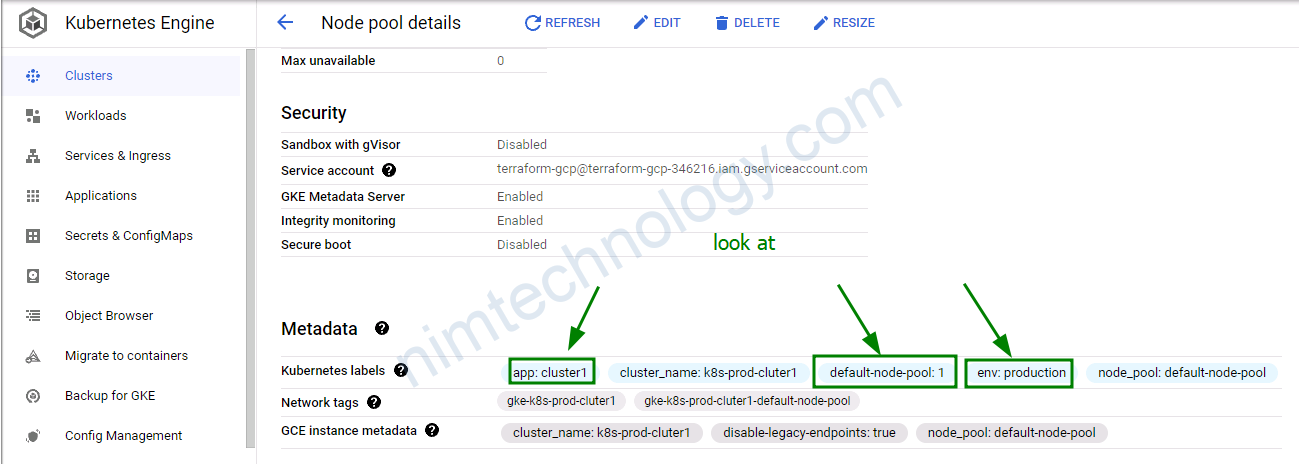

Map of maps containing node labels by node-pool name

We have a config like this:

node_pools_labels = {

all = {

env = "production"

app = "cluster1"

}

default-node-pool = {

default-node-pool = "1"

}

...

With all = {}, the labels are added to every node in the cluster.

<node pool name> = {}: the labels are added only to nodes that belong to the named node pool,

as in the case below:

default-node-pool = {

default-node-pool = "1"

}

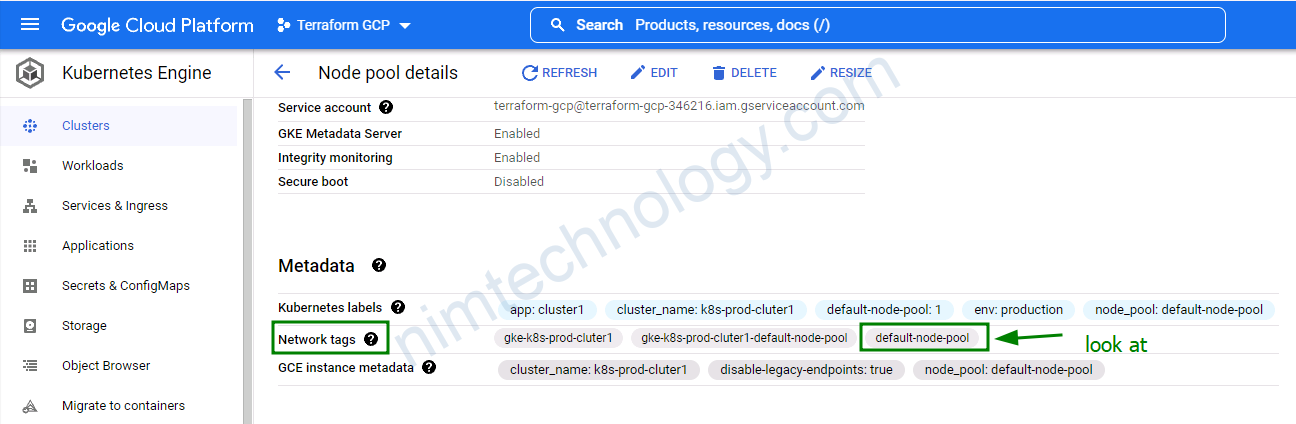

3.2.3) node_pools_tags

Map of lists containing node network tags by node-pool name

Here is the config I use:

node_pools_tags = {

all = []

default-node-pool = [

"default-node-pool",

]

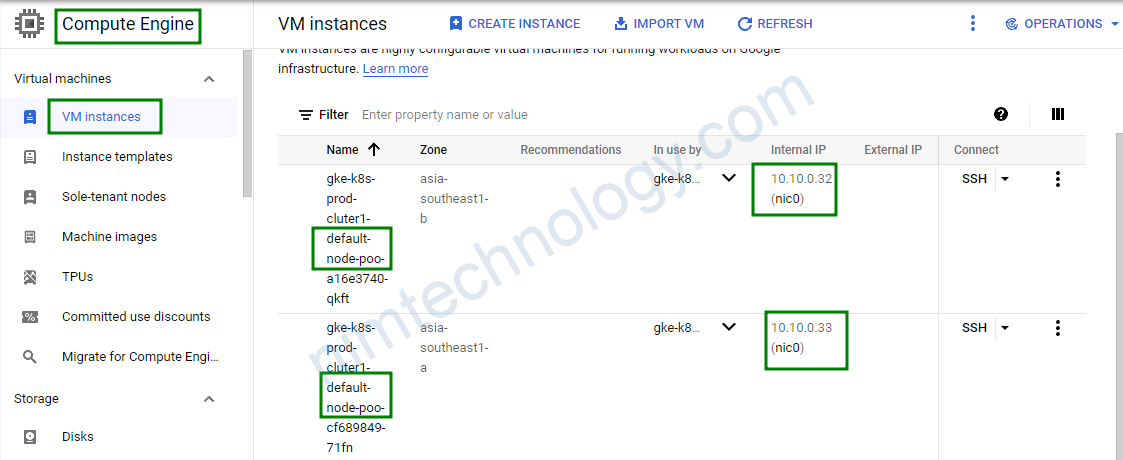

You can verify it in the screenshot:
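Network tags become useful once a firewall rule targets them. The sketch below allows internal traffic to reach only the nodes tagged `default-node-pool`; the rule name, the source range, and the port range are assumptions chosen for illustration:

```hcl
resource "google_compute_firewall" "allow_internal_to_default_pool" {
  name    = "allow-internal-to-default-node-pool" # hypothetical name
  project = var.project_id
  network = "${var.network}-${var.env_name}"

  # Applies only to VMs carrying this network tag, i.e. nodes of default-node-pool.
  target_tags   = ["default-node-pool"]
  source_ranges = ["10.0.0.0/8"]

  allow {
    protocol = "tcp"
    ports    = ["30000-32767"] # assumption: the default NodePort range
  }
}
```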

3.2.4) master_authorized_networks

List of master authorized networks. If none are provided, disallow external access (except the cluster node IPs, which GKE automatically whitelists).

This setting controls which networks (CIDR ranges, for example those of a VPC) are allowed to connect to the k8s cluster's control plane.

master_authorized_networks = [

{

cidr_block = "10.0.0.0/8"

display_name = "allow-all-private"

},

When you set

enable_private_endpoint = true

enable_private_nodes = true

you must also configure master_authorized_networks.

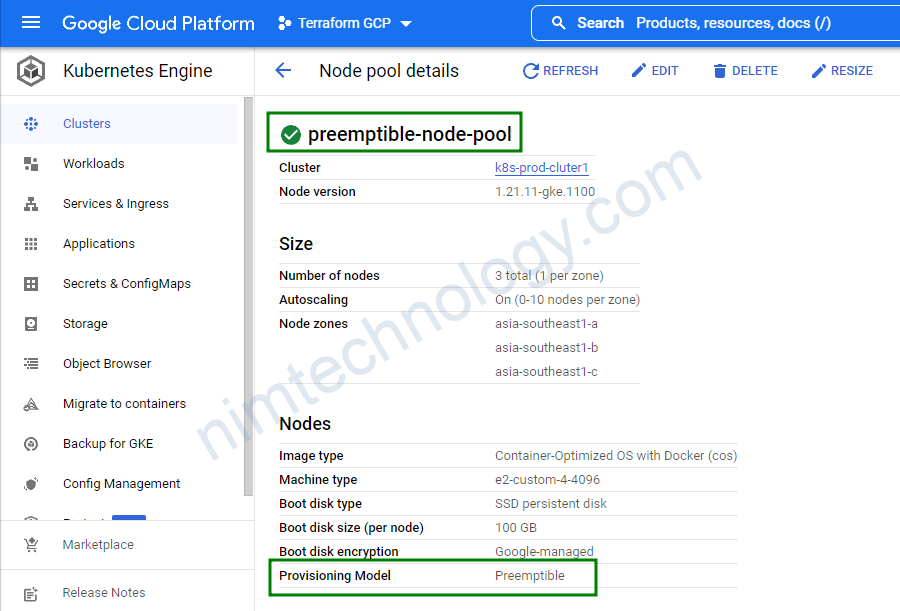

3.3) Add a new preemptible node pool.

Here I create a preemptible node pool; the config is simple.

node_pools = [

.....

},

{

name = "preemptible-node-pool"

machine_type = "e2-custom-4-4096"

version = "1.21.11-gke.1100"

min_count = 0

max_count = 10

disk_size_gb = 100

disk_type = "pd-ssd"

image_type = "COS"

auto_repair = true

auto_upgrade = false

preemptible = true

initial_node_count = 1

},

node_pools_labels = {

all = {

env = "production"

app = "cluster1"

}

....

preemptible-node-pool = {

preemptible-pool = "1"

}

node_pools_tags = {

all = []

....

preemptible-node-pool = [

"preemptible-node-pool",

]

}

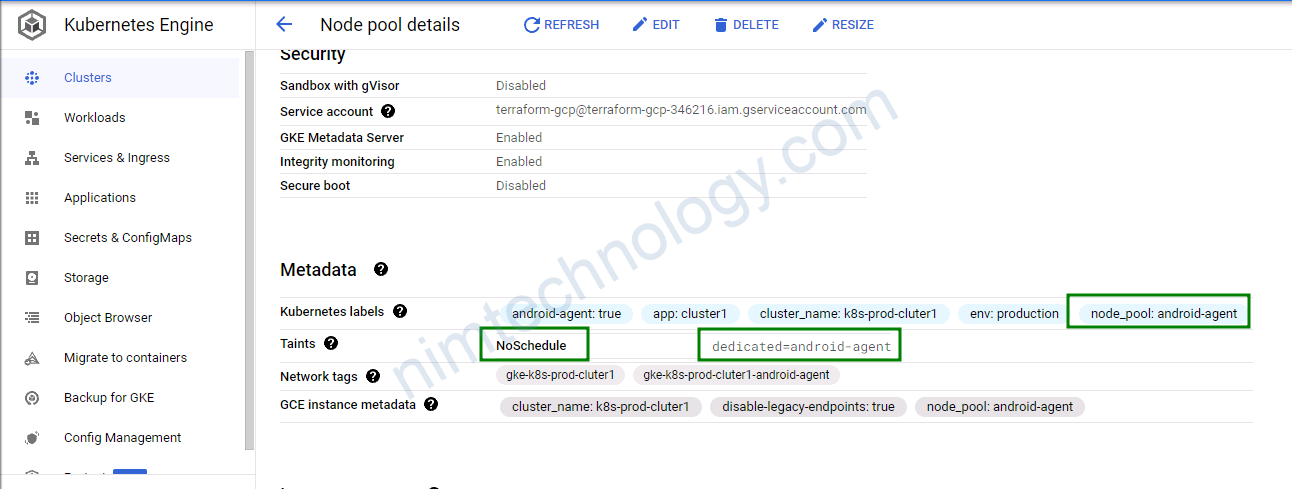

3.4) Create a new node pool with taints

A cluster usually has some nodes with very large RAM or CPU that are reserved for specific workloads.

node_pools = [

......

{

name = "android-agent"

machine_type = "custom-16-32768"

version = "1.19.10-gke.1600"

node_locations = "asia-southeast1-b"

min_count = 0

max_count = 6

disk_size_gb = 30

disk_type = "pd-balanced"

image_type = "COS"

auto_repair = true

auto_upgrade = false

preemptible = false

},

node_pools_labels = {

all = {

env = "production"

app = "cluster1"

.....

android-agent = {

android-agent = "true"

}

node_pools_taints = {

.....

android-agent = [

{

key = "dedicated"

value = "android-agent"

effect = "NO_SCHEDULE"

},

]

.....

}
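A taint only keeps pods away; a workload that should land on the tainted pool needs a matching toleration, plus a node selector if it must run only there. The sketch below uses the hashicorp/kubernetes provider, which this post does not otherwise configure, so the provider setup, the pod name, and the image are all assumptions:

```hcl
# Assumes the hashicorp/kubernetes provider is configured against this cluster.
resource "kubernetes_pod" "android_agent_example" {
  metadata {
    name = "android-agent-example" # hypothetical
  }

  spec {
    # Matches the label set in node_pools_labels.
    node_selector = {
      "android-agent" = "true"
    }

    # Matches the taint set in node_pools_taints (NO_SCHEDULE appears as NoSchedule on the node).
    toleration {
      key      = "dedicated"
      operator = "Equal"
      value    = "android-agent"
      effect   = "NoSchedule"
    }

    container {
      name    = "agent"
      image   = "busybox:1.36" # placeholder image
      command = ["sleep", "3600"]
    }
  }
}
```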

You can explore further in the GKE release notes:

https://cloud.google.com/kubernetes-engine/docs/release-notes

service_account: The service account to run nodes as if not overridden in node_pools. The create_service_account variable default value (true) will cause a cluster-specific service account to be created.

Summary

We will have the file 00-variable.tf

variable "region" {

default = "asia-southeast1"

}

variable "project_id" {

default = "terraform-gcp-346216"

}

variable network {

type = string

default = "nimtechnology"

description = "this is a main VPC"

}

variable env_name {

type = string

default = "prod"

description = "This is Production environment"

}

variable subnetwork {

type = string

default = "cluster1"

description = "subnetwork name of cluster 1"

}

variable "ip_range_pods_name" {

description = "The secondary ip range to use for pods of cluster1"

default = "ip-range-pods-cluster1"

}

variable "ip_range_services_name" {

description = "The secondary ip range to use for services of cluster1"

default = "ip-range-services-cluster1"

}

variable "node_locations" {

default = [

"asia-southeast1-a",

"asia-southeast1-b",

"asia-southeast1-c",

]

}

variable "master_cidr" {

default = "10.40.0.0/28"

}

variable "compute_engine_service_account" {

default = "terraform-gcp@terraform-gcp-346216.iam.gserviceaccount.com"

}

variable ssh_user {

type = string

default = "root"

}

variable "key_pairs" {

type = map

default = {

root_public_key = "keys/id_rsa.pub",

root_private_key = "keys/root_id_ed25519"

}

}

Next is the file 02-k8s_cluster1.tf

module "k8s_prod_cluster1" {

source = "terraform-google-modules/kubernetes-engine/google//modules/beta-private-cluster"

version = "17.2.0"

project_id = var.project_id

name = "k8s-${var.env_name}-cluter1"

regional = true

region = var.region

zones = var.node_locations

network = "${var.network}-${var.env_name}"

# network_project_id = var.network_project_id # this field is for organizations

subnetwork = "${var.subnetwork}-${var.env_name}"

ip_range_pods = var.ip_range_pods_name

ip_range_services = var.ip_range_services_name

enable_private_endpoint = true

enable_private_nodes = true

network_policy = false

issue_client_certificate = false

remove_default_node_pool = true

master_global_access_enabled = false

kubernetes_version = "1.21.11-gke.1100"

master_ipv4_cidr_block = var.master_cidr

service_account = var.compute_engine_service_account

create_service_account = false

maintenance_start_time = "19:00"

identity_namespace = "${var.project_id}.svc.id.goog"

node_metadata = "GKE_METADATA_SERVER"

enable_shielded_nodes = false

dns_cache = true

enable_vertical_pod_autoscaling = true

gce_pd_csi_driver = true

datapath_provider = "DATAPATH_PROVIDER_UNSPECIFIED"

master_authorized_networks = [

{

cidr_block = "10.0.0.0/8"

display_name = "subnet-vm-common-prod"

},

# {

# cidr_block = "10.246.0.0/15"

# display_name = "subnet-k8s-infras-prod-pod"

# },

# {

# cidr_block = "10.250.0.0/15"

# display_name = "subnet-k8s-common-prod-pod"

# },

# {

# cidr_block = "10.8.1.0/24"

# display_name = "subnet-vm-cluster1-prod"

# },

# {

# cidr_block = "10.244.0.0/15"

# display_name = "subnet-k8s-cluster1-prod-pod"

# },

# {

# cidr_block = "10.218.0.0/15"

# display_name = "subnet-k8s-infras-prod-v2-pod"

# },

]

node_pools = [

{

name = "default-node-pool"

machine_type = "e2-custom-4-4096"

version = "1.21.11-gke.1100"

min_count = 0

max_count = 8

disk_size_gb = 40

disk_type = "pd-ssd"

image_type = "COS"

auto_repair = true

auto_upgrade = false

preemptible = false

initial_node_count = 1

},

# {

# name = "preemptible-node-pool"

# machine_type = "e2-custom-4-4096"

# version = "1.21.11-gke.1100"

# min_count = 0

# max_count = 10

# disk_size_gb = 100

# disk_type = "pd-ssd"

# image_type = "COS"

# auto_repair = true

# auto_upgrade = false

# preemptible = true

# initial_node_count = 1

# },

# {

# name = "std8"

# machine_type = "e2-standard-8"

# version = "1.19.10-gke.1600"

# min_count = 0

# max_count = 2

# disk_size_gb = 60

# disk_type = "pd-ssd"

# image_type = "COS"

# auto_repair = true

# auto_upgrade = false

# preemptible = true

# },

# {

# name = "android-agent"

# machine_type = "e2-custom-4-4096"

# version = "1.19.10-gke.1600"

# node_locations = "asia-southeast1-b"

# min_count = 0

# max_count = 6

# disk_size_gb = 30

# disk_type = "pd-balanced"

# image_type = "COS"

# auto_repair = true

# auto_upgrade = false

# preemptible = false

# },

# {

# name = "redis"

# machine_type = "e2-custom-2-8192"

# version = "1.19.14-gke.1900"

# node_locations = "asia-southeast1-b"

# disk_size_gb = 30

# disk_type = "pd-balanced"

# image_type = "COS_CONTAINERD"

# autoscaling = true

# min_count = 0

# max_count = 20

# auto_repair = true

# auto_upgrade = false

# preemptible = false

# },

]

node_pools_oauth_scopes = {

all = [

"https://www.googleapis.com/auth/devstorage.read_only",

"https://www.googleapis.com/auth/logging.write",

"https://www.googleapis.com/auth/monitoring",

"https://www.googleapis.com/auth/service.management.readonly",

"https://www.googleapis.com/auth/servicecontrol",

"https://www.googleapis.com/auth/trace.append",

"https://www.googleapis.com/auth/cloud-platform",

"https://www.googleapis.com/auth/compute",

"https://www.googleapis.com/auth/monitoring.write",

"https://www.googleapis.com/auth/cloud_debugger",

]

}

node_pools_labels = {

all = {

env = "production"

app = "cluster1"

}

default-node-pool = {

default-node-pool = "1"

}

# preemptible-node-pool = {

# preemptible-pool = "1"

# }

# android-agent = {

# android-agent = "true"

# }

# redis = {

# "tiki.services/preemptible" = "false"

# "tiki.services/dedicated" = "redis"

# }

}

node_pools_metadata = {

all = {}

}

# node_pools_taints = {

# std8 = [

# {

# key = "dedicated"

# value = "std8"

# effect = "NO_SCHEDULE"

# },

# ]

# android-agent = [

# {

# key = "dedicated"

# value = "android-agent"

# effect = "NO_SCHEDULE"

# },

# ]

# redis = [

# {

# key = "dedicated"

# value = "redis"

# effect = "NO_SCHEDULE"

# }

# ]

# }

node_pools_tags = {

all = []

default-node-pool = [

"default-node-pool",

]

# preemptible-node-pool = [

# "preemptible-node-pool",

# ]

}

}

#------------------------------------------------------------------------------

# IAM role for k8s clusters

#------------------------------------------------------------------------------

# module "iam_prod_cluster_stackdriver_agent_roles" {

# source = "terraform-google-modules/iam/google//examples/stackdriver_agent_roles"

# version = "3.0.0"

# project = var.project_id

# service_account_email = var.compute_engine_service_account

# }

# resource "google_project_iam_member" "k8s_stackdriver_metadata_writer" {

# role = "roles/stackdriver.resourceMetadata.writer"

# member = "serviceAccount:${var.compute_engine_service_account}"

# project = var.project_id

# }

4) GCS (I am rechecking)

This part is an example for when you want to create a storage class and PVC for the Spinnaker workload.

4.1) Terraform Service Accounts Module

This module allows easy creation of one or more service accounts, and granting them basic roles.

module "sa-prod-cluster1-spinnaker-gcs" {

source = "terraform-google-modules/service-accounts/google"

version = "2.0.0"

project_id = var.project_id

names = ["spinnaker-gcs"]

generate_keys = "true"

project_roles = [

"${var.project_id}=>roles/storage.admin",

]

}

4.2) Terraform Google Cloud Storage Module

This module makes it easy to create one or more GCS buckets, and assign basic permissions on them to arbitrary users.

module "gcs_spinnaker_gcs" {

source = "terraform-google-modules/cloud-storage/google"

version = "3.0.0"

location = var.region

project_id = var.project_id

prefix = ""

names = [join("-", tolist([var.project_id, "spinnaker-config"]))]

storage_class = "REGIONAL"

set_admin_roles = true

bucket_admins = {

join("-", tolist([var.project_id, "spinnaker-config"])) = "serviceAccount:${module.sa-prod-cluster1-spinnaker-gcs.email}"

}

set_viewer_roles = true

bucket_viewers = {

join("-", tolist([var.project_id, "spinnaker-config"])) = "group:gcp.platform@tiki.vn"

}

bucket_policy_only = {

tiki-es-snapshot = true

}

}

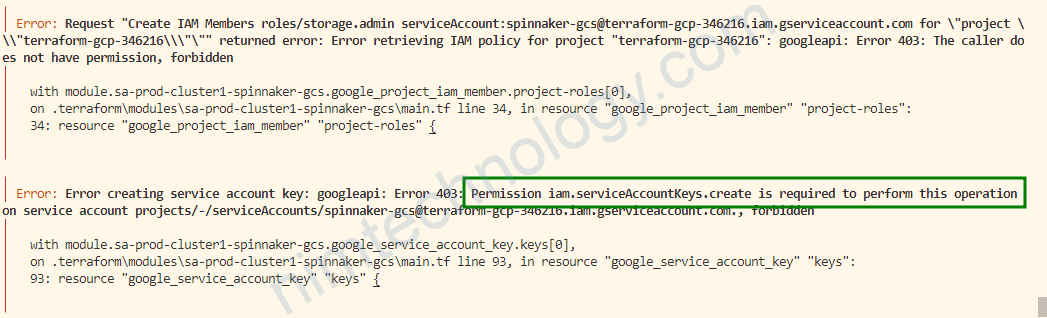

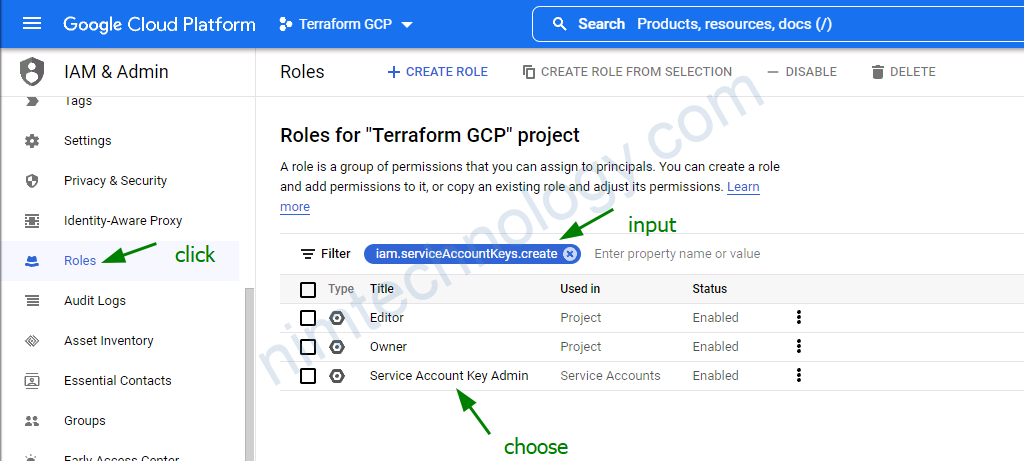

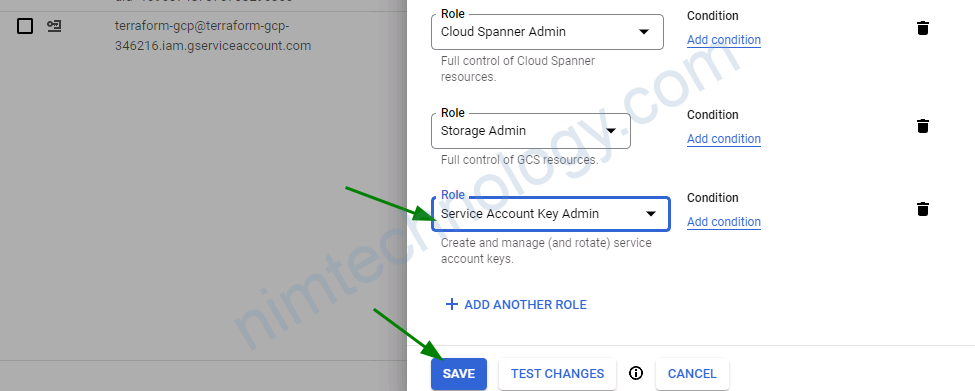

And I hit this error:

╷

│ Error: Error creating service account key: googleapi: Error 403: Permission iam.serviceAccountKeys.create is required to perform this operation

on service account projects/-/serviceAccounts/spinnaker-gcs@terraform-gcp-346216.iam.gserviceaccount.com., forbidden

│

│ with module.sa-prod-cluster1-spinnaker-gcs.google_service_account_key.keys[0],

│ on .terraform\modules\sa-prod-cluster1-spinnaker-gcs\main.tf line 93, in resource "google_service_account_key" "keys":

│ 93: resource "google_service_account_key" "keys" {

│

╵

╷

│ Error: Request "Create IAM Members roles/storage.admin serviceAccount:spinnaker-gcs@terraform-gcp-346216.iam.gserviceaccount.com for \"project \\\"terraform-gcp-346216\\\"\"" returned error: Error retrieving IAM policy for project "terraform-gcp-346216": googleapi: Error 403: The caller does not have permission, forbidden

│

│ with module.sa-prod-cluster1-spinnaker-gcs.google_project_iam_member.project-roles[0],

│ on .terraform\modules\sa-prod-cluster1-spinnaker-gcs\main.tf line 34, in resource "google_project_iam_member" "project-roles":

│ 34: resource "google_project_iam_member" "project-roles" {

│

╵

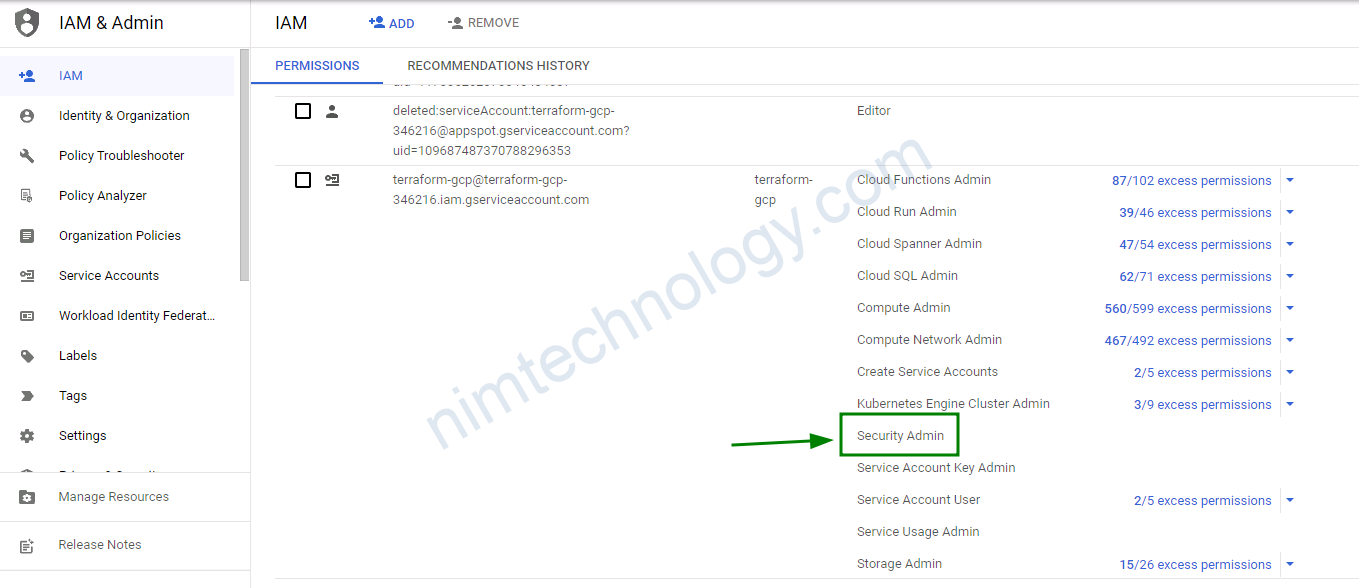

The error above occurs because we are using Terraform to grant roles to Spinnaker's service account.

You should additionally grant the Security Admin role to the user running Terraform.
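As a sketch, the grant could look like the following in Terraform, applied by an identity that can already edit the project's IAM policy. `roles/iam.securityAdmin` is the Security Admin role named in the text; adding `roles/iam.serviceAccountKeyAdmin` is my assumption, since the first error asks for the iam.serviceAccountKeys.create permission:

```hcl
locals {
  terraform_sa_roles = [
    "roles/iam.securityAdmin",          # Security Admin, as suggested in the text
    "roles/iam.serviceAccountKeyAdmin", # assumption: covers iam.serviceAccountKeys.create
  ]
}

resource "google_project_iam_member" "terraform_sa" {
  for_each = toset(local.terraform_sa_roles)

  project = var.project_id
  role    = each.value
  member  = "serviceAccount:${var.compute_engine_service_account}"
}
```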

4.3) terraform apply and recheck.

Once terraform apply finishes without any errors, we go ahead and recheck.

>>>>

5) Firewall

>>>>>>>>>>>>>>>>>>>

04-firewall.tf

>>>>>>>>>>>>>

resource "google_compute_firewall" "ssh-rule" {

name = "ssh"

network = "${var.network}"

project = "${var.project_id}"

allow {

protocol = "tcp"

ports = ["22"]

}

source_ranges = ["0.0.0.0/0"]

}

You can refer to this link:

https://admintuts.net/server-admin/provision-kubernetes-clusters-in-gcp-with-terraform/

google_compute_firewall will create an ssh rule to enable you to ssh to each node.

6) ssh_key or keypair

>>>>>>>>>>>>>>>>>>>

05-project-metadat.tf

>>>>>>>>>>>>>

resource "google_compute_project_metadata" "metadata_nimtechnology_prod" {

project = data.google_project.project_nimtechnology_prod.project_id

metadata = {

ssh-keys = "${var.ssh_user}:${file(var.key_pairs["root_public_key"])}"

}

}
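The resource above reads the project ID from a data source that is not shown in this post. A minimal sketch of what that declaration might look like, assuming it simply looks up the project from `var.project_id`:

```hcl
# Assumed definition of the data source referenced by the metadata resource.
data "google_project" "project_nimtechnology_prod" {
  project_id = var.project_id
}
```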

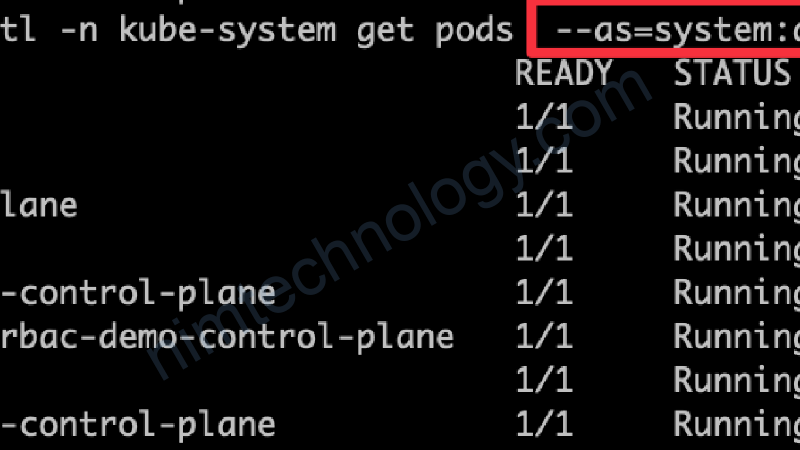

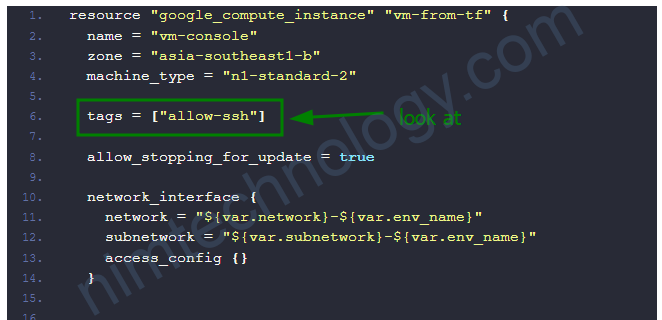

7) Connect to the private K8s cluster (GKE)

As you have seen, my cluster is configured to use a private network.

If the nodes or the control plane were given public IPs, an attacker could hit them directly and take the cluster down.

So now I set up a VM and use it to control and connect to the k8s cluster.

https://binx.io/2022/01/07/how-to-create-a-vm-with-ssh-enabled-on-gcp/

File 02-vm.tf

resource "google_compute_instance" "vm-from-tf" {

name = "vm-console"

zone = "asia-southeast1-b"

machine_type = "n1-standard-2"

tags = ["allow-ssh"]

allow_stopping_for_update = true

network_interface {

network = "${var.network}-${var.env_name}"

subnetwork = "${var.subnetwork}-${var.env_name}"

access_config {}

}

boot_disk {

initialize_params {

image = "debian-9-stretch-v20210916"

size = 35

}

auto_delete = false

}

metadata = {

ssh-keys = "${split("@", var.compute_engine_service_account)[0]}:${file(var.key_pairs["root_public_key"])}"

}

labels = {

"env" = "vm-console"

}

scheduling {

preemptible = false

automatic_restart = false

}

service_account {

email = var.compute_engine_service_account

scopes = [ "cloud-platform" ]

}

lifecycle {

ignore_changes = [

attached_disk

]

}

}

resource "google_compute_disk" "disk-1" {

name = "disk-1"

size = 15

zone = "asia-southeast1-b"

type = "pd-ssd"

}

resource "google_compute_attached_disk" "adisk" {

disk = google_compute_disk.disk-1.id

instance = google_compute_instance.vm-from-tf.id

}

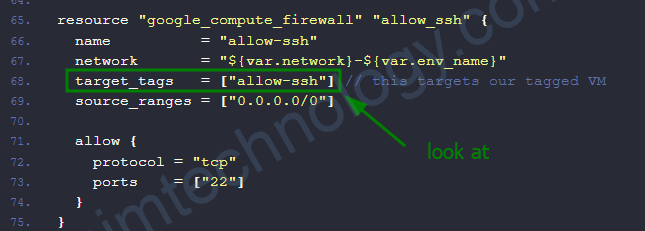

resource "google_compute_firewall" "allow_ssh" {

name = "allow-ssh"

network = "${var.network}-${var.env_name}"

target_tags = ["allow-ssh"] // this targets our tagged VM

source_ranges = ["0.0.0.0/0"]

allow {

protocol = "tcp"

ports = ["22"]

}

}

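The configs above are fairly easy to follow.

One point to note is the SSH key: the ssh-keys metadata uses the part of the service account email before the "@" as the login user.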
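To make the derived login name visible, the same expression can be pulled into a local and printed as an output. This is a sketch; the local and output names are made up, while the instance reference matches the VM defined above:

```hcl
locals {
  # "terraform-gcp@terraform-gcp-346216.iam.gserviceaccount.com" -> "terraform-gcp"
  ssh_username = split("@", var.compute_engine_service_account)[0]
}

output "ssh_target" {
  # nat_ip is the ephemeral public IP created by the empty access_config block.
  value = "${local.ssh_username}@${google_compute_instance.vm-from-tf.network_interface[0].access_config[0].nat_ip}"
}
```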

And the variables file:

variable "region" {

default = "asia-southeast1"

}

variable "project_id" {

default = "terraform-gcp-346216"

}

variable network {

type = string

default = "nimtechnology"

description = "this is a main VPC"

}

variable env_name {

type = string

default = "prod"

description = "This is Production environment"

}

variable subnetwork {

type = string

default = "cluster1"

description = "subnetwork name of cluster 1"

}

variable "ip_range_pods_name" {

description = "The secondary ip range to use for pods of cluster1"

default = "ip-range-pods-cluster1"

}

variable "ip_range_services_name" {

description = "The secondary ip range to use for services of cluster1"

default = "ip-range-services-cluster1"

}

variable "node_locations" {

default = [

"asia-southeast1-a",

"asia-southeast1-b",

"asia-southeast1-c",

]

}

variable "master_cidr" {

default = "10.40.0.0/28"

}

variable "compute_engine_service_account" {

default = "terraform-gcp@terraform-gcp-346216.iam.gserviceaccount.com"

}

variable ssh_user {

type = string

default = "root"

}

variable "key_pairs" {

type = map

default = {

root_public_key = "keys/id_rsa.pub",

root_private_key = "keys/root_id_ed25519"

}

}

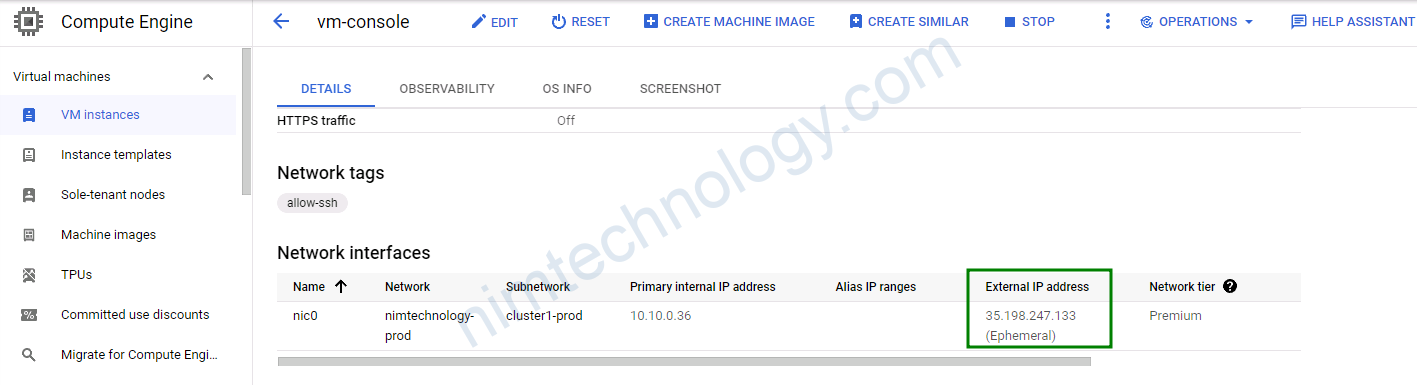

Now we SSH to the VM's public IP over the internet:

ssh terraform-gcp@35.198.247.133 -i /root/.ssh/id_rsa

The user we SSH as is the same user that runs Terraform.

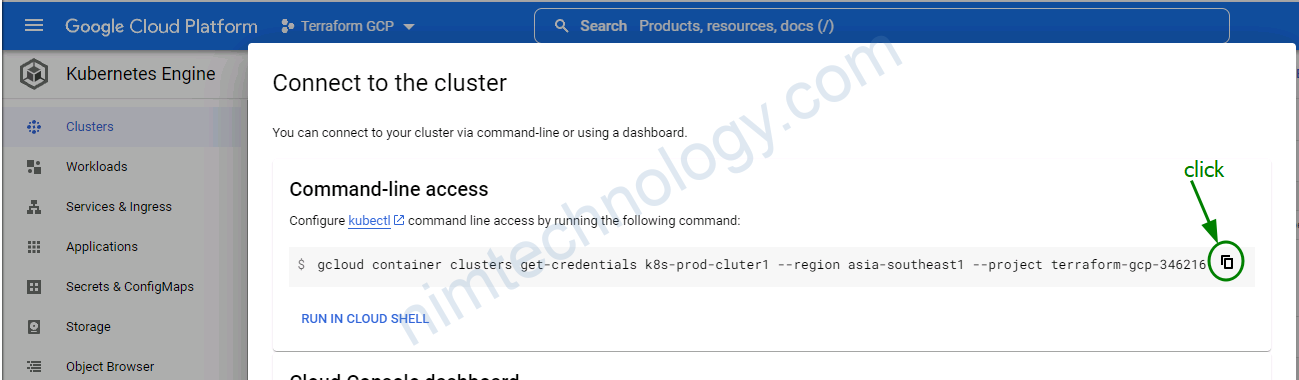

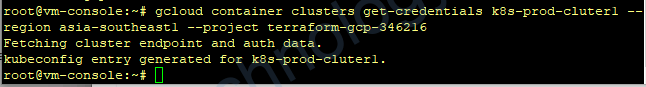

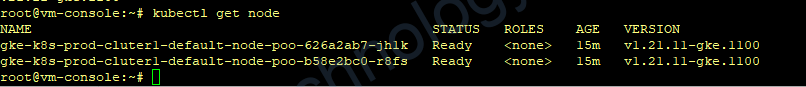

gcloud container clusters get-credentials k8s-prod-cluter1 --region asia-southeast1 --project terraform-gcp-346216

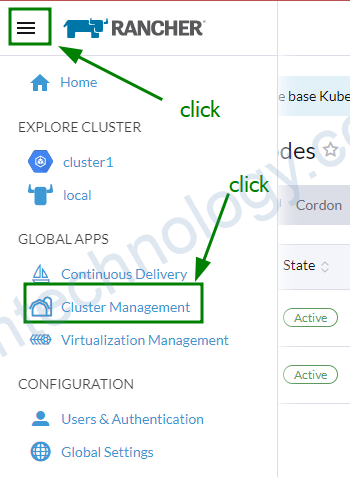

Now I plan to install Rancher on the VM to manage the GKE cluster.

Install Docker on the VM. If you run into this error:

docker-ce : Depends: containerd.io (>= 1.4.1) but it is not going to be installed

curl -O https://download.docker.com/linux/debian/dists/buster/pool/stable/amd64/containerd.io_1.4.3-1_amd64.deb

sudo apt install ./containerd.io_1.4.3-1_amd64.deb

If you do not hit the error above, continue with the next step.

sudo apt update -y

sudo apt install apt-transport-https ca-certificates curl software-properties-common

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -

sudo add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/ubuntu focal stable"

sudo apt update -y

apt-cache policy docker-ce

sudo apt install docker-ce -y

sudo systemctl enable docker

sudo systemctl restart docker

sudo curl -L "https://github.com/docker/compose/releases/download/1.27.4/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose

sudo chmod +x /usr/local/bin/docker-compose

Run one command to install Rancher:

docker run -d --restart=unless-stopped -p 80:80 -p 443:443 --privileged rancher/rancher:latest

Open the public IP in a browser and follow Rancher's instructions to change the password.

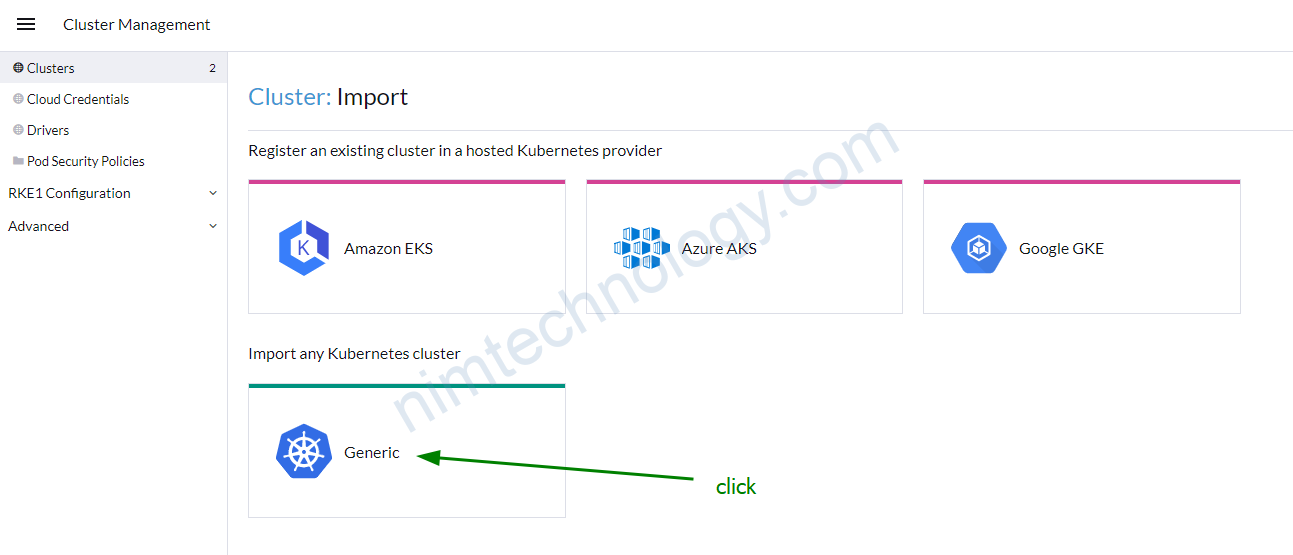

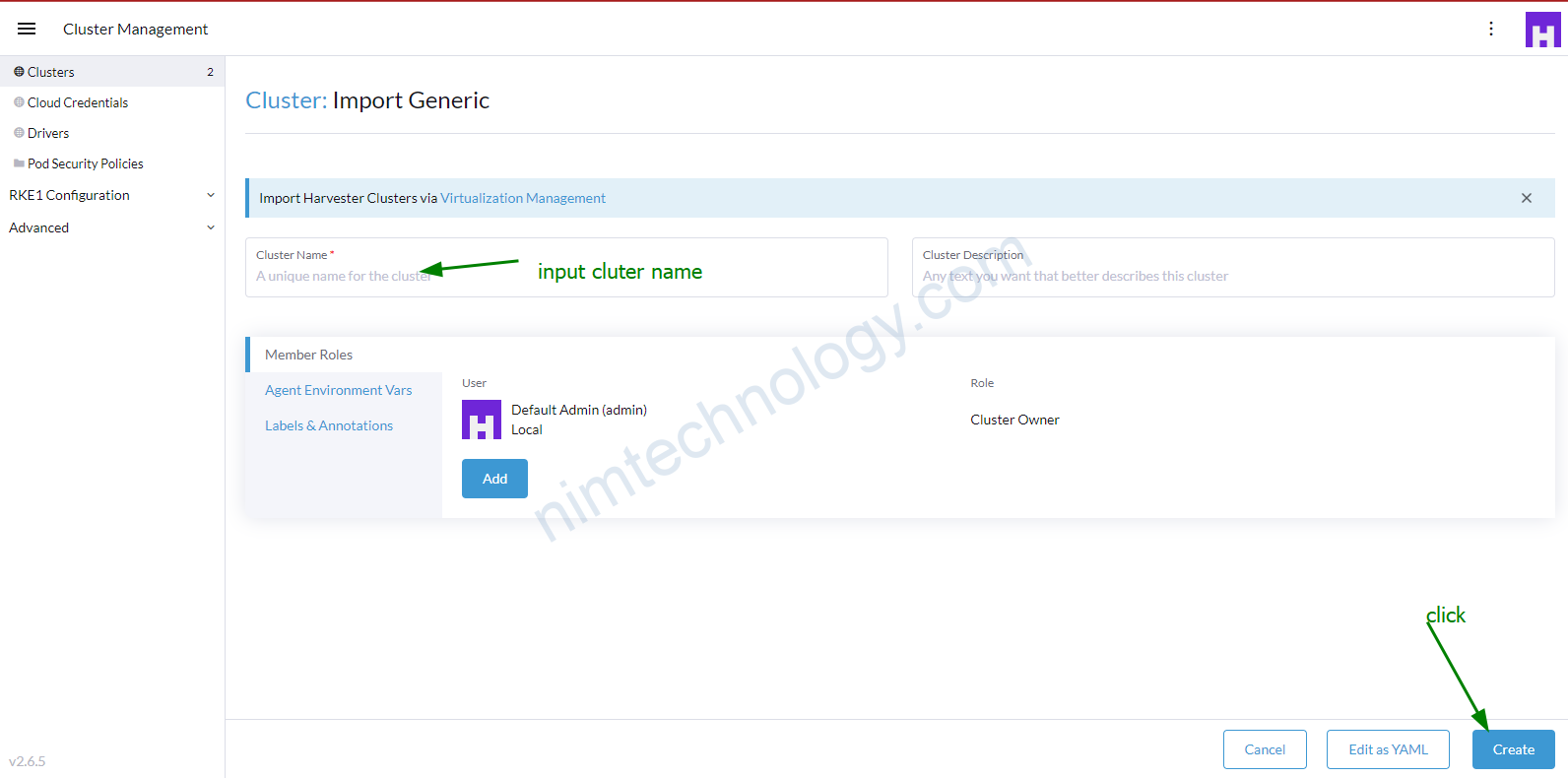

Once login works, import the GKE cluster into Rancher.

Create a Service Account with a JSON private key and provide the JSON here. See Google Cloud docs for more info about creating a service account. These IAM roles are required: Compute Viewer (roles/compute.viewer), (Project) Viewer (roles/viewer), Kubernetes Engine Admin (roles/container.admin), Service Account User (roles/iam.serviceAccountUser). More info on roles can be found here.
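The service account and the four roles listed above could also be created with Terraform rather than by hand. A sketch under assumptions: the account ID `rancher-gke` and the resource names are made up, and the JSON key itself would still have to be created and handed to Rancher separately:

```hcl
resource "google_service_account" "rancher" {
  project      = var.project_id
  account_id   = "rancher-gke" # hypothetical account ID
  display_name = "Rancher GKE import"
}

resource "google_project_iam_member" "rancher" {
  # The four roles Rancher asks for when importing a GKE cluster.
  for_each = toset([
    "roles/compute.viewer",
    "roles/viewer",
    "roles/container.admin",
    "roles/iam.serviceAccountUser",
  ])

  project = var.project_id
  role    = each.value
  member  = "serviceAccount:${google_service_account.rancher.email}"
}
```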

Then apply, and the import succeeds.

https://learnk8s.io/terraform-gke

https://admintuts.net/server-admin/provision-kubernetes-clusters-in-gcp-with-terraform/

https://bitbucket-devops-platform.bearingpoint.com/projects/INFONOVA/repos/terraform-modules/browse

8) Storage Class in GKE – Kubernetes

At the moment I see that GKE creates 3 storage classes for us out of the box.

8.1) kubernetes.io/gce-pd

This is the YAML of the storage class standard:

allowVolumeExpansion: true

apiVersion: storage.k8s.io/v1

kind: StorageClass

metadata:

annotations:

storageclass.kubernetes.io/is-default-class: "true"

labels:

addonmanager.kubernetes.io/mode: EnsureExists

name: standard

parameters:

type: pd-standard

provisioner: kubernetes.io/gce-pd

reclaimPolicy: Delete

volumeBindingMode: Immediate

With this type you can only create PVCs whose access mode is ReadWriteOnce (RWO) or ReadOnlyMany (ROX).

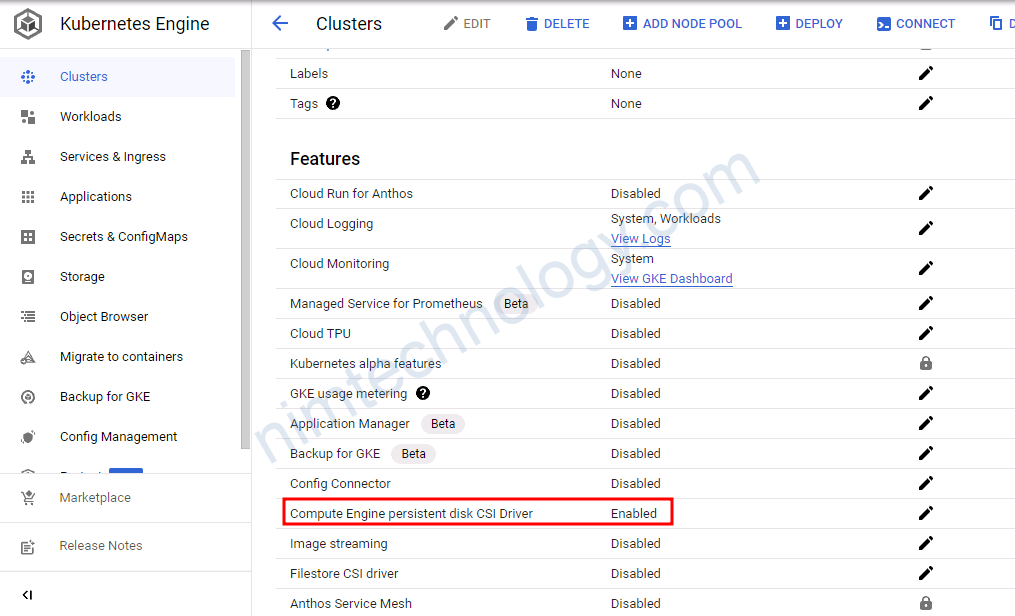

8.2) pd.csi.storage.gke.io

With this type you can likewise only create PVCs whose access mode is ReadWriteOnce (RWO) or ReadOnlyMany (ROX).

You need to enable the Compute Engine Persistent Disk CSI Driver.

In the cluster1 config I already enabled it in Terraform; you can scroll up to check:

gce_pd_csi_driver = true

And this is the storage class content:

apiVersion: storage.k8s.io/v1

kind: StorageClass

metadata:

annotations:

storageclass.kubernetes.io/is-default-class: "false"

labels:

addonmanager.kubernetes.io/mode: EnsureExists

name: balanced-as1b

parameters:

type: pd-balanced

allowVolumeExpansion: true

provisioner: pd.csi.storage.gke.io

reclaimPolicy: Delete

volumeBindingMode: WaitForFirstConsumer

allowedTopologies:

- matchLabelExpressions:

- key: topology.gke.io/zone

values:

- asia-southeast1-b

If you use ReadWriteMany mode when creating the PVC, you get the errors below:

failed to provision volume with StorageClass "standard-rwo": rpc error: code = InvalidArgument desc = VolumeCapabilities is invalid: specified multi writer with mount access type

waiting for a volume to be created, either by external provisioner "pd.csi.storage.gke.io" or manually created by system administrator
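For comparison, a claim that this provisioner accepts uses ReadWriteOnce. The sketch below expresses it with the hashicorp/kubernetes provider, which this post does not otherwise set up; the claim name and size are assumptions, and the storage class name is the balanced-as1b class shown above:

```hcl
# Assumes the hashicorp/kubernetes provider is configured against the cluster.
resource "kubernetes_persistent_volume_claim" "example" {
  metadata {
    name = "example-data" # hypothetical
  }

  spec {
    access_modes       = ["ReadWriteOnce"]
    storage_class_name = "balanced-as1b"

    resources {
      requests = {
        storage = "10Gi"
      }
    }
  }

  # The class uses WaitForFirstConsumer, so the claim stays Pending until a pod uses it;
  # do not block the apply waiting for it to bind.
  wait_until_bound = false
}
```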

8.3) Storage class NFS on Kubernetes

You can refer to this post to configure a storage class backed by NFS.

9) Create multi Kubernetes on GKE (google cloud)

9.1) Create a VPC for another cluster

Naturally, every time we create a new cluster we need to create a VPC for it.

In this lab, though, I keep using the existing VPC and add some subnets for the new cluster.

02-vpc.tf

module "gcp-network" {

source = "terraform-google-modules/network/google"

version = "5.1.0"

project_id = var.project_id

network_name = "${var.network}-${var.env_name}"

routing_mode = "GLOBAL"

delete_default_internet_gateway_routes = true

subnets = [

{

subnet_name = "${var.subnetwork}-${var.env_name}"

subnet_ip = "10.10.0.0/16"

subnet_region = var.region

subnet_private_access = "true"

},

// cluster2

{

subnet_name = "${var.subnetwork_other}-${var.env_name}"

subnet_ip = "10.12.0.0/16"

subnet_region = var.region

subnet_private_access = "true"

},

]

routes = [

{

name = "${var.subnetwork}-egress-internet"

description = "Default route to the Internet"

destination_range = "0.0.0.0/0"

next_hop_internet = "true"

},

]

secondary_ranges = {

"${var.subnetwork}-${var.env_name}" = [

{

range_name = var.ip_range_pods_name

ip_cidr_range = "10.20.0.0/16"

},

{

range_name = var.ip_range_services_name

ip_cidr_range = "10.30.0.0/16"

},

],

// cluster 2

"${var.subnetwork_other}-${var.env_name}" = [

{

range_name = var.ip_range_pods_name_other

ip_cidr_range = "10.22.0.0/16"

},

{

range_name = var.ip_range_services_name_other

ip_cidr_range = "10.32.0.0/16"

},

]

}

//cluster2

}
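
After applying the VPC change, it helps to print what the module actually created so you can confirm the cluster2 subnet and its secondary ranges exist. This is a small sketch, assuming you put it in a hypothetical 03-outputs.tf next to 02-vpc.tf; the output names on the left are my own choice.

```hcl
# Hypothetical outputs file placed beside 02-vpc.tf.
output "network_name" {
  description = "Name of the shared VPC, built from var.network and var.env_name"
  value       = module.gcp-network.network_name
}

output "subnets_names" {
  description = "Should list both the cluster1 and the cluster2 subnet"
  value       = module.gcp-network.subnets_names
}

output "subnets_secondary_ranges" {
  description = "Pod and service ranges attached to each subnet"
  value       = module.gcp-network.subnets_secondary_ranges
}
```

Running terraform output after the apply should then show the 10.12.0.0/16 subnet together with the 10.22.0.0/16 and 10.32.0.0/16 secondary ranges defined above.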

00-variable.tf

variable "region" {

default = "asia-southeast1"

}

variable "project_id" {

default = "terraform-gcp-346216"

}

variable "network" {

type = string

default = "nimtechnology"

description = "this is a main VPC"

}

variable "env_name" {

type = string

default = "prod"

description = "This is Production environment"

}

variable "subnetwork" {

type = string

default = "cluster1"

description = "subnetwork name of cluster 1"

}

variable "ip_range_pods_name" {

description = "The secondary ip range to use for pods of cluster1"

default = "ip-range-pods-cluster1"

}

variable "ip_range_services_name" {

description = "The secondary ip range to use for services of cluster1"

default = "ip-range-services-cluster1"

}

variable "subnetwork_other" {

type = string

default = "cluster2"

description = "subnetwork name of cluster 2"

}

variable "ip_range_pods_name_other" {

description = "The secondary ip range to use for pods of cluster2"

default = "ip-range-pods-cluster2"

}

variable "ip_range_services_name_other" {

description = "The secondary ip range to use for services of cluster2"

default = "ip-range-services-cluster2"

}

variable "node_locations" {

default = [

"asia-southeast1-a",

"asia-southeast1-b",

"asia-southeast1-c",

]

}

9.2) New k8s cluster – GKE

Now it is time to create the cluster.
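
Since cluster2 is its own Terraform project, it needs its own state. The sketch below reuses the provider.tf pattern from the beginning of this post and only changes the prefix. The value "gcp/cluster2" is my assumption, chosen by analogy with "gcp/cluster1"; any prefix works as long as it differs from the one cluster1 uses.

```hcl
terraform {
  required_providers {
    google = {
      source  = "hashicorp/google"
      version = "3.85.0"
    }
  }

  backend "gcs" {
    bucket = "nimtechnology-infra-tf-state"
    # Assumed prefix: keeps cluster2 state apart from gcp/cluster1.
    prefix = "gcp/cluster2"
  }
}
```

With a separate prefix, a terraform apply in the cluster2 folder cannot overwrite the state file that belongs to cluster1.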

00-variable.tf

variable "region" {

default = "asia-southeast1"

}

variable "project_id" {

default = "terraform-gcp-346216"

}

variable "network" {

type = string

default = "nimtechnology"

description = "this is a main VPC"

}

variable "env_name" {

type = string

default = "prod"

description = "This is Production environment"

}

variable "subnetwork" {

type = string

default = "cluster2"

description = "subnetwork name of cluster 2"

}

variable "ip_range_pods_name" {

description = "The secondary ip range to use for pods of cluster2"

default = "ip-range-pods-cluster2"

}

variable "ip_range_services_name" {

description = "The secondary ip range to use for services of cluster2"

default = "ip-range-services-cluster2"

}

variable "node_locations" {

default = [

"asia-southeast1-a",

"asia-southeast1-b",

"asia-southeast1-c",

]

}

variable "master_cidr" {

default = "10.42.0.0/28"

}

variable "compute_engine_service_account" {

default = "terraform-gcp@terraform-gcp-346216.iam.gserviceaccount.com"

}

variable "ssh_user" {

type = string

default = "root"

}

variable "key_pairs" {

type = map(string)

default = {

root_public_key = "keys/id_rsa.pub",

root_private_key = "keys/root_id_ed25519"

}

}
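
The names in these variables have to match what 02-vpc.tf created, otherwise the cluster module cannot find the subnet or its secondary ranges. One way to check this before creating the cluster is a read-only lookup. This is only a sketch: the data source label and the output names are hypothetical.

```hcl
# Read-only lookup of the subnet created in 02-vpc.tf; nothing is created here.
data "google_compute_subnetwork" "cluster2" {
  project = var.project_id
  region  = var.region
  # With the defaults above this resolves to "cluster2-prod".
  name = "${var.subnetwork}-${var.env_name}"
}

output "cluster2_subnet_cidr" {
  value = data.google_compute_subnetwork.cluster2.ip_cidr_range
}

output "cluster2_secondary_ranges" {
  # Should contain the range names stored in var.ip_range_pods_name
  # and var.ip_range_services_name.
  value = data.google_compute_subnetwork.cluster2.secondary_ip_range
}
```

If terraform plan fails on this lookup, the VPC change has not been applied yet or one of the names does not match.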

02-k8s_cluster2.tf

module "k8s_prod_cluster2" {

source = "terraform-google-modules/kubernetes-engine/google//modules/beta-private-cluster"

version = "17.2.0"

project_id = var.project_id

name = "k8s-${var.env_name}-cluter2"

regional = true

region = var.region

zones = var.node_locations

network = "${var.network}-${var.env_name}"

# network_project_id = var.network_project_id # this field is for an organization setup

subnetwork = "${var.subnetwork}-${var.env_name}"

ip_range_pods = var.ip_range_pods_name

ip_range_services = var.ip_range_services_name

enable_private_endpoint = true

enable_private_nodes = true

network_policy = false

issue_client_certificate = false

remove_default_node_pool = true

master_global_access_enabled = false

kubernetes_version = "1.22.8-gke.201"

master_ipv4_cidr_block = var.master_cidr

service_account = var.compute_engine_service_account

create_service_account = false

maintenance_start_time = "19:00"

identity_namespace = "${var.project_id}.svc.id.goog"

node_metadata = "GKE_METADATA_SERVER"

enable_shielded_nodes = false

dns_cache = true

enable_vertical_pod_autoscaling = true

gce_pd_csi_driver = true

datapath_provider = "DATAPATH_PROVIDER_UNSPECIFIED"

master_authorized_networks = [

{

cidr_block = "10.0.0.0/8"

display_name = "subnet-vm-common-prod"

},

]

node_pools = [

{

name = "default-node-pool"

machine_type = "e2-custom-4-4096"

version = "1.22.8-gke.201"

min_count = 0

max_count = 4

disk_size_gb = 40

disk_type = "pd-ssd"

image_type = "COS"

auto_repair = true

auto_upgrade = false

preemptible = false

initial_node_count = 1

},

# {

# name = "preemptible-node-pool"

# machine_type = "e2-custom-4-4096"

# version = "1.21.11-gke.1100"

# min_count = 0

# max_count = 10

# disk_size_gb = 100

# disk_type = "pd-ssd"

# image_type = "COS"

# auto_repair = true

# auto_upgrade = false

# preemptible = true

# initial_node_count = 1

# },

# {

# name = "std8"

# machine_type = "e2-standard-8"

# version = "1.19.10-gke.1600"

# min_count = 0

# max_count = 2

# disk_size_gb = 60

# disk_type = "pd-ssd"

# image_type = "COS"

# auto_repair = true

# auto_upgrade = false

# preemptible = true

# },

# {

# name = "android-agent"

# machine_type = "e2-custom-4-4096"

# version = "1.19.10-gke.1600"

# node_locations = "asia-southeast1-b"

# min_count = 0

# max_count = 6

# disk_size_gb = 30

# disk_type = "pd-balanced"

# image_type = "COS"

# auto_repair = true

# auto_upgrade = false

# preemptible = false

# },

# {

# name = "redis"

# machine_type = "e2-custom-2-8192"

# version = "1.19.14-gke.1900"

# node_locations = "asia-southeast1-b"

# disk_size_gb = 30

# disk_type = "pd-balanced"

# image_type = "COS_CONTAINERD"

# autoscaling = true

# min_count = 0

# max_count = 20

# auto_repair = true

# auto_upgrade = false

# preemptible = false

# },

]

node_pools_oauth_scopes = {

all = [

"https://www.googleapis.com/auth/devstorage.read_only",

"https://www.googleapis.com/auth/logging.write",

"https://www.googleapis.com/auth/monitoring",

"https://www.googleapis.com/auth/service.management.readonly",

"https://www.googleapis.com/auth/servicecontrol",

"https://www.googleapis.com/auth/trace.append",

"https://www.googleapis.com/auth/cloud-platform",

"https://www.googleapis.com/auth/compute",

"https://www.googleapis.com/auth/monitoring.write",

"https://www.googleapis.com/auth/cloud_debugger",

]

}

node_pools_labels = {

all = {

env = "production"

app = "cluster2"

}

default-node-pool = {

default-node-pool = "1"

}

# preemptible-node-pool = {

# preemptible-pool = "1"

# }

# android-agent = {

# android-agent = "true"

# }

# redis = {

# "tiki.services/preemptible" = "false"

# "tiki.services/dedicated" = "redis"

# }

}

node_pools_metadata = {

all = {}

}

# node_pools_taints = {

# std8 = [

# {

# key = "dedicated"

# value = "std8"

# effect = "NO_SCHEDULE"

# },

# ]

# android-agent = [

# {

# key = "dedicated"

# value = "android-agent"

# effect = "NO_SCHEDULE"

# },

# ]

# redis = [

# {

# key = "dedicated"

# value = "redis"

# effect = "NO_SCHEDULE"

# }

# ]

# }

node_pools_tags = {

all = []

default-node-pool = [

"default-node-pool",

]

# preemptible-node-pool = [

# "preemptible-node-pool",

# ]

}

}
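
Once the module is applied you usually want the cluster name and location to fetch credentials. A small sketch of outputs for that, where the output names are my own choice:

```hcl
output "cluster2_name" {
  value = module.k8s_prod_cluster2.name
}

output "cluster2_location" {
  # A region here, because regional = true in the module call.
  value = module.k8s_prod_cluster2.location
}

output "cluster2_endpoint" {
  value = module.k8s_prod_cluster2.endpoint
  # Marked sensitive so the address is hidden in plan and apply output.
  sensitive = true
}
```

Keep in mind that with enable_private_endpoint = true the API server has only a private address, so kubectl has to run from a machine inside one of the master_authorized_networks ranges (10.0.0.0/8 in this config).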

#------------------------------------------------------------------------------

# IAM role for k8s clusters

#------------------------------------------------------------------------------

# module "iam_prod_cluster_stackdriver_agent_roles" {

# source = "terraform-google-modules/iam/google//examples/stackdriver_agent_roles"

# version = "3.0.0"

# project = var.project_id

# service_account_email = var.compute_engine_service_account

# }

# resource "google_project_iam_member" "k8s_stackdriver_metadata_writer" {

# role = "roles/stackdriver.resourceMetadata.writer"

# member = "serviceAccount:${var.compute_engine_service_account}"

# project = var.project_id

# }
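
The IAM blocks above are commented out. If the node service account in your project does not yet have permission to write logs and metrics, a sketch like the following could grant them. The role list is my assumption of a reasonable minimum for GKE nodes, and the local and resource names are hypothetical; adjust them to your own policy.

```hcl
locals {
  # Assumed minimal roles for a GKE node service account.
  node_sa_roles = [
    "roles/logging.logWriter",
    "roles/monitoring.metricWriter",
    "roles/monitoring.viewer",
    "roles/stackdriver.resourceMetadata.writer",
  ]
}

# One non-authoritative binding per role; existing members are left untouched.
resource "google_project_iam_member" "k8s_node_sa" {
  for_each = toset(local.node_sa_roles)

  project = var.project_id
  role    = each.value
  member  = "serviceAccount:${var.compute_engine_service_account}"
}
```

I use google_project_iam_member here because it only adds the member to each role and does not replace the other members already bound to it.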